This week's VMTN podcast is about homelabs. John Troyer asked on Twitter who had a homelab and whether they had already posted an article about it. Most bloggers already had, but I never got around to it. The weird thing is that the common theme for most virtualization bloggers seems to be physical! Take a look at what some of these guys have in their home labs and try to imagine the associated cost in terms of cooling and power, not to mention the noise.

- Jason Boche – EMC Celerra NS-120

- Chad Sakac – Building a home lab (check the storage he has at home!)

- Gabe – White box ESX home lab

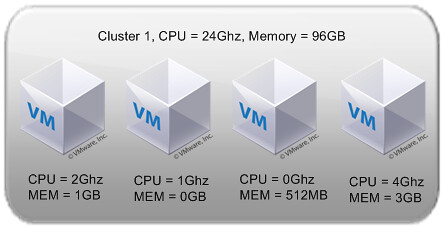

I decided to take a completely different route. Why buy three or four servers when you can run all your ESX hosts virtually on a single desktop? Okay, I must admit it is a desktop on steroids, but it does save me a lot of (rack) space, noise, heat and, of course, electricity. Here are the core components of my desktop:

- Asustek P6T WS Pro

- Intel Core i7-920

- 6 x 2GB Kingston 1333MHz

- 2 x Seagate Cheetah 15K.6 SAS in RAID-0

I also have two NAS devices that host multiple iSCSI LUNs and NFS shares. I even have replication running between the two devices! Works like a charm.

- 2 x Iomega IX4-200d

There's one crucial part missing. On my laptop I use VMware Player, but on my desktop I prefer VMware Workstation. Although VMware Player might work just fine, I like having a bit more functionality at my disposal, such as teams.

That's my lab. I installed three instances of ESXi 4.0 Update 1, each in its own VM, and another VM running Windows 2008 with vCenter 4.0 Update 1. I attached the ESXi hosts to the iSCSI LUNs and NFS shares and off we go. A single-box lab!