I received a question last week from a former colleague around vCenter Memory Metrics. There are a couple of places where memory details are shown on a “VM level” within the vCenter client. The first tab that we will discuss is the Summary tab. It shows “General” and “Resources”. It appears that there is a lot of confusion around this topic and that probably comes from the fact that some of the Performance Metrics are named similarly but don’t always refer to the same.

Lets start with “General”:

In the screenshot above you can see 2 fields related to memory:

- Memory (2048MB)

- Memory Overhead (110.63MB)

The first one, Memory, is an easy one. This is the amount of memory you provisioned your VM with, in this case 2048MB. The second field is Memory Overhead. Memory Overhead is the amount of memory the VMkernel thinks it will need to run the virtualized workload, in this case 110.63MB. This typicall would include things like page tables, frame buffers etc.

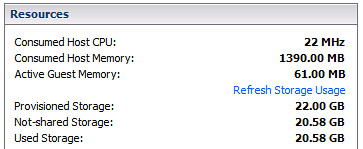

That brings us to the Resources sections:

This section shows again two fields related to memory:

- Consumed Host Memory (1390.00MB)

- Active Guest Memory (61.00MB)

Consumed and Active is where it becomes a bit less obvious but again it isn’t rocket science. Consumed Host Memory is the amount of physical memory that has been allocated to the virtual machine. This also includes things like memory overhead, that also means that Consumed can be larger than what has been provisioned. To make it a bit more complex it should be noted that in the “Performance Tab” the “Consumed” Counter doesn’t actually include Memory Overhead!

Active Memory more or less already explains it, it is what the VMkernel believes is currently being actively used by the VM. Now it should be pointed out here that this is an estimate calculated by a form of statistical sampling.

The second tab that contains details around memory is “Resource Allocation”. Looking at the tab I guess it is obvious that this one contains more details and is more complex than the summary tab:

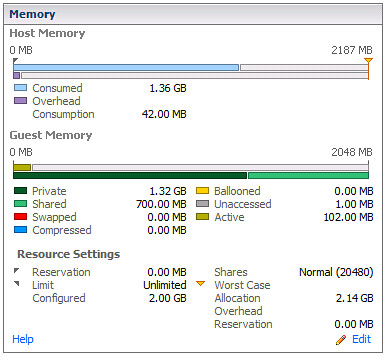

The Memory section contains three sub-sections and I have carved them up as such:

The first section is the host memory:

- Consumed (1.36GB)

- Overhead Consumption (42.00MB)

Again, Consumed is the amount of machine memory currently allocated to the VM. In other words, out of the 2GB provisioned currently 1.36GB is being consumed by that VM. The Overhead Consumption is the amount of memory being consumed for the virtualization overhead, as you can see it is less than what the VMkernel expected to use as mentioned in the first screenshot. I guess you could do the math easily:

Consumed = Private + Overhead Consumption

Guest Memory

- Private (1.32GB)

- Shared (700.00MB)

- Swapped (0.00MB)

- Compressed (0.00MB)

- Ballooned (0.00MB)

- Unaccessed (1.00MB)

- Active (102.00MB)

This is the part where it gets slightly more complicated. Private is the amount of memory that is physically backed by the Host. In other words 1.32GB is physically stored. Shared is the total amount of memory shared by TPS. Swapped, Compressed and Ballooned speak for itself in my opinion but lets be absolutely clear here. Swapped the amount of memory reclaimed by VMkernel swapping, Compressed is the amount of memory stored in the VMs compression cache and Ballooned is the amount of memory reclaimed by the Balloon Driver. Ultimately all of these should be 0.

There’s one which I couldn’t really explain which is Unaccessed. The documentation describes it as “the amount of memory that has never been referenced by the guest”. Active is the amount of memory actively used, again it is an estimate done by statistical sampling. (Did you notice it changed from 61MB to 102MB.)

The last section is Resource Settings, I guess most are obvious (like Reservation, Limit, Configured, Shares) but the two that might not be are:

- Worst Case Allocation (2.14GB)

- Overhead Reservation (0.00MB)

Worst Case Allocation is the amount of memory that the virtual machine can allocate when ALL virtual machines consume the full amount of allocated resources. Basically when there is severe overcommitment this is what the VM will get in the worst possible case. This is also one of the key metrics to keep an eye on in my opinion. Especially when you are over-committing and over-provisioning your systems this metric will be a key-indicator. Overhead Reservation is the amount of Overhead Memory reserved, not much to say about that one.