Cormac and I have been busy the past couple of weeks updating the vSAN Deep Dive to 7.0 U3. Yes, there is a lot to update and add, but we are actually going through it at a surprisingly rapid pace. I guess it helps that we had already written dozens of blog posts on the various topics we need to update or add. One of those topics is “witness failure resilience” which was introduced in vSAN 7.0 U3. I have discussed it before on this blog (here and here) but I wanted to share some of the findings with you folks as well before the book is published. (No, I do not know when the book will be available on Amazon just yet!)

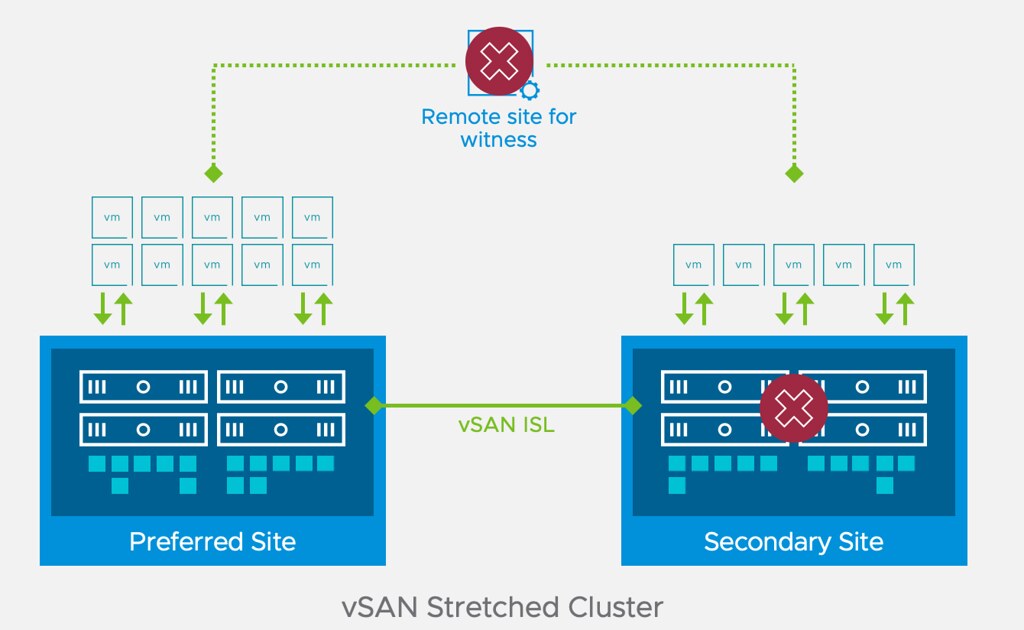

In the scenario below, we failed the secondary site of our stretched cluster completely. We can examine the impact of this failure through RVC on vCenter Server, which gives us a better understanding of the situation and of how the witness failure resilience mechanism actually works. Note that the output below has been truncated for readability. Let’s take a look at the output of RVC (vsan.vm_object_info) for our VM directly after the failure.

    VM R1-R1:
      Disk backing: [vsanDatastore] 0b013262-0c30-a8c4-a043-005056968de9/R1-R1.vmx
        RAID_1
          RAID_1
            Component: 0b013262-c2da-84c5-1eee-005056968de9 , host: 10.202.25.221 votes: 1, usage: 0.1 GB, proxy component: false)
            Component: 0b013262-3acf-88c5-a7ff-005056968de9 , host: 10.202.25.201 votes: 1, usage: 0.1 GB, proxy component: false)
          RAID_1
            Component: 0b013262-a687-8bc5-7d63-005056968de9 , host: 10.202.25.238 votes: 1, usage: 0.1 GB, proxy component: true)
            Component: 0b013262-3cef-8dc5-9cc1-005056968de9 , host: 10.202.25.236 votes: 1, usage: 0.1 GB, proxy component: true)
          Witness: 0b013262-4aa2-90c5-9504-005056968de9 , host: 10.202.25.231 votes: 3, usage: 0.0 GB, proxy component: false)
          Witness: 47123362-c8ae-5aa4-dd53-005056962c93 , host: 10.202.25.214 votes: 1, usage: 0.0 GB, proxy component: false)
          Witness: 0b013262-5616-95c5-8b52-005056968de9 , host: 10.202.25.228 votes: 1, usage: 0.0 GB, proxy component: false)

As can be seen, the witness component on the witness host holds 3 votes, the components on the failed site (secondary) hold 2 votes in total, and the components on the surviving data site (preferred) hold 2 votes in total. The two remaining witness components hold 1 vote each. After the full site failure has been detected, the votes are recalculated to ensure that a subsequent witness host failure does not impact the availability of the VMs. Below is the RVC output once again, this time after the recalculation.

    VM R1-R1:
      Disk backing: [vsanDatastore] 0b013262-0c30-a8c4-a043-005056968de9/R1-R1.vmx
        RAID_1
          RAID_1
            Component: 0b013262-c2da-84c5-1eee-005056968de9 , host: 10.202.25.221 votes: 3, usage: 0.1 GB, proxy component: false)
            Component: 0b013262-3acf-88c5-a7ff-005056968de9 , host: 10.202.25.201 votes: 3, usage: 0.1 GB, proxy component: false)
          RAID_1
            Component: 0b013262-a687-8bc5-7d63-005056968de9 , host: 10.202.25.238 votes: 1, usage: 0.1 GB, proxy component: false)
            Component: 0b013262-3cef-8dc5-9cc1-005056968de9 , host: 10.202.25.236 votes: 1, usage: 0.1 GB, proxy component: false)
          Witness: 0b013262-4aa2-90c5-9504-005056968de9 , host: 10.202.25.231 votes: 1, usage: 0.0 GB, proxy component: false)
          Witness: 47123362-c8ae-5aa4-dd53-005056962c93 , host: 10.202.25.214 votes: 3, usage: 0.0 GB, proxy component: false)

As can be seen, the votes for the various components have changed. Each component on the surviving data site now has 3 votes instead of 1, the witness on the witness host went from 3 votes to 1, and on top of that, the witness that is stored in the surviving fault domain now also has 3 votes instead of 1. As a result, quorum would not be lost even if the witness component on the witness host is impacted by a failure. A very useful enhancement in vSAN 7.0 Update 3 for stretched cluster configurations, if you ask me.
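To make the arithmetic explicit, here is a minimal Python sketch that tallies the votes from the two RVC outputs and checks whether the object keeps quorum (more than 50% of all votes). This is just my own illustration, not anything vSAN runs internally. The `has_quorum` helper and the location labels are hypothetical names, and I am assuming that the witness on 10.202.25.228 lives in the failed secondary site, as it no longer appears in the second (truncated) output.

```python
def has_quorum(votes, failed_locations):
    """Return True if the surviving votes are more than 50% of all votes."""
    total = sum(v for _, v in votes)
    alive = sum(v for loc, v in votes if loc not in failed_locations)
    return alive * 2 > total

# Votes directly after the site failure, before the recalculation.
before = [
    ("preferred", 1), ("preferred", 1),   # components on .221 and .201
    ("secondary", 1), ("secondary", 1),   # components on .238 and .236
    ("witness_host", 3),                  # witness on .231
    ("preferred", 1),                     # witness on .214
    ("secondary", 1),                     # witness on .228 (site is an assumption)
]

# Votes after the recalculation, as listed in the second output.
after = [
    ("preferred", 3), ("preferred", 3),   # components on .221 and .201
    ("secondary", 1), ("secondary", 1),   # components on .238 and .236
    ("witness_host", 1),                  # witness on .231
    ("preferred", 3),                     # witness on .214
]

double_failure = {"secondary", "witness_host"}

print(has_quorum(before, {"secondary"}))   # True: 6 of 9 votes remain
print(has_quorum(before, double_failure))  # False: only 3 of 9 votes remain
print(has_quorum(after, {"secondary"}))    # True: 10 of 12 votes remain
print(has_quorum(after, double_failure))   # True: 9 of 12 votes remain
```

With the original vote distribution, losing the witness host after the secondary site would leave the preferred site with only 3 of 9 votes. After the recalculation, the preferred site holds 9 of the 12 listed votes on its own, which is why the witness host is no longer needed for quorum.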