I have written a few articles about vSAN Data Protection now, and my last article featured a nice vSAN DP demo. A very good question was asked in the comment section about vSAN Stretched Clusters: are snapshots also stretched across locations? This is a great question, as there are a couple of things which are probably worth explaining again.

vSAN Data Protection relies on the snapshot capability which was introduced with vSAN ESA. This snapshot capability in vSAN ESA is significantly different from the one in vSAN OSA or VMFS. With vSAN OSA and VMFS, when you create a snapshot, a new object (vSAN) or file (VMFS) is created. With vSAN ESA this is no longer the case: we don’t create additional files or objects, we create a copy of the metadata structure instead. This is why vSAN ESA snapshots perform much better than vSAN OSA or VMFS snapshots do, as we no longer need to traverse multiple files or objects to read data. We can simply use the same object and leverage the metadata structure to keep track of what has changed.

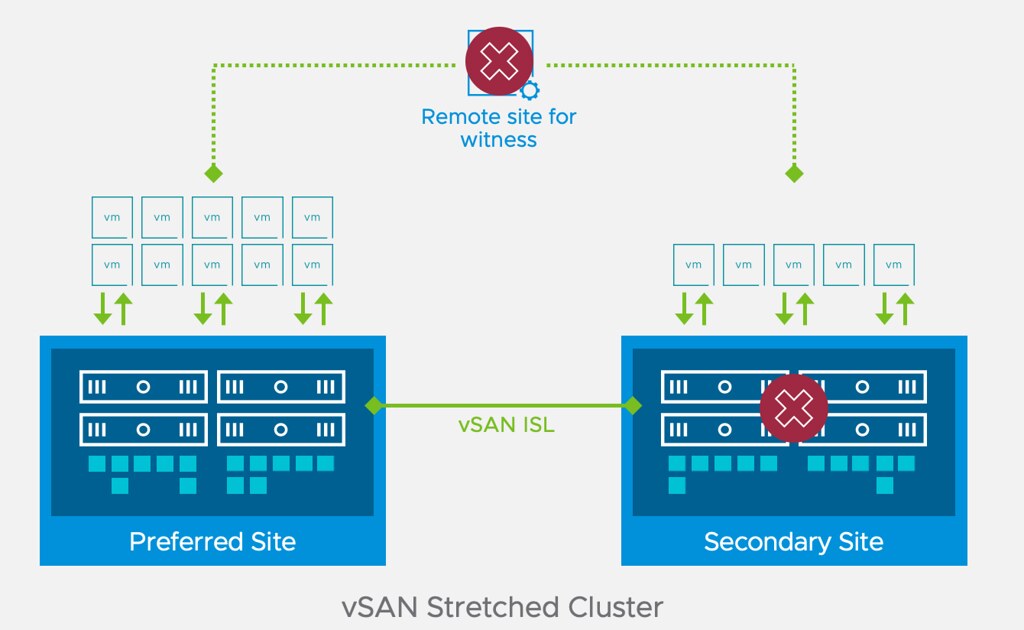
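To make the difference a bit more tangible, here is a minimal toy model in Python. It is purely illustrative: the class names (`ChainedDisk`, `MetadataDisk`) and the "lookups" counter are my own assumptions for the sake of the example, and they do not reflect how vSAN is actually implemented. The first class creates a new delta layer per snapshot and has to walk the chain on reads; the second keeps all data in one object and only copies the block map when a snapshot is taken.

```python
# Toy model only: hypothetical classes, not a representation of vSAN internals.

class ChainedDisk:
    """Snapshot = a new delta layer; reads walk layers from newest to oldest."""

    def __init__(self):
        self.layers = [{}]  # layers[-1] is the active (writable) layer

    def write(self, block, data):
        self.layers[-1][block] = data

    def snapshot(self):
        self.layers.append({})  # a new delta object/file per snapshot

    def read(self, block):
        lookups = 0
        for layer in reversed(self.layers):
            lookups += 1
            if block in layer:
                return layer[block], lookups
        return None, lookups


class MetadataDisk:
    """Snapshot = a copy of the block map; the data stays in one object."""

    def __init__(self):
        self.store = []      # single append-only data store
        self.block_map = {}  # logical block -> position in the store
        self.snapshots = []  # frozen copies of the block map

    def write(self, block, data):
        self.store.append(data)
        self.block_map[block] = len(self.store) - 1

    def snapshot(self):
        self.snapshots.append(dict(self.block_map))

    def read(self, block, snapshot_id=None):
        mapping = self.block_map if snapshot_id is None else self.snapshots[snapshot_id]
        position = mapping.get(block)
        data = None if position is None else self.store[position]
        return data, 1  # always a single map lookup


chained, meta = ChainedDisk(), MetadataDisk()
for disk in (chained, meta):
    disk.write(0, "a")
    for _ in range(3):
        disk.snapshot()
    disk.write(1, "b")

chained_result = chained.read(0)  # ("a", 4): walks all four layers
meta_result = meta.read(0)        # ("a", 1): one lookup, regardless of snapshot count
print(chained_result, meta_result)
```

In this sketch the read cost of the chained model grows with the number of snapshots, while the metadata model stays at a single lookup. That is the intuition behind the performance difference, nothing more.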

Now, with vSAN, as most of you hopefully know, objects (and their components) are placed across the cluster based on what is specified within the storage policy that is associated with the object or VM. In other words, if the policy states FTT=1 and RAID-1, then you will see two copies of the data. If the policy states the data needs to be stretched across locations, and within each location be protected with RAID-5, then you will see a RAID-1 configuration across sites and a RAID-5 configuration within each site. As vSAN ESA snapshots are an integral part of the object, the snapshots automatically follow all requirements as defined within the policy. In other words, if the policy says stretched, then the snapshot will also automatically be stretched.
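The reasoning above can be sketched in a few lines of Python. Again, this is a hypothetical model: `Policy`, `object_layout`, and `snapshot_layout` are names I made up for illustration, and they are not part of any vSAN API. The point is simply that the snapshot has no placement logic of its own; it inherits whatever layout the policy produces for the object.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical, simplified storage policy."""
    stretched: bool
    local_raid: str  # protection within a site, e.g. "RAID-1" or "RAID-5"


def object_layout(policy):
    """Describe the placement tree the policy would result in."""
    if policy.stretched:
        # RAID-1 across the two sites, local protection within each site
        return {
            "RAID-1 across sites": {
                "site A": policy.local_raid,
                "site B": policy.local_raid,
            }
        }
    return {"single site": policy.local_raid}


def snapshot_layout(policy):
    # An ESA snapshot is part of the object, so it follows the object's layout.
    return object_layout(policy)


stretched_r5 = Policy(stretched=True, local_raid="RAID-5")
print(object_layout(stretched_r5))
print(snapshot_layout(stretched_r5) == object_layout(stretched_r5))
```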

There is one caveat I want to call out, and for that I want to show a diagram. The diagram below shows the Data Protection Appliance, aka the snapshot manager appliance. As you can see, it states “metadata decoupled from appliance” and it links to a global namespace object. This global namespace object is where all the details of the protected VMs (and more) are stored. As you can imagine, both the snapshot manager appliance and the global namespace object should also be stretched. For the global namespace object this means that you need to ensure that the default datastore policy is set to “stretched”, and for the snapshot manager appliance you can simply select the correct policy when provisioning the appliance. Either way, make sure the default datastore policy aligns with the disaster recovery and data protection policy.

I hope this helps those exploring vSAN Data Protection in a stretched cluster configuration!