I was playing around with vShield App and locked out my vCenter VM, which happened to be hosted on the cluster protected by vShield App. Yes, I know that is not recommended, but I have limited compute resources in my lab and can’t spare a full server just for vCenter, so I figured I would try it anyway. Besides, I learn a lot more by breaking stuff.

I wanted to know what would happen if my vShield App virtual machine failed. So I killed it, and of course I couldn’t reach vCenter anymore. The reason is that a so-called dvfilter is used. The dvfilter basically captures the traffic and sends it to the vShield App VM, which inspects it and then sends it on to the VM (or not, depending on the rules). As I had killed my vShield App VM, there was no way traffic would get through. If vCenter had been available I would just have vMotioned the VMs to another host and the problem would have been solved, but it was vCenter itself that was impacted by this issue. Before I started digging myself I did a quick Google search and noticed this post by vTexan. He had locked himself out by creating strict rules, but my scenario was different. What were my options?

Well, there are multiple options of course:

- Move the VM to an unprotected host

- Disarm the VM

- Uninstall vShield

As I did not have an unprotected host in my cluster and did not want to uninstall vShield, I had only one option left: disarming the VM. I figured it couldn’t be too difficult, and it actually wasn’t:

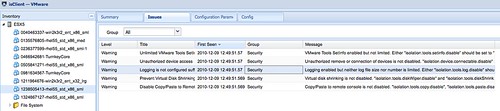

- Connect your vSphere Client to the ESXi host which is running vCenter

- Power Off the vCenter VM

- Right-click the vCenter VM and go to “Edit Settings”

- Go to the Options tab and click General under Advanced

- Click Configuration Parameters

- Look for the “ethernet0.filter0” entries and remove both values

- Click OK twice and power on your vCenter VM
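If you would rather see what the steps above boil down to, here is a minimal sketch in Python. It is not what I did (I used the vSphere Client); it only shows the same idea applied to the text of a configuration file, assuming the usual `key = "value"` layout. The function name `strip_filter_entries`, the key suffixes `.name` and `.param1`, and the sample values are made up for illustration, so check your own entries before removing anything, and only touch the configuration while the VM is powered off.

```python
def strip_filter_entries(vmx_text, prefix="ethernet0.filter0"):
    """Drop every line whose key is `prefix` or starts with `prefix.`.

    Returns the cleaned text and the list of removed lines.
    Hypothetical helper, assuming one `key = "value"` pair per line.
    """
    prefix = prefix.lower()
    kept, removed = [], []
    for line in vmx_text.splitlines():
        # The key is everything before the first "=" sign.
        key = line.split("=", 1)[0].strip().lower()
        if key == prefix or key.startswith(prefix + "."):
            removed.append(line)
        else:
            kept.append(line)
    return "\n".join(kept) + "\n", removed


# Illustrative sample only: suffixes and values are placeholders.
sample = (
    'ethernet0.present = "TRUE"\n'
    'ethernet0.filter0.name = "example-filter"\n'
    'ethernet0.filter0.param1 = "example-param"\n'
    'displayName = "vcenter"\n'
)

cleaned, removed = strip_filter_entries(sample)
for line in removed:
    print("removed:", line)
```

The helper returns the removed lines as well, so you can keep a note of what was there in case you want to compare it with what vShield App adds back later.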

As soon as your vCenter VM is booted you should have access to vCenter again. Isn’t that cool? What would happen when your vShield App returns? Would this vCenter VM be left unprotected? No, it wouldn’t. vShield App would notice that the VM is not protected and add the correct filter details again, so that the vCenter VM is protected once more. If you want to speed this process up you could of course also vMotion the VM to a protected host. Keep in mind that the filter is inserted again during the vMotion, which could cause the vCenter VM to disconnect. In all my tests so far it reconnected at some point, but that is no guarantee of course.

Tomorrow I am going to apply a security policy which will lock out my vCenter Server and try to recover from that… I’ll keep you posted.

** Disclaimer: This is for educational purposes, please don’t try this at home… **