I have written multiple articles(1, 2, 3, 4) on this topic so hopefully by now everyone knows that Large Pages are not shared by TPS. However when there is contention the large pages will be broken up in small pages and those will be shared based on the outcome of the TPS algorythm. Something I have always wondered and discussed with the developers a while back is if it would be possible to have an indication of how many pages can possibly be shared when Large Pages would be broken down. (Please note that we are talking about Small Pages backed by Large Pages here.) Unfortunately there was no option to reveal this back then.

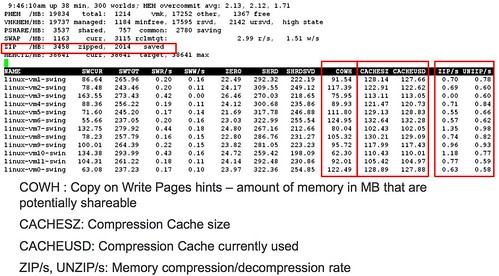

While watching the VMworld esxtop session “Troubleshooting using ESXTOP for Advanced Users, TA6720” I noticed something really cool. Which is shown in the quote and the screenshot below which is taken from the session. Other new metrics that were revealed in this session and shown in this screenshot are around Memory Compression. I guess the screenshot speaks for itself.

- COWH : Copy on Write Pages hints – amount of memory in MB that are potentially shareable,

- Potentially shareable which are not shared. for instance when large pages are used, this is a good hint!!

There was more cool stuff in this session that I will be posting about this week, or at least adding to my esxtop page for completeness.