I have written multiple articles (1, 2, 3, 4) on this topic, so hopefully by now everyone knows that Large Pages are not shared by TPS. However, when there is contention the large pages will be broken up into small pages, and those will be shared based on the outcome of the TPS algorithm. Something I have always wondered about, and discussed with the developers a while back, is whether it would be possible to get an indication of how many pages could potentially be shared if Large Pages were broken down. (Please note that we are talking about Small Pages backed by Large Pages here.) Unfortunately there was no option to reveal this back then.

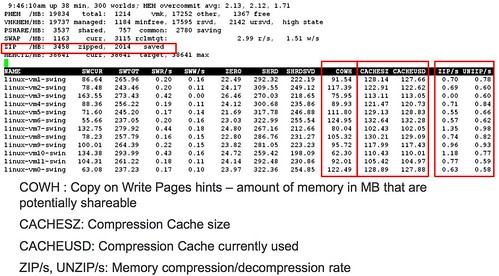

While watching the VMworld esxtop session “Troubleshooting using ESXTOP for Advanced Users, TA6720” I noticed something really cool, shown in the screenshot (taken from the session) and in the quote below. Other new metrics revealed in this session and visible in the screenshot relate to Memory Compression. I guess the screenshot speaks for itself.

- COWH: Copy-on-Write Pages hints – amount of memory in MB that is potentially shareable,

- Potentially shareable pages that are not shared, for instance when large pages are used. This is a good hint!!
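To make the COWH idea a bit more concrete, here is a minimal Python sketch under simplifying assumptions: a 2 MB large page is treated as 512 contiguous 4 KB small pages, each small page is hashed, and a hash match is confirmed by a full byte comparison before that copy is counted as redundant. The function name `potentially_shareable_bytes` is hypothetical, and this is only a toy model loosely based on how TPS matches pages, not VMware's implementation or the way esxtop actually computes COWH.

```python
import hashlib

SMALL_PAGE = 4 * 1024                       # 4 KB small page
LARGE_PAGE = 2 * 1024 * 1024                # 2 MB large page
PAGES_PER_LARGE = LARGE_PAGE // SMALL_PAGE  # 512 small pages per large page


def potentially_shareable_bytes(large_pages):
    """Estimate how many bytes could be reclaimed if every large page were
    broken into 4 KB pages and identical small pages were collapsed into a
    single shared copy (hypothetical model, not the real TPS code)."""
    first_seen = {}  # hash digest -> first 4 KB chunk seen with that hash
    shareable = 0
    for lp in large_pages:
        if len(lp) != LARGE_PAGE:
            raise ValueError("each large page must be exactly 2 MB")
        for off in range(0, LARGE_PAGE, SMALL_PAGE):
            chunk = lp[off:off + SMALL_PAGE]
            digest = hashlib.sha1(chunk).digest()
            original = first_seen.get(digest)
            if original is None:
                first_seen[digest] = chunk
            elif original == chunk:
                # Hash match confirmed by a full comparison: this copy is redundant.
                shareable += SMALL_PAGE
    return shareable
```

Two all-zero large pages, for example, contain 1024 identical small pages, so all but one of them count as shareable; divide the result by `1024 * 1024` to get an MB figure comparable in spirit to COWH.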

There was more cool stuff in this session that I will be posting about this week, or at least adding to my esxtop page for completeness.

It looks like when you hit 100% memory allocation on a Nehalem host, and large pages are broken into 4k pages to allow TPS to work, ESX never reverts to large pages until you reboot that host. Actually, now that I think of it, I don’t remember checking whether evacuating all VMs off the host and back again would be enough.

I would really like to know how large the performance penalty is on a Nehalem CPU when using standard 4k pages.

First, I want to say that you always do a thorough job of explaining these things, and in my primary role as the SME for support I find this kind of information the “steak dinner” for my appetite for knowledge. Anyway, we have been meeting with the engineering team and recently heard that they will be disabling “LPE”. My guess is that the engineer may have been talking about LPS, as I have not found any reference to “LPE” anywhere. He also stated that they would be looking at reclaiming up to 30% of their current memory (these are Nehalem hosts and this has been a hot topic). They also did this on a few other clusters, and I didn’t see any improvement at all. They don’t seem to be too concerned about the hosts ever running into memory constraints. A colleague also told me about enabling “LPS” in the Windows guest, which I have yet to look into. It seems this is a decision based on trade-offs, depending on what you are willing to sacrifice and what you want to gain….

It looks like TPS is doing some sharing with large memory pages. With the host enabled for large pages (Mem.AllocGuestLargePage = 1), not under any memory pressure, and a guest OS that supports large pages and has them enabled by default (Windows 2008 R2), we do see some memory sharing being reported on a few of our machines. This doesn’t make sense if TPS doesn’t even look at large pages.

I have never seen this. To be honest, the only things I have seen being shared are zero pages.

When you say zero pages, are you referring to blocks of memory that have been zeroed out, like what Windows 2008 does to all of its memory during boot? Maybe that’s what we are seeing, because it usually happens when a machine has recently been powered on and has not seen much activity yet.
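If a guest zeroes its memory at boot, as suggested for Windows 2008 above, many of its 4 KB pages are byte-for-byte identical, so a single shared copy can back all of them. A minimal Python sketch of counting such pages in a raw memory snapshot; the function `count_zero_pages` and the idea of scanning a plain byte buffer are illustrative assumptions, not how ESX inspects guest memory.

```python
SMALL_PAGE = 4 * 1024  # 4 KB guest page


def count_zero_pages(memory: bytes, page_size: int = SMALL_PAGE) -> int:
    """Count page-aligned chunks of `memory` that contain only zero bytes.
    Any trailing partial page is ignored (hypothetical helper)."""
    zero = bytes(page_size)  # reference page filled with 0x00
    count = 0
    for off in range(0, len(memory) - page_size + 1, page_size):
        if memory[off:off + page_size] == zero:
            count += 1
    return count
```

All zero pages found this way are identical to each other, which is why they are the easiest candidates for sharing even before any other page content matches.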

Yeah, I’m betting it’s zero pages as well – at least that’s what I’d expect from prior experience. You can see zero pages for a VM right in vCenter if you select that counter while looking at the memory performance charts.

Duncan talks more about zero pages and TPS here: http://www.yellow-bricks.com/2011/01/10/how-cool-is-tps/