I got a question after the previous demo: what would happen if, after a Site Takeover, the two failed sites came back online again? I have completely ignored this part of the scenario so far, and I am not even sure why. I knew what would happen, but I wanted to test it anyway to confirm that what engineering had described actually happened. For those who cannot be bothered to watch a demo, what happens when the two failed sites come back online again is pretty straightforward. The “old” components of the impacted VMs are discarded, vSAN recreates the RAID configuration as specified within the associated vSAN Storage Policy, and then a full resync occurs so that the VM is compliant with the policy again. Let me repeat one part: a full resync will occur! So if you do a Site Takeover, I hope you understand what the impact will be. A full resync will take time, of course, and how much depends on the connection between the data locations.
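To get a feel for what "will take time" means, here is a back-of-the-envelope sketch. It is not how vSAN schedules or throttles resync traffic; it only divides the amount of data by the usable link bandwidth. The function name, the `efficiency` factor, and all numbers are my own assumptions for illustration.

```python
def estimate_resync_hours(data_tb: float, link_gbps: float, efficiency: float = 0.5) -> float:
    """Rough estimate, in hours, of how long copying data_tb across a link takes.

    Illustrative assumptions, not vSAN measurements:
      data_tb    -- data that needs to be resynced, in decimal terabytes
      link_gbps  -- raw bandwidth of the link between the data locations, in Gbit/s
      efficiency -- fraction of that bandwidth the resync can actually use (0 < e <= 1)
    """
    if data_tb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("data_tb must be >= 0, link_gbps > 0, and 0 < efficiency <= 1")
    bits_to_copy = data_tb * 1e12 * 8                     # terabytes -> bits
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits_to_copy / usable_bits_per_second / 3600   # seconds -> hours


# Hypothetical example: 10 TB over a 10 Gbit/s link, half of which is usable.
print(f"{estimate_resync_hours(10, 10, 0.5):.1f} hours")
```

Real-world numbers will differ, as other traffic shares the link and the resync competes with VM I/O, but it shows why the size of the pipe between the locations matters so much.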


Can I disable the vSAN service if the cluster is running production workloads?

I just had a discussion with someone who had to disable the vSAN service while the cluster was running a production workload. They had all their VMs running on 3rd party storage, so vSAN was empty, but when they went to the vSAN Configuration UI the “Turn Off” option was grayed out. The reason this option is grayed out is that vSphere HA was enabled, which is the case for most customers. (Probably 99.9%.) If you need to turn off vSAN, make sure to temporarily disable vSphere HA first, and of course enable it again after you have turned off vSAN! This ensures that HA is reconfigured to use the Management Network instead of the vSAN Network.

Another thing to consider: if you manually configured the “HA Isolation Address” for the vSAN Network, make sure to also change it back to an IP address on the Management Network. Lastly, if there’s still anything stored on vSAN, it will be inaccessible when you disable the vSAN service. Of course, if nothing is running on vSAN, then there will be no impact on the workload.
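The order of operations described above can be written down as a small checklist generator. This is only a sketch in plain Python: it does not call vCenter or any VMware API, the function and parameter names are hypothetical, and the position of the isolation address step (before re-enabling HA) is my own assumption.

```python
from typing import List


def plan_vsan_turn_off(ha_enabled: bool,
                       isolation_address_on_vsan: bool,
                       data_on_vsan: bool) -> List[str]:
    """Return the manual steps, in order, for turning off the vSAN service.

    Hypothetical helper: it only builds a checklist, it changes nothing.
    """
    steps = []
    if data_on_vsan:
        # Anything still stored on vSAN becomes inaccessible once the service is off.
        steps.append("Move everything still stored on vSAN elsewhere")
    if ha_enabled:
        # "Turn Off" is grayed out in the UI while vSphere HA is enabled.
        steps.append("Temporarily disable vSphere HA")
    steps.append("Turn off the vSAN service")
    if isolation_address_on_vsan:
        # Assumed position: do this before HA is switched back on.
        steps.append("Change the HA isolation address to an IP on the Management Network")
    if ha_enabled:
        # Re-enabling reconfigures HA to use the Management Network.
        steps.append("Re-enable vSphere HA")
    return steps


for number, step in enumerate(plan_vsan_turn_off(True, True, False), start=1):
    print(f"{number}. {step}")
```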

Unexplored Territory Episode 088 – Stretching VMware Cloud Foundation featuring Paudie O’Riordan

The first episode of 2025 features one of my favorite colleagues, Paudie O’Riordan. Paudie works on the same team as I do, and although we’ve both roamed around a lot, somehow we always ended up either on the same team or in very close proximity. Paudie is a storage guru who, over the last few years, has helped many customers with their VCF (or vSAN) proof of concept, and on top of that has helped countless customers understand difficult failure scenarios in a stretched environment when things went south. In Episode 088 Paudie discusses the many dos and don’ts! This is an episode you cannot miss out on!

What happened to the option “none – stretched cluster” in storage policies?

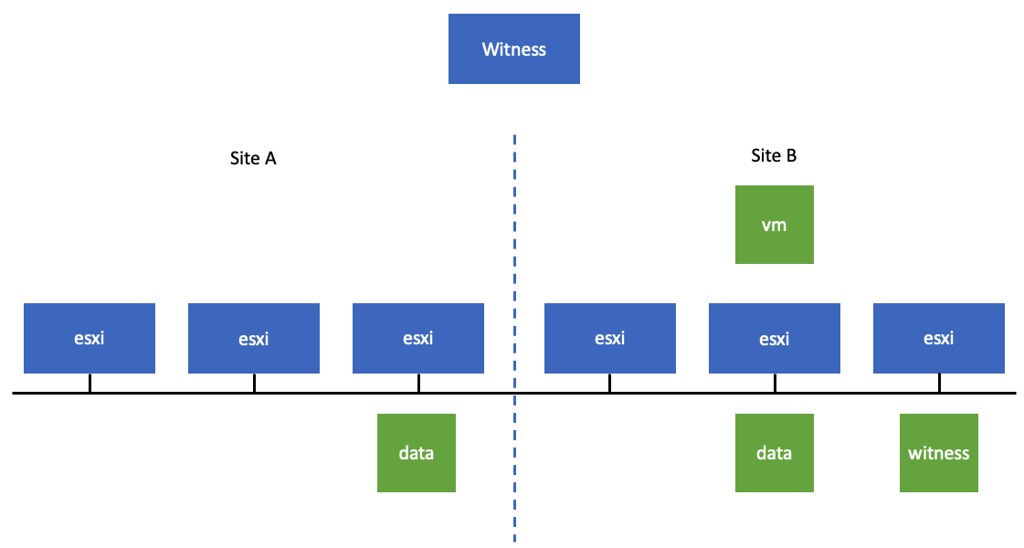

Starting with 8.0 Update 2, support for the option “None – Stretched Cluster” in your storage policy for a vSAN Stretched Cluster configuration has been removed. The reason for this is that it led to a lot of situations where customers had mistakenly used this option and only realized during a failure that some VMs were no longer working. The reason the VMs stopped working is that with this policy option all components of an object were placed within a single location, but there was no guarantee that all objects of a VM would reside in the same location. So you could, for instance, end up in the situation shown in the diagram, where you have the VM running in Site B, with a data object stored in Site A and a data object stored in Site B, and on top of that the witness in Site B. For some customers this unfortunately resulted in strange behavior if there was an issue with the network between Site A and Site B.
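To make the problem concrete, here is a tiny model of the scenario above. It is a sketch, not vSAN's placement logic: the class, the function, and the object names are hypothetical, and the witness is left out to keep it short. Each object lives entirely in one site, and the VM can only reach objects in sites it can still talk to.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class VM:
    name: str
    running_site: str
    # object name -> the single site holding all components of that object
    object_sites: Dict[str, str]


def inaccessible_objects(vm: VM, reachable_sites: Set[str]) -> List[str]:
    """Return the VM's objects that sit in a site the VM can no longer reach."""
    return sorted(obj for obj, site in vm.object_sites.items()
                  if site not in reachable_sites)


# The VM runs in Site B, but its two data objects ended up in different sites.
vm = VM("app01", running_site="Site B",
        object_sites={"disk-1": "Site A", "disk-2": "Site B"})

# Healthy network: both sites are reachable, nothing is lost.
print(inaccessible_objects(vm, {"Site A", "Site B"}))

# Network issue between Site A and Site B: only the VM's own site is reachable.
print(inaccessible_objects(vm, {vm.running_site}))
```

With the network between the sites down, one disk of the VM is gone while the other is still there, which is exactly the kind of strange behavior customers ran into.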

Hopefully that explains why this option is no longer available.

vSAN Stretched: Why is the witness not part of the cluster when the link between a data site and the witness fails?

Last week I received a question about vSAN Stretched which had me wondering for a while what on earth was going on. The person who asked this question was running through several failure scenarios, some of which I have also documented in the past here. The question I got is what is supposed to happen when I have the following scenario as shown in the diagram and the link between the preferred site (Site A) and the witness fails:

The answer, at least that is what I thought, was simple: all VMs will remain running, or said differently, there’s no impact on vSAN. While doing the test, the outcome was indeed the same as what I documented, and what is also documented in the Stretched Clustering Guide and the PoC Guide: the VMs remain running. However, one of the things that was noticed is that when this situation occurs, and the connection between Site A and the Witness is lost, the witness is somehow no longer part of the cluster, which is not what I would expect. The reason I would not expect this to happen is that if a second failure were to occur, for instance the ISL between Site A and Site B going down, it would directly impact all VMs. At least, that is what I assumed.

However, when I triggered that second failure and disconnected the ISL between Site A and Site B, I saw the witness reappear immediately, I saw the witness objects going from “absent” to “active”, and more importantly, all VMs remained running. The reason this happens is fairly straightforward. When running a configuration like this, vSAN has a “leader” and a “backup”, and they each run in a separate fault domain. Both the leader and the backup need to be able to communicate with the Witness for it to function correctly. If the connection between Site A and the Witness is gone, then either the leader or the backup can no longer communicate with the Witness, and the Witness is taken out of the cluster.

So why does the Witness return for duty when the second failure is triggered? Well, when the second failure is triggered the leader is restarted in Site B (as Site A is deemed lost), and the backup is already running in Site B. As both the leader and the backup can communicate again with the witness, the witness returns for duty and so will all of the components automatically and instantly. Which means that even though the ISL has failed between Site A and B after the witness was taken out of the cluster, all VMs remain accessible as the witness is reintroduced instantly to ensure availability of the workload. Pretty cool! (Thanks to vSAN engineering for providing these insights on why this happens!)
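The behavior described above boils down to one rule: the witness is part of the cluster only if both the leader and the backup can reach it. Below is a minimal sketch of that rule in Python. It is my own simplified model, not vSAN's actual membership code, and the function names and the way links are represented are assumptions for illustration.

```python
from typing import FrozenSet, Set


def link(a: str, b: str) -> FrozenSet[str]:
    """An undirected network link between two locations."""
    return frozenset((a, b))


def witness_in_cluster(leader_site: str, backup_site: str,
                       failed_links: Set[FrozenSet[str]]) -> bool:
    """The witness participates only if leader AND backup can both reach it."""
    return all(link(site, "Witness") not in failed_links
               for site in (leader_site, backup_site))


# First failure: the link between Site A and the Witness goes down.
# Leader in Site A, backup in Site B, so the leader cannot reach the witness.
failed = {link("Site A", "Witness")}
print(witness_in_cluster("Site A", "Site B", failed))   # witness is out

# Second failure: the ISL between Site A and Site B goes down as well.
# Site A is deemed lost, the leader is restarted in Site B next to the backup.
failed.add(link("Site A", "Site B"))
print(witness_in_cluster("Site B", "Site B", failed))   # witness is back
```

In this model the second failure is what brings the witness back, because it moves the leader to a site that still has a working path to the witness.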