At VMworld, various cool new technologies were previewed. In this series of articles, I will write about some of those previewed technologies. Unfortunately, I can’t cover them all, as there are simply too many. This article is about HCI / vSAN futures, which was session HCI2733BU. For those who want to see the session, you can find it here. This session was presented by Srinivasan Murari and Vijay Ramachandran. Please note that this is a summary of a session that discusses the roadmap of VMware’s HCI offering. These features may never be released, this preview does not represent a commitment of any kind, and the features (and their functionality) are subject to change. Now let’s dive into it: what is VMware planning for the future of HCI? Some of the features discussed during this session were also discussed last year; I wrote a summary here for those interested.

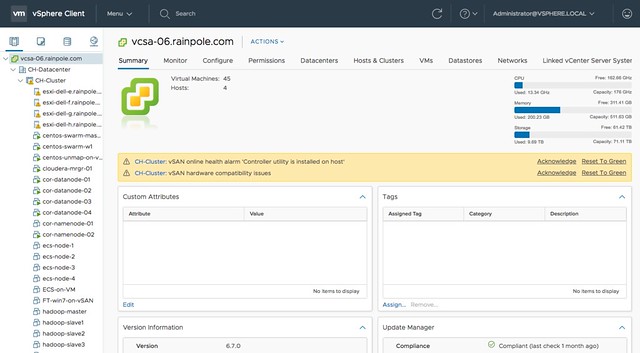

Vijay kicked off the session with an overview of the current state of HCI and, more specifically, VMware vSAN and Cloud Foundation. Some of the use cases were discussed, and it was clear that today the majority of VMware HCI solutions are running business-critical apps on top of them. More and more customers are looking to adopt full-stack HCI, as they need an end-to-end story that includes compute, networking, storage, security and business continuity for all applications running on top of it. As such, VMware’s HCI solution has been focused on lifecycle management and automation of all aspects of the SDDC. This is also the reason why VMware is currently the market leader in this space with over 20k customers and a market share of over 41%.
