For those who want to start testing the beta of vSphere Virtual SAN in their lab with vSphere 5.5, I figured it would make sense to describe how I created my nested lab. (Do note that performance will be far from optimal.) I am not going to describe how to install ESXi nested, as there are a billion articles out there that describe how to do that. I suggest creating ESXi hosts with 3 disks each and a minimum of 5GB of memory per host:

- Disk 1 – 5GB

- Disk 2 – 20GB

- Disk 3 – 200GB

After you have installed ESXi and imported a vCenter Server Appliance (my preference for lab usage, so easy and fast to set up!), add your ESXi hosts to your vCenter Server. Note: add them to the vCenter Server itself, NOT to a cluster yet.

Log in via SSH to each of your ESXi hosts and run the following commands:

- esxcli storage nmp satp rule add --satp VMW_SATP_LOCAL --device mpx.vmhba2:C0:T0:L0 --option "enable_local enable_ssd"

- esxcli storage nmp satp rule add --satp VMW_SATP_LOCAL --device mpx.vmhba3:C0:T0:L0 --option "enable_local"

- esxcli storage core claiming reclaim -d mpx.vmhba2:C0:T0:L0

- esxcli storage core claiming reclaim -d mpx.vmhba3:C0:T0:L0

These commands ensure that the disks are seen as “local” disks by Virtual SAN and that the 20GB disk is seen as an “SSD”, although it isn’t backed by an SSD. There is another option which might even be better: you can simply add a VMX setting to specify that the disks are SSDs. Check William’s awesome blog post for the how-to.
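If you have to repeat this on several hosts, a small dry-run script can help avoid typos. The following is only a sketch: it prints the same `esxcli` commands shown above rather than running them, and the helper name `tag_disk` is made up for illustration. The device names are the ones from my lab and may well differ in yours.

```shell
#!/bin/sh
# Dry-run sketch: prints the esxcli commands instead of executing them.
# tag_disk is a hypothetical helper; device names are from this lab only.

# $1 = device name, $2 = SATP options (e.g. "enable_local enable_ssd")
tag_disk() {
    printf 'esxcli storage nmp satp rule add --satp VMW_SATP_LOCAL --device %s --option "%s"\n' "$1" "$2"
    printf 'esxcli storage core claiming reclaim -d %s\n' "$1"
}

# 20GB disk: present it as a local SSD.
tag_disk mpx.vmhba2:C0:T0:L0 "enable_local enable_ssd"
# 200GB disk: present it as a local (non-SSD) data disk.
tag_disk mpx.vmhba3:C0:T0:L0 "enable_local"
```

Once the printed commands look right, you can run them on the host. Afterwards, `esxcli storage core device list -d mpx.vmhba2:C0:T0:L0` should report the device as local and as an SSD.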

After running these commands we need to make sure the hosts are configured properly for Virtual SAN. If you have not done so already, add them to your vCenter Server, but without adding them to a cluster! Just add them at the Datacenter level.

Now we will properly configure the hosts. We need to create an additional VMkernel adapter; do this for each of the three hosts:

- Click on your host within the web client

- Click “Manage” -> “Networking” -> “VMkernel Adapters”

- Click the “Add host networking” icon

- Select “VMkernel Network Adapter”

- Select the correct vSwitch

- Provide an IP address and tick the “Virtual SAN” traffic tickbox!

- Next -> Next -> Finish
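For those who prefer the command line, roughly the same result can be reached with `esxcli` on each host. The sketch below is a dry run that only prints the commands. The port group name `VSAN`, the interface `vmk1`, `vSwitch0` and the IP addressing are assumptions for illustration and are not taken from my lab; the helper name `vsan_vmk_cmds` is made up as well.

```shell
#!/bin/sh
# Dry-run sketch: prints esxcli commands for a Virtual SAN VMkernel adapter.
# ASSUMPTIONS: vSwitch0, port group "VSAN", vmk1 and the 10.0.0.x/24
# addressing are placeholders; vsan_vmk_cmds is a hypothetical helper.

# $1 = vSwitch, $2 = port group, $3 = vmk interface, $4 = IP, $5 = netmask
vsan_vmk_cmds() {
    echo "esxcli network vswitch standard portgroup add --portgroup-name=$2 --vswitch-name=$1"
    echo "esxcli network ip interface add --interface-name=$3 --portgroup-name=$2"
    echo "esxcli network ip interface ipv4 set --interface-name=$3 --ipv4=$4 --netmask=$5 --type=static"
    # Tag the new interface for Virtual SAN traffic.
    echo "esxcli vsan network ipv4 add --interface-name=$3"
}

vsan_vmk_cmds vSwitch0 VSAN vmk1 10.0.0.11 255.255.255.0
```

Review the output per host (each host needs its own IP address) before running anything.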

When this is configured for all three hosts, configure a cluster:

- Click your “Datacenter” object

- On the “Getting started” tab click “Create a cluster”

- Give the cluster a name and tick the “Turn On” tickbox for Virtual SAN

- Also enable HA and DRS if required

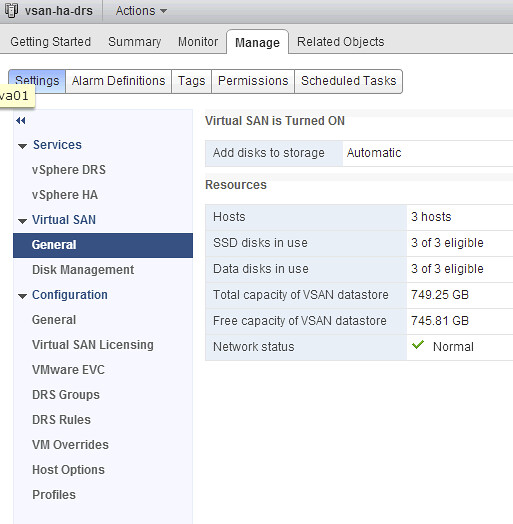

Now you should be able to move your hosts into the cluster. With the Web Client for vSphere 5.5 you can simply drag and drop the hosts one by one into the cluster. VSAN will now be automatically configured for these hosts… Nice, right? When all configuration tasks are completed, just click on your Cluster object and then “Manage” -> “Settings” -> “Virtual SAN”. You should now see the number of hosts that are part of the VSAN cluster, the number of SSDs and the number of data disks.

Now before you get started there is one thing you will need to do, and that is enable “VM Storage Policies” on your cluster / hosts. You can do this via the Web Client as follows:

- Click the “home” icon

- Click “VM Storage Policies”

- Click the little policy icon with the green checkmark, second from the left

- Select your cluster and click “Enable” and then close

Note that although you have now enabled VM Storage Policies, there are no pre-defined policies. Yes, there is a “default policy”, but you can only see that on the command line. For those interested, just open up an SSH session and run the following command:

~ # esxcli vsan policy getdefault

Policy Class Policy Value

------------ --------------------------------------------------------

cluster (("hostFailuresToTolerate" i1) )

vdisk (("hostFailuresToTolerate" i1) )

vmnamespace (("hostFailuresToTolerate" i1) )

vmswap (("hostFailuresToTolerate" i1) ("forceProvisioning" i1))

~ #
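If you want to check a single value from that output in a script, something along these lines should work. It is a minimal sketch that assumes the output format shown above and that `awk` and `sed` are available in the shell you run it from; `ftt_for_class` is a made-up helper name.

```shell
#!/bin/sh
# Sketch: extract hostFailuresToTolerate for one policy class.
# ASSUMPTION: input looks like the `esxcli vsan policy getdefault`
# output shown above; ftt_for_class is a hypothetical helper.

# $1 = policy class (cluster, vdisk, vmnamespace, vmswap); reads stdin
ftt_for_class() {
    awk -v cls="$1" '$1 == cls' |
        sed -n 's/.*"hostFailuresToTolerate" i\([0-9][0-9]*\).*/\1/p'
}

# Demo using the output from this post as sample input.
ftt_for_class vdisk <<'EOF'
cluster (("hostFailuresToTolerate" i1) )
vdisk (("hostFailuresToTolerate" i1) )
vmnamespace (("hostFailuresToTolerate" i1) )
vmswap (("hostFailuresToTolerate" i1) ("forceProvisioning" i1))
EOF
```

On a host you would pipe the real output in instead: `esxcli vsan policy getdefault | ftt_for_class vdisk`.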

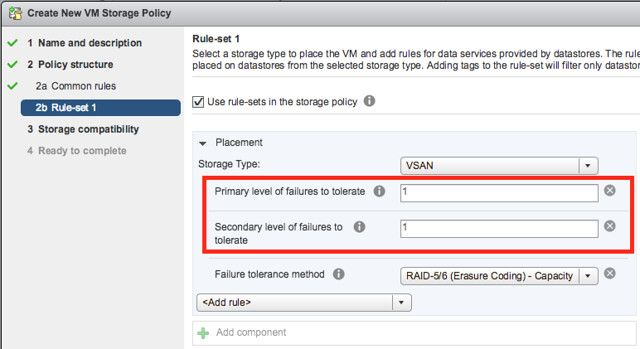

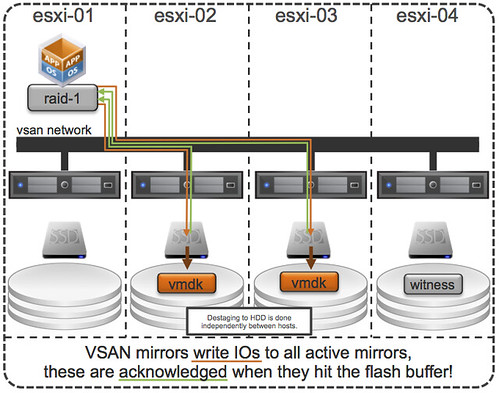

This means that with “hostFailuresToTolerate” set to 1, Virtual SAN can tolerate one host failure before you potentially lose data. In other words, in a 3 node cluster you will have 2 copies of your data and a witness. If you would like to have N+2 resilience instead of N+1, it is fairly straightforward. You do the following:

- Click the “home” icon

- Click “VM Storage Policies”

- Click the “New VM Storage Policy” icon

- Give it a name (I used “N+2 resiliency”) and click “Next”

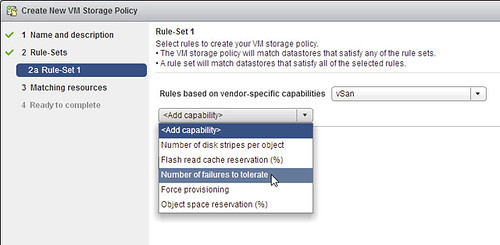

- Click “Next” on Rule-Sets and select a vendor, which will be “vSan”

- Now click <add capability> and select “Number of failures to tolerate” and set it to 2 and click “Next”

- Click “Next” -> “Finish”

That is it for creating a new profile. Of course you can make these as complex as you want; there are various other options like “Number of disk stripes” and “Flash read cache reservation %”. For now I wouldn’t recommend tweaking these too much unless you absolutely understand the impact of changing them.

To use the profile, right-click an existing virtual machine and do the following:
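One thing worth checking before you apply an N+2 policy in a small lab is whether the cluster has enough hosts for it. Tolerating n failures means n+1 copies of the data, and with the witnesses that works out to at least 2n+1 hosts, which matches the 3 node example above for n=1. The function names below are made up for illustration.

```shell
#!/bin/sh
# Sketch: copies and minimum host count for a given
# "Number of failures to tolerate" value (function names are hypothetical).

# $1 = number of failures to tolerate
replicas_for_ftt()  { echo $(( $1 + 1 )); }
min_hosts_for_ftt() { echo $(( 2 * $1 + 1 )); }

for ftt in 1 2; do
    echo "FTT=$ftt: $(replicas_for_ftt "$ftt") copies, at least $(min_hosts_for_ftt "$ftt") hosts"
done
```

So an N+2 policy needs at least five hosts; in a three host nested lab like this one you should expect it not to be satisfiable.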

- Click “All vCenter Actions”

- Click “VM Storage Service Policies”

- Click “Manage VM Storage Policies”

- Select the appropriate policy on “Home VM Storage Policy” and do not forget to hit the “Apply to disks” button

- Click OK

Now the new policy will be applied to your virtual machine and its disk objects! When deploying a new virtual machine you can also select the correct policy directly in the provisioning workflow, so that it is deployed correctly from the start.

These are some of the basics for testing VSAN in a virtual environment… now register and get ready to play!
