Over a year ago I wrote an article (multiple actually) about Software Defined Storage, VSAs and different types of solutions and how flash impacts the world. One of the articles contained a diagram and I would like to pull that up for this article. The diagram below is what I used to explain how I see a potential software defined storage solution. Of course I am severely biased as a VMware employee, and I fully understand there are various scenarios here.

As I explained the type of storage connected to this layer could be anything DAS/NFS/iSCSI/Block who cares… The key thing here is that there is a platform sitting in between your storage devices and your workloads. All your storage resources would be aggregated in to a large pool and the layer should sort things out for you based on the policies defined for the workloads running there. Now I drew this layer coupled with the “hypervisor”, but thats just because that is the world I live in.

Looking back at this article and looking at the state of the industry today, a couple of things stood out. First and foremost, the term “Software Defined Storage” has been abused by everyone and doesn’t mean much to me personally anymore. If someone says during a bloggers briefing “we have a software defined storage solution” I typically will ask them to define it, or explain what it means to them. Anyway, why did I show that diagram, well mainly because I realised over the last couple of weeks that a couple of companies/products are heading down this path.

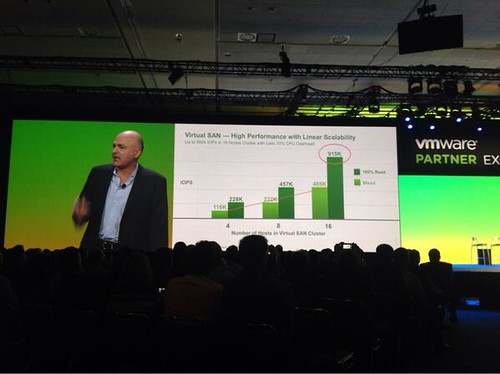

If you look at the diagram and for instance think about VMware’s own Virtual SAN product than you can see what would be possible. I would even argue that technically a lot of it would be possible today, however the product is also lacking in some of these spaces (data services) but I expect this to be a matter of time. Virtual SAN sits right in the middle of the hypervisor, the API and Policy Engine is provided by the vSphere layer, it has its own caching service… For now it isn’t supported to connect SAN storage, but if I want to I could even today simply by tagging “LUNs” as local disks.

Another product which comes to mind when looking at the diagram is Pernix Data’s FVP. Pernix managed to build a framework that sits in the hypervisor, in the data path of the VMs. They provide a highly resilient caching layer, and will be able do both flash as well as memory caching in the near future. They support different types of storage connected with the upcoming release… If you ask me, they should be in the right position to slap additional data services like deduplication / compression / encryption / replication on top of it. I am just speculating here, and I don’t know the PernixData roadmap so who knows…

Something completely different is EMC’s ViPR (read Chad’s excellent post on ViPR) and although they may not entirely fit the picture I drew today they are aiming to be that layer in between you and your storage devices and abstract it all for you and allow for a single API to ease automation and do this “end to end” including the storage networks in between. If they would extend this to allow for certain data services to sit in a different layer then they would pretty much be there.

Last but not least Atlantis USX. Although Atlantis is a virtual appliance and as such a different implementation than Virtual San and FVP, they did manage to build a platform that basically does everything I mentioned in my original article. One thing it doesn’t directly solve is the management of the physical storage devices, but today neither does FVP or Virtual SAN (well to a certain extend VSAN does…) But I am confident that this will change when Virtual Volumes is introduced as Atlantis should be able to leverage Virtual Volumes for those purposes.

Some may say, well what about VMware’s Virsto? Indeed, Virsto would also fit the picture but the end of availability was announced not too long ago. However, it has been hinted at multiple times that Virsto technology will be integrated in to other products over time.

Although by now “Software Defined Storage” is seen as a marketing bingo buzzword the world of storage is definitely changing. The question now is I guess, are you ready to change as well?

Yeah that title got your attention right… For now it is just me writing about it and nothing has been announced or promised. At VMworld I believe it was Intel who demonstrated the possibilities in this space, an All Flash Virtual SAN. A couple of weeks back during my holiday someone pointed me to a couple of articles which were around SSD endurance. Typically these types of articles deal with the upper-end of the spectrum and as such are irrelevant to most of us, and some of the articles I have read in the past around endurance were disappointing to be honest.

Yeah that title got your attention right… For now it is just me writing about it and nothing has been announced or promised. At VMworld I believe it was Intel who demonstrated the possibilities in this space, an All Flash Virtual SAN. A couple of weeks back during my holiday someone pointed me to a couple of articles which were around SSD endurance. Typically these types of articles deal with the upper-end of the spectrum and as such are irrelevant to most of us, and some of the articles I have read in the past around endurance were disappointing to be honest.