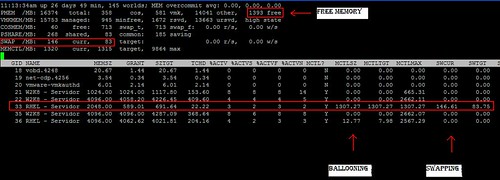

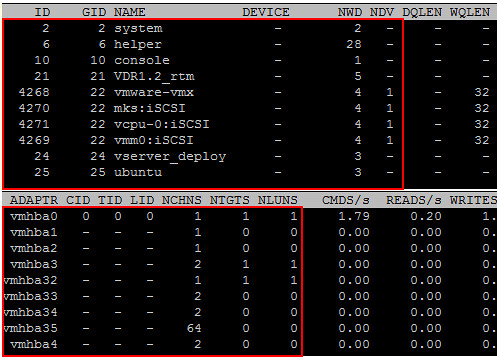

Not sure why hardly anyone picked up on this cool YouTube movie about Storage IO Control (SIOC), but I figured it was worth posting. SIOC is probably one of the coolest features coming to a vSphere version in the near future. Scott Drummonds wrote a cool article about it which shows the strength of SIOC when it comes to fairness. One might say that there already is a tool to do this, called per-VM disk shares. Well, that’s not entirely true… The diagrams depict the current situation (without…) and the future (with…):

As the diagrams clearly show, shares in the current version work on a per-host basis. When a single VM on a host floods your storage, all other VMs on the datastore will be affected. Those running on the same host could easily, by using shares, carve up the bandwidth. However, if the VM causing the load moved to a different host, the shares would be useless. With SIOC, the fairness mechanism goes one level up: disk shares are taken into account at the cluster level.
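To make the difference concrete, here is a rough Python sketch of the two situations. It is a toy model, not how ESX actually schedules IO: it assumes the array splits its throughput evenly between hosts when there is no SIOC, and the VM names, host names, and share values are made up for illustration.

```python
from collections import defaultdict

# Hypothetical example: (vm name, host, disk shares)
vms = [
    ("vm_a", "host1", 1500),
    ("vm_b", "host1", 500),
    ("vm_c", "host2", 500),
]


def per_host_allocation(vms):
    """Without SIOC: shares are only compared between VMs on the same host.

    Simplifying assumption: each host gets an equal slice of the datastore.
    """
    by_host = defaultdict(list)
    for name, host, shares in vms:
        by_host[host].append((name, shares))

    host_fraction = 1.0 / len(by_host)
    result = {}
    for members in by_host.values():
        host_total = sum(shares for _, shares in members)
        for name, shares in members:
            result[name] = host_fraction * shares / host_total
    return result


def datastore_wide_allocation(vms):
    """With SIOC: shares are compared across every VM on the datastore."""
    total = sum(shares for _, _, shares in vms)
    return {name: shares / total for name, _, shares in vms}


if __name__ == "__main__":
    print("per host:      ", per_host_allocation(vms))
    print("datastore wide:", datastore_wide_allocation(vms))
```

In this made-up setup, vm_c ends up with 50% of the datastore in the per-host model simply because it sits alone on its host, even though it has the same shares as vm_b (12.5%). With shares evaluated across the datastore, the split becomes 60% / 20% / 20%.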

There are a couple of things to keep in mind though:

- SIOC is enabled per datastore

- SIOC only applies disk shares when a certain threshold (device latency, most likely 30ms) has been reached.

- The latency value will be configurable, but changing it is not recommended for now

- SIOC carves out the array queue; this enables a faster response for VMs doing, for instance, sequential IOs

- SIOC will enforce limits in terms of IOPS when specified on the VM level

- No reservation setting for now…
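The threshold and limit behaviour from the list above can be sketched in a few lines of Python. Again, this is only an illustration under my own assumptions: the `VM` class, the `throttle` function, and the numbers are hypothetical, the 30 ms default is just the "most likely" value mentioned above, and the real mechanism works on the queue rather than on a fixed IOPS budget.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VM:
    name: str
    shares: int
    demand_iops: float
    limit_iops: Optional[float] = None  # None means no IOPS limit set


def throttle(vms, capacity_iops, latency_ms, threshold_ms=30.0):
    """Return the IOPS each VM gets in this toy model.

    Below the latency threshold, shares are ignored and only limits apply.
    At or above it, capacity is divided in proportion to shares.
    """
    def capped(vm, iops):
        # IOPS limits are enforced regardless of latency
        return iops if vm.limit_iops is None else min(iops, vm.limit_iops)

    if latency_ms < threshold_ms:
        return {vm.name: capped(vm, vm.demand_iops) for vm in vms}

    total_shares = sum(vm.shares for vm in vms)
    result = {}
    for vm in vms:
        fair_slice = capacity_iops * vm.shares / total_shares
        result[vm.name] = capped(vm, min(vm.demand_iops, fair_slice))
    return result


if __name__ == "__main__":
    vms = [
        VM("db", shares=2000, demand_iops=900),
        VM("web", shares=1000, demand_iops=900),
        VM("batch", shares=1000, demand_iops=900, limit_iops=100),
    ]
    print("10 ms:", throttle(vms, capacity_iops=1000, latency_ms=10))
    print("35 ms:", throttle(vms, capacity_iops=1000, latency_ms=35))
```

Note that the sketch does not hand unused capacity back to the other VMs (the batch VM's limit leaves some of its slice idle), and there is no reservation, in line with the last bullet.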

Anyway, enough random ramblings… here’s the movie. Watch it!

For those with a VMworld account I can recommend watching TA3461.