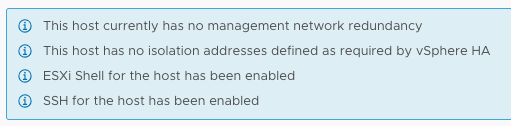

I had a comment on one of my 2-node vSAN cluster articles reporting an issue with HA when disabling the Isolation Response. The isolation response is not required for 2-node, as it is impossible to properly detect an isolation event and vSAN has a mechanism that does exactly what the Isolation Response does: kill the VMs when they are useless. The error witnessed was “This host has no isolation addresses defined as required by vSphere HA”, as also shown in the screenshot.

So now what? First of all, as also mentioned in the comments section, vSphere always checks whether an isolation address is specified. That can be the default gateway of the management network, or it can be an isolation address that you specified through the advanced setting das.isolationaddress. Using das.isolationaddress often goes hand in hand with setting das.usedefaultisolationaddress to false. That last setting, das.usedefaultisolationaddress, is what triggers the error above. In a 2-node configuration you should do the following:

- Do not configure the isolation response; the explanation can be found in the above-mentioned article

- Do not configure das.usedefaultisolationaddress; if it is configured, set it to true

- Make sure you have a gateway on the management VMkernel interface. If that is not the case, you could set das.isolationaddress to 127.0.0.1 to prevent the error from popping up.
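The checklist above can be sketched as a small offline check. This is only an illustration, not something vSphere HA runs: the function name `check_two_node_ha` and its inputs are hypothetical, and it assumes you have already copied the cluster's HA advanced options into a plain dictionary and know whether the management VMkernel interface has a gateway. It does not talk to vCenter.

```python
def check_two_node_ha(advanced_options, has_management_gateway):
    """Return recommendations for a 2-node cluster, following the checklist above.

    advanced_options: dict of HA advanced settings (name -> value), entered by hand.
    has_management_gateway: True if the management VMkernel interface has a gateway.
    Both inputs are assumptions of this sketch; nothing is read from vCenter.
    """
    findings = []

    # das.usedefaultisolationaddress should be absent or true.
    use_default = str(
        advanced_options.get("das.usedefaultisolationaddress", "true")
    ).strip().lower()
    if use_default == "false":
        findings.append(
            "das.usedefaultisolationaddress is false: remove it or set it to true"
        )

    # Any non-empty das.isolationaddress* entry counts as a custom address.
    custom_addresses = [
        value
        for name, value in advanced_options.items()
        if name.startswith("das.isolationaddress") and value
    ]

    # Without a gateway and without a custom address, the error is expected.
    if not has_management_gateway and not custom_addresses:
        findings.append(
            "no gateway on the management VMkernel interface: "
            "set das.isolationaddress to 127.0.0.1"
        )

    return findings


if __name__ == "__main__":
    options = {"das.usedefaultisolationaddress": "false"}
    for finding in check_two_node_ha(options, has_management_gateway=False):
        print(finding)
```

An empty list means none of the conditions from the checklist apply to the settings you passed in.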

Hope this helps those hitting this error message.