In the just released vSphere 5.0 Update 1 some welcome enhancements were added around vSphere HA and how a Permanent Device Loss (PDL) condition is handled. The PDL condition is a condition that is communicated by the array to ESXi via a SCSI sense code and indicates that a device (LUN) is unavailable and more than likely permanently unavailable. This is a condition which is useful for “stretched storage cluster” configurations where in the case of a failure in Datacenter-A the configuration in Datacenter-B can take over. An example of when a condition like this would be communicated by the array would be when a LUN “detached” in a site isolation. PDL is probably most common in non-uniform stretched solutions like EMC VPLEX. With VPLEX site affinity is defined per LUN. If your VM resides in Datacenter-A while the LUN it is stored on has affinity to Datacenter-B in case of failure this VM could lose access to the LUN. These enhancements will ensure the VM is killed and restarted on the other side.

Please note that action will only be taken when a PDL sense code is issued. When your storage completely fails for instance it is impossible to reach the PDL condition as there is no communication possible anymore from the array to the ESXi host and the state will be identified by the ESXi host as an All Paths Down (APD) condition. APD is a more common scenario in most environments. If you are testing these enhancements please check the log files to validate which problem has been identified.

With vSphere 5.0 and prior HA did not have a response in a PDL condition, meaning that when a virtual machine was residing on a datastore which had a PDL condition the virtual machine would just sit there. This virtual machine would be unable to read or write from disk however. As of vSphere 5.0 Update 1 a new mechanism has been introduced which allows vSphere HA to take action when a datastore has reached a PDL state. Two advanced settings make this possible. The first setting is configured on a host level and is “disk.terminateVMOnPDLDefault”. This setting can be configured in /etc/vmware/settings and should be set to “True”. This setting ensures that a virtual machine is killed when the datastore it resides on is in a PDL state. The virtual machine is killed as soon as it initiates disk I/O on a datastore which is in a PDL condition and all of the virtual machine files reside on this datastore. Note that if a virtual machine does not initiate any I/O it will not be killed!

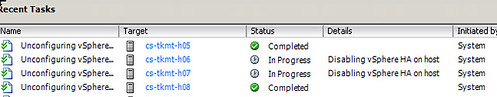

The second setting is a vSphere HA advanced setting called das.maskCleanShutdownEnabled. This setting is also not enabled by default and it will need to be set to “True”. This settings allows HA to trigger a restart response for a virtual machine which has been killed automatically due to a PDL condition. This setting allows HA to differentiate between a virtual machine which was killed due to the PDL state or a virtual machine which has been powered off by an administrator.

As soon as “disaster strikes” and the PDL sense code is sent you will see the following popping up in the vmkernel.log that indicates the PDL condition and the kill of the VM:

2012-03-14T13:39:25.085Z cpu7:4499)WARNING: VSCSI: 4055: handle 8198(vscsi4:0):opened by wid 4499 (vmm0:fri-iscsi-02) has Permanent Device Loss. Killing world group leader 4491

2012-03-14T13:39:25.085Z cpu7:4499)WARNING: World: vm 4491: 3173: VMMWorld group leader = 4499, members = 1

As mentioned earlier, this is a welcome enhancement which especially in non-uniform stretched storage environment can help in specific failure scenarios.