Just a second ago the GSS/KB team republished the KB article that explains the vSphere 5.0 problem around SvMotion / vDS / HA. I have written about this problem several times and refer you to those posts for more details. What I want to point out here is that the KB article now has a script attached which will help prevent problems until a full fix is released. This script is basically the script that William Lam wrote, but it has been fully tested and vetted by VMware. For those running vSphere 5.0 and using SvMotion on VMs attached to distributed switches, I urge you to use the script. I expect that the PowerCLI version will also be attached soon.


With vSphere 5.0 and HA can I share datastores across clusters?

I have had this question multiple times by now, so I figured I would write a short blog post about it. The question is whether you can share datastores across clusters with vSphere 5.0 and HA enabled. It comes from the fact that HA has a new feature called “datastore heartbeating” and uses the datastore as a communication mechanism.

The answer is short and sweet: Yes.

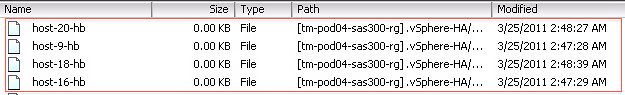

For each cluster a folder is created. The folder structure is as follows:

/<root of datastore>/.vSphere-HA/<cluster-specific-directory>/

The “cluster-specific directory” is based on the UUID of the vCenter Server, the MoID of the cluster, a random 8-character string and the name of the host running vCenter Server. So even if you use dozens of vCenter Servers, each cluster ends up with its own directory and there is no need to worry.
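To make the naming scheme a bit more concrete, here is a minimal Python sketch of how such a per-cluster folder path could be composed from the four ingredients listed above. The join order, the "-" separator, the alphabet of the random string and all example values are assumptions for illustration only; the exact format HA uses on disk may differ.

```python
import posixpath
import secrets
import string


def cluster_directory(vc_uuid, cluster_moid, vc_hostname, random_part=None):
    """Compose a hypothetical cluster-specific directory name.

    Assumption: the four ingredients are simply joined with "-".
    The real on-disk naming may differ.
    """
    if random_part is None:
        # Random 8-character string (the alphabet is an assumption).
        alphabet = string.ascii_lowercase + string.digits
        random_part = "".join(secrets.choice(alphabet) for _ in range(8))
    return "-".join([vc_uuid, cluster_moid, random_part, vc_hostname])


def ha_folder(datastore_root, cluster_dir):
    """Return /<root of datastore>/.vSphere-HA/<cluster-specific-directory>/."""
    return posixpath.join(datastore_root, ".vSphere-HA", cluster_dir) + "/"


# Two clusters managed by the same (made-up) vCenter Server, sharing one datastore.
dir_a = cluster_directory("vc-uuid-1", "domain-c7", "vc01", random_part="abcd1234")
dir_b = cluster_directory("vc-uuid-1", "domain-c9", "vc01", random_part="abcd1234")
print(ha_folder("/vmfs/volumes/ds01", dir_a))
print(ha_folder("/vmfs/volumes/ds01", dir_b))
```

Even with the same vCenter Server and the same random string, the two clusters end up in different folders because the cluster MoID differs.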

Each folder contains the files HA needs/uses, as shown in the screenshot. So there is no need to worry about sharing datastores across clusters. Frank also wrote an article about this from a Storage DRS perspective. Make sure you read it!

PS: all these details can be found in our Clustering Deepdive book… find it on Amazon.

What is das.maskCleanShutdownEnabled about?

I had a question today about what the vSphere HA advanced setting das.maskCleanShutdownEnabled is for. I described why it was introduced in my Stretched Clusters article, but will give a short summary here:

Two advanced settings have been introduced in vSphere 5.0 Update 1 to enable HA to fail over virtual machines which are located on datastores that are in a Permanent Device Loss (PDL) state. This is very specific to stretched cluster environments. The first setting is configured on a host level and is “disk.terminateVMOnPDLDefault”. This setting can be configured in /etc/vmware/settings and should be set to “True”. It ensures that a virtual machine is killed when the datastore it resides on is in a PDL state.

The second setting is a vSphere HA advanced setting called “das.maskCleanShutdownEnabled”. This setting is also not enabled by default and will need to be set to “True”. It allows HA to trigger a restart response for a virtual machine which has been killed automatically due to a PDL condition, because it lets HA differentiate between a virtual machine which was killed due to the PDL state and a virtual machine which has been powered off by an administrator.

But why is “das.maskCleanShutdownEnabled” needed for HA? From a vSphere HA perspective there are two different types of “operations”. The first is a user-initiated power-off (clean) and the other is a kill. When a virtual machine is powered off by a user, part of the process is setting the property “runtime.cleanPowerOff” to true.

Remember that when “disk.terminateVMOnPDLDefault” is configured your VMs will be killed when they issue I/O. This is where the problem arises: in a PDL scenario it is impossible to set “runtime.cleanPowerOff”, as the datastore, and as such the vmx, is unreachable. As the property defaults to “true”, vSphere HA will assume the VMs were cleanly powered off. This would result in vSphere HA not taking any action in a PDL scenario. By setting “das.maskCleanShutdownEnabled” to true, a scenario where all VMs are killed but never restarted can be avoided, as you are telling vSphere HA to assume that VMs were not shut down in a clean manner. In that case vSphere HA will assume VMs were killed UNLESS the property is set.
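The decision described above can be summarized in a small Python sketch. This is only a model of the reasoning in this post, not HA's actual implementation: the function name and the use of `None` to represent "the property could not be written" are my own assumptions.

```python
from typing import Optional


def ha_restarts_vm(clean_power_off: Optional[bool],
                   mask_clean_shutdown_enabled: bool) -> bool:
    """Model whether HA would restart a VM that is no longer running.

    clean_power_off: value of "runtime.cleanPowerOff"; None models the PDL
    case where the property could not be set because the vmx is unreachable.
    """
    if clean_power_off is None:
        # Without masking, the property is assumed "true" (clean power-off).
        # With das.maskCleanShutdownEnabled, HA assumes the VM was killed.
        assumed_clean = not mask_clean_shutdown_enabled
    else:
        assumed_clean = clean_power_off
    return not assumed_clean


# PDL kill, masking disabled: HA takes no action.
print(ha_restarts_vm(None, mask_clean_shutdown_enabled=False))
# PDL kill, masking enabled: HA restarts the VM.
print(ha_restarts_vm(None, mask_clean_shutdown_enabled=True))
# Administrator power-off: never restarted, with or without masking.
print(ha_restarts_vm(True, mask_clean_shutdown_enabled=True))
```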

If you have a stretched cluster environment, make sure to configure these settings accordingly!

Scripts release for Storage vMotion / HA problem

Last week when the Storage vMotion / HA problem went public I asked both William Lam and Alan Renouf if they could write a script to detect the problem. I want to thank both of them for their quick response and turnaround; they cranked the scripts out in literally hours. The scripts were validated multiple times in a VDS environment and worked flawlessly. Note that these scripts can detect the problem in environments using either a regular Distributed vSwitch or a Nexus 1000v, but they can only mitigate the problem in a Distributed vSwitch environment. Here are the links to the scripts:

- Perl: Identifying & Fixing Virtual Machines Affected By SvMotion / VDS Issue (William Lam)

- PowerCLI – Identifying and fixing VMs Affected By SvMotion / VDS Issue (Alan Renouf)

Once again thanks guys!

Limiting stress on storage caused by HA restarts by lowering restart concurrency?

I had a question last week, and it had me going for a while. The question was if “das.perHostConcurrentFailoversLimit” could be used to lower the hit on storage during a boot storm. By default this advanced option is set to 32, meaning that a maximum of 32 VMs will be restarted concurrently by HA on a single host. The question was if lowering this value to, for instance, 16 would help reduce the stress on storage when multiple hosts fail, or for instance in a blade environment when a chassis fails.

At first you would probably say “Yes of course it will”. Having only 16 restarts concurrently vs 32 should cut the stress in half… Well not exactly. The point here is that this setting is:

- A per host setting and not cluster wide

- Addressing power on attempts

So what is the problem with that exactly? Well, in the case of the per-host setting, if you have a 32-node cluster and 8 hosts fail, there could still be a maximum of 384 concurrent VM power-on attempts: (32 - 8 failed hosts) * 16 VM restarts max per host. Yes, it is a lot better than the 768 you would get with the default of 32, but that is still a lot of VMs hitting your storage.
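The arithmetic is simple enough to check with a few lines of Python. The function name is made up for this example; the numbers are the ones from the scenario above.

```python
def max_concurrent_power_ons(total_hosts, failed_hosts, per_host_limit):
    """Upper bound on concurrent HA power-on attempts across the cluster.

    The limit applies per surviving host, not to the cluster as a whole.
    """
    surviving_hosts = total_hosts - failed_hosts
    return surviving_hosts * per_host_limit


# 32-node cluster, 8 failed hosts.
print(max_concurrent_power_ons(32, 8, 16))  # limit lowered to 16
print(max_concurrent_power_ons(32, 8, 32))  # default limit of 32
```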

But more importantly, we are talking about power-on attempts here! A power-on attempt does not equal the boot process of the virtual machine! It is just the initial process that flips the switch of the VM from “off” to “on”. Check vCenter when you power on a VM: you will see the task complete while your VM is still booting. Reducing this number will reduce the stress on hostd, but that is about it. In other words, if you lower it to 16 you will have fewer concurrent power-on attempts, but they will be handled quickly by hostd, and before you know it the next 16 power-on attempts will be issued, nearly simultaneously!
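A toy model in Python illustrates why the boot storm barely changes. It assumes power-on attempts are issued in back-to-back batches of the configured size, that each batch takes a fixed number of seconds, and that every VM then boots for a fixed time. All timings and the function name are made-up assumptions, not measured values or actual HA behavior.

```python
import math


def peak_booting_vms(total_vms, concurrency_limit, power_on_secs, boot_secs):
    """Peak number of VMs booting at the same time on one host (toy model)."""
    batches = math.ceil(total_vms / concurrency_limit)
    events = []
    remaining = total_vms
    for k in range(batches):
        size = min(concurrency_limit, remaining)
        remaining -= size
        # A batch starts booting once its power-on tasks have completed.
        boot_start = (k + 1) * power_on_secs
        events.append((boot_start, size))
        events.append((boot_start + boot_secs, -size))
    # At equal timestamps, count finished boots before newly started ones.
    events.sort(key=lambda e: (e[0], e[1]))
    peak = current = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


# 64 VMs to restart, 2 s per power-on batch, 120 s boot time (assumed values).
print(peak_booting_vms(64, 32, power_on_secs=2, boot_secs=120))
print(peak_booting_vms(64, 16, power_on_secs=2, boot_secs=120))
```

Under these assumptions both settings end up with all 64 VMs booting at once, because the power-on task is so much shorter than the boot itself. The limit would only spread the load if a boot took less time than a power-on batch.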

The only way you can really limit the hit on storage, and on the virtual machines sharing this storage, is by enabling Storage IO Control. SIOC will ensure that all VMs that are in need of storage resources get them in a fair manner. The other option is to ensure that you are not overloading your datastores with a massive number of VMs without the IOPS to back up a boot storm. I guess there is no real need to be overly concerned here though… How often does it happen that 50% of your environment fails? If it does, are you worried about that 15-minute performance hit, or about those 50% of the VMs being down?