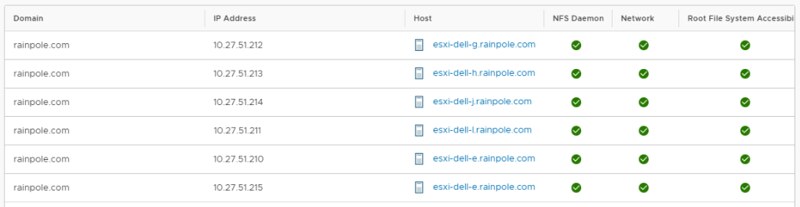

Today I was looking at vSAN File Services a bit more and I had some challenges figuring out the details of the objects associated with a File Share. Somehow I had never noticed this, but fortunately Cormac pointed it out. The Virtual Objects section of the UI lets you filter, and the filter now includes options for objects associated with File Shares and with Persistent Volumes for containers. If you click on the different categories in the top right you will only see those specific objects, which is what the screenshot shows.

Something really simple, but useful to know. I created a quick YouTube video going over it for those who prefer to see it “in action”. Note that at the end of the demo I also show how you can inspect the object using RVC. Although it is not a tool I would recommend for most users, it is interesting to see that RVC does identify the object as “VDFS”.