I had a question last week about the need for DRS rules, also known as VM/Host rules, in an all-flash stretched vSAN infrastructure. A vSAN stretched cluster implements read locality, which ensures that reads always come from the local fault domain.

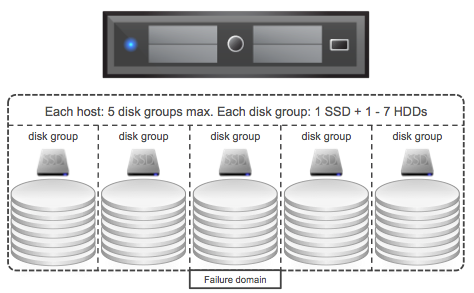

This means that in a stretched environment, reads do not have to traverse the inter-site network. As the maximum supported latency between the data sites is 5 ms (round trip), this avoids a potential performance hit of up to 5 ms per read. An additional benefit is that in a hybrid configuration we avoid having to re-warm the read cache: when a VM moves to the other site, its reads are served by the replica there, whose cache is cold. To avoid that re-warming, we recommend that customers implement VM/Host rules. In a normal, healthy situation, these rules ensure that VMs always run on the same set of hosts. (This is explained on StorageHub.)
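To make the read-locality argument concrete, here is a minimal, simplified model in Python. It is not how vSAN is implemented internally; `Replica`, `pick_read_replica`, `added_read_latency_ms` and the host/site names are hypothetical, and the model assumes the 5 ms inter-site round trip as the worst-case penalty a read pays when it has to cross sites.

```python
from dataclasses import dataclass

# Worst-case inter-site round trip assumed for this toy model (ms).
MAX_INTER_SITE_RTT_MS = 5.0


@dataclass(frozen=True)
class Replica:
    """Hypothetical: one copy of a VM's data, living in one site (fault domain)."""
    host: str
    site: str


def pick_read_replica(replicas: list[Replica], vm_site: str) -> Replica:
    """Read locality: prefer a replica in the same site as the VM."""
    if not replicas:
        raise ValueError("object has no replicas")
    for replica in replicas:
        if replica.site == vm_site:
            return replica
    # Only when no local replica is available do reads cross the link.
    return replicas[0]


def added_read_latency_ms(replica: Replica, vm_site: str) -> float:
    """Worst-case extra latency per read caused by reading from the other site."""
    return 0.0 if replica.site == vm_site else MAX_INTER_SITE_RTT_MS
```

With one replica in each site, a VM in `site-a` reads from `esx-a1` and pays no inter-site penalty. Only when the local replica is missing does each read pay up to the assumed 5 ms.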

What about an all-flash cluster: do you still need to implement these rules? The answer is: it depends. You don’t need them to solve the read cache issue, as an all-flash cluster has no read cache. Could you run without those rules? Yes, you can. But if DRS is enabled, it will freely move VMs between the sites, potentially every 5 minutes. That means vMotion traffic will consume the inter-site links. Considering how resource hungry vMotion can be, you need to ask yourself whether cross-site load balancing adds anything: what is the risk, and what is the reward? Personally, I would prefer to load balance within a site and only go across the link when doing site maintenance, but you may have a different view or set of requirements. If so, it is good to know that vSAN and vSphere support this.
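The preference described above ("load balance within a site, only cross the link when a site is unavailable") can be sketched as a toy balancer honoring a "should" rule. This is not DRS's algorithm; `ShouldRule`, `candidate_hosts`, `pick_target` and the host and VM names are hypothetical, and "least-loaded host" stands in for DRS's far richer placement logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShouldRule:
    """Hypothetical stand-in for a VM/Host "should run on" rule:
    VMs in vm_group prefer the hosts in host_group (one site)."""
    vm_group: frozenset[str]
    host_group: frozenset[str]


def candidate_hosts(vm: str, rules: list[ShouldRule],
                    healthy_hosts: set[str]) -> set[str]:
    """Hosts the toy balancer may place `vm` on."""
    for rule in rules:
        if vm in rule.vm_group:
            preferred = rule.host_group & healthy_hosts
            # A "should" rule is soft: if the preferred site has no healthy
            # hosts (failure or site maintenance), any healthy host is allowed.
            return set(preferred) if preferred else set(healthy_hosts)
    # VMs without a rule may be placed anywhere, including across the link.
    return set(healthy_hosts)


def pick_target(vm: str, load: dict[str, float], rules: list[ShouldRule],
                healthy_hosts: set[str]) -> str:
    """Pick the least-loaded eligible host (toy balancer, not DRS)."""
    candidates = candidate_hosts(vm, rules, healthy_hosts)
    if not candidates:
        raise ValueError("no healthy hosts available")
    # Sorting first makes tie-breaking deterministic.
    return min(sorted(candidates), key=lambda h: load.get(h, 0.0))
```

With `app01` bound to site A, the balancer keeps it on the less loaded site-A host even when a site-B host is idler. It only moves the VM across the link once no site-A host is healthy. A VM without a rule simply goes wherever load is lowest, which is the cross-site vMotion behavior the rules are meant to avoid.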

Over the last 12 months, people have been saying that all-flash and hybrid configurations are getting really close in terms of pricing. During many conversations with customers, it became clear that this is not always the case when they request quotes from server vendors, and I wondered why. I figured I would go through the exercise myself to see how close we actually are, and what exactly we are comparing. I want to end this discussion once and for all, and hopefully convince all of you to get rid of that spinning rust in your VSAN configurations, especially those of you who are now at the point of making your design.

For the last 12 months people have been saying that all-flash and hybrid configurations are getting really close in terms of pricing. During the many conversations I have had with customers it became clear that this is not always the case when they requested quotes from server vendors and I wondered why. I figured I would go through the exercise myself to see how close we actually are and to what we are getting close. I want to end this discussion once and for all, and hopefully convince all of you to get rid of that spinning rust from your VSAN configurations, especially those who are now at the point of making their design.