For those not monitoring the VMware website like a hawk: VMware just released vCenter 5.5 Update 1b. This update contains a couple of fixes that I consider critical, so make sure to upgrade vCenter as quickly as possible:

- Update to OpenSSL library addresses security issues

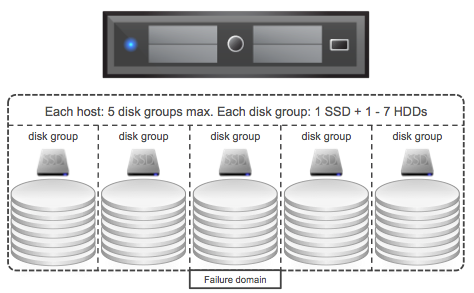

OpenSSL libraries have been updated to versions openssl-0.9.8za, openssl-1.0.0m, and openssl-1.0.1h to address CVE-2014-0224.

- Under certain conditions, Virtual SAN storage providers might not be created automatically after you enable Virtual SAN on a cluster

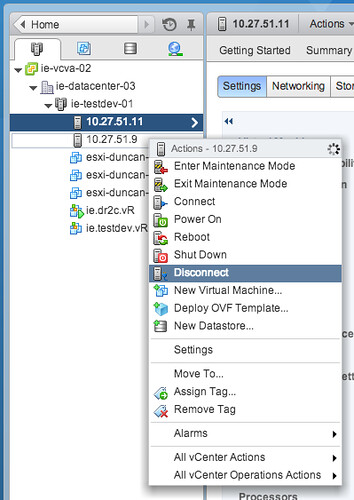

When you enable Virtual SAN on a cluster, Virtual SAN might fail to automatically configure and register storage providers for the hosts in the cluster, even after you perform a resynchronization operation. This issue is resolved in this release. You can view the Virtual SAN storage providers after resynchronization. To resynchronize, click the synchronize icon in the Storage Providers tab.
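If you want to sanity-check other OpenSSL builds in your environment against the patched versions listed for the first fix, a small script can do the comparison. The sketch below is my own illustration, not something from VMware: the `is_patched` helper and the `PATCHED` table are hypothetical names, and it only compares upstream-style version strings such as `1.0.1g`. Distributions that backport fixes keep the old version string, so treat a "not patched" result as a hint to investigate rather than proof.

```python
import re

# Patched release per branch, taken from the versions named in the release notes.
PATCHED = {"0.9.8": "za", "1.0.0": "m", "1.0.1": "h"}


def letter_key(suffix):
    # OpenSSL letter suffixes run a..z and then za, zb, ...
    # Sorting by length first keeps "za" after "z".
    return (len(suffix), suffix)


def is_patched(version):
    """Return True if an upstream-style OpenSSL version string is at or past
    the release that addresses CVE-2014-0224 on its branch (hypothetical helper)."""
    match = re.fullmatch(r"(\d+\.\d+\.\d+)([a-z]*)", version.strip())
    if not match:
        raise ValueError(f"unrecognized version string: {version!r}")
    branch, suffix = match.groups()
    if branch not in PATCHED:
        raise ValueError(f"branch {branch} is not covered by this check")
    return letter_key(suffix) >= letter_key(PATCHED[branch])


if __name__ == "__main__":
    for v in ("0.9.8y", "0.9.8za", "1.0.0l", "1.0.1g", "1.0.1h"):
        print(v, "patched" if is_patched(v) else "NOT patched")
```

Feed it the version part of whatever `openssl version` reports on the machine in question. It says nothing about the libraries bundled inside vCenter itself; for those, upgrading to Update 1b is the fix.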

You can download the bits here.