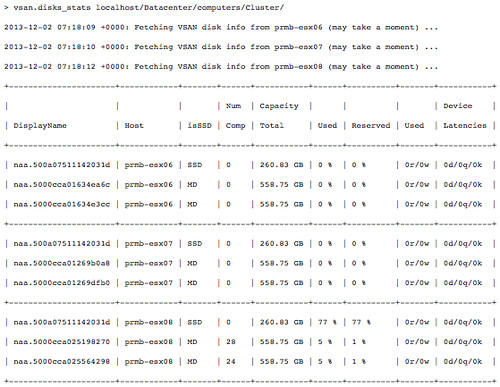

The question that keeps coming up over and over again at VMUG events, on my blog and the various forums is: What happens in a VSAN cluster in the case of an SSD failure? I answered the question in one of my blog posts around failure scenarios a while back, but figured I would write it down in a separate post considering people keep asking for it. It makes it a bit easier to point people to the answer and also makes it a bit easier to find the answer on Google. Let's sketch a situation first: what does (or will) the average VSAN environment look like?

In this case what you are looking at is:

- 4 host cluster

- Each host with 1 disk group

- Each disk group has 1 SSD and 3 HDDs

- Virtual machine running with a “failures to tolerate” of 1

As you hopefully know by now, a VSAN Disk Group can hold up to 7 HDDs and requires an SSD on top of that. The SSD is used as a Read Cache (70%) and a Write Buffer (30%) for the components stored in that disk group. The SSD is literally the first location where IO is stored, as depicted in the diagram above. So what happens when the SSD fails?

When the SSD fails, the whole Disk Group and all of its components will be reported as degraded or absent. The state (degraded vs. absent) depends on the type of failure. Typically, though, when an SSD fails VSAN will recognize this and mark it as degraded, and as such instantly create new copies of your objects (disks, vmx files, etc.), as depicted in the diagram above.
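To put some numbers and behavior to this, here is a minimal Python sketch. It is a toy model, not how VSAN is implemented: the `DiskGroup` class, the "healthy" and "active" labels, the component names and the 400 GB SSD size are all made up for illustration. It only shows the 70/30 split of the SSD and the fact that every component in the disk group follows the state of the SSD.

```python
from dataclasses import dataclass, field

READ_CACHE_PCT = 70    # share of the SSD used as read cache
WRITE_BUFFER_PCT = 30  # share of the SSD used as write buffer


@dataclass
class DiskGroup:
    """Toy model of a disk group: 1 SSD in front of a set of HDDs."""
    ssd_gb: int
    hdd_count: int
    components: list = field(default_factory=list)
    ssd_state: str = "healthy"  # hypothetical label for a working SSD

    def cache_split_gb(self):
        """Return (read cache GB, write buffer GB) for the SSD."""
        return (self.ssd_gb * READ_CACHE_PCT // 100,
                self.ssd_gb * WRITE_BUFFER_PCT // 100)

    def fail_ssd(self, state="degraded"):
        """Mark the SSD as failed; state is 'degraded' or 'absent'."""
        if state not in ("degraded", "absent"):
            raise ValueError("state must be 'degraded' or 'absent'")
        self.ssd_state = state

    def component_states(self):
        """Every component in the group shares the fate of the SSD."""
        state = "active" if self.ssd_state == "healthy" else self.ssd_state
        return {name: state for name in self.components}


# Hypothetical example: a 400 GB SSD in front of 3 HDDs
dg = DiskGroup(ssd_gb=400, hdd_count=3, components=["vm1.vmdk", "vm1.vmx"])
print(dg.cache_split_gb())    # read cache and write buffer sizes in GB
dg.fail_ssd()                 # typical case: marked as degraded
print(dg.component_states())  # all components in the group are impacted
```

The point of the model is simply that the SSD is a single point of failure for its disk group: one failed SSD and every component behind it needs a new copy elsewhere in the cluster.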

From a design perspective it is good to realize the following (for the current release):

- A disk group can only hold 1 SSD

- A disk group can be seen as a failure domain

- For example, there could be a benefit in creating two disk groups of 3 HDDs + 1 SSD each versus a single disk group of 6 HDDs + 1 SSD

- SSD availability is critical, so select a reliable SSD! Yes, some consumer grade SSDs do deliver great performance, but they typically also burn out fast.
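The failure domain argument above comes down to simple arithmetic. The sketch below compares the two layouts from the list for a host with 6 HDDs. The function name and its parameters are hypothetical, and it assumes all disk groups on the host are the same size and that exactly one SSD fails.

```python
def impact_of_one_ssd_failure(disk_groups, hdds_per_group):
    """Return (HDDs taken offline, fraction of the host's HDDs affected)
    when a single SSD fails, assuming equally sized disk groups."""
    total_hdds = disk_groups * hdds_per_group
    hdds_lost = hdds_per_group  # the failed SSD takes its whole group with it
    return hdds_lost, hdds_lost / total_hdds


# One disk group of 6 HDDs + 1 SSD: a failed SSD takes out all 6 HDDs
print(impact_of_one_ssd_failure(disk_groups=1, hdds_per_group=6))

# Two disk groups of 3 HDDs + 1 SSD: a failed SSD takes out only 3 HDDs
print(impact_of_one_ssd_failure(disk_groups=2, hdds_per_group=3))
```

With the same number of HDDs, the second layout halves the amount of data that has to be recreated after an SSD failure, at the cost of an extra SSD per host.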

Let it be clear that if you run with the default storage policies you are protecting yourself against 1 component failure. This means that 1 SSD, 1 host or 1 disk group can fail without loss of data and, as mentioned, VSAN will typically quickly recreate the impacted objects on top of that.

That doesn't mean you should try to save money on reliability, if you ask me. If you are wondering which SSD to select for your VSAN environment, I recommend reading this post by Wade Holmes on the VMware vSphere Blog. Especially take note of the Endurance Requirements section! If I had to give a recommendation though, the Intel S3700 still seems to be the sweet spot when it comes to price / endurance / performance!