This week I received an email from one of my readers about some weird Storage IO Control behavior in their environment. On a regular basis he would receive an error stating that an “external I/O workload has been detected on shared datastore running Storage I/O Control (SIOC) for congestion management”. He did a quick scan of his complete environment and couldn’t find any hosts connecting to those volumes. After exchanging a couple of emails about the environment I managed to figure out what triggered this alert.

Now this all sounds very logical but probably is one of the most common made mistakes… sharing spindles. Some storage platforms carve out a volume from a specific set of spindles. This means that these spindles are solely dedicated to that particular volume. Other storage platforms however group spindles and layer volumes across these. Simply said, they are sharing spindles to increase performance. NetApp’s “aggregates” and HP’s “disk groups” would be a good example.

This can and probably will cause the alarm to be triggered as essentially an unknown workload is impacting your datastore performance. If you are designing your environment from the ground-up, make sure that all spindles that are backing your VMFS volumes have SIOC enabled.

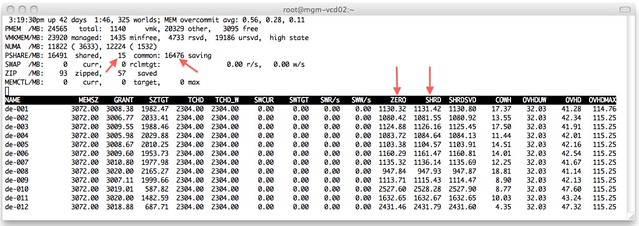

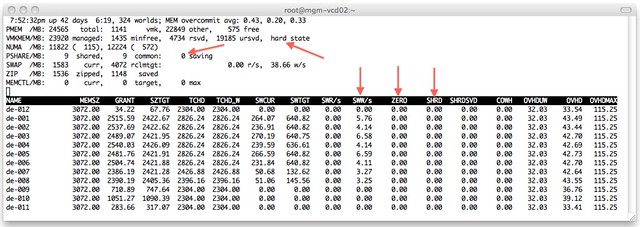

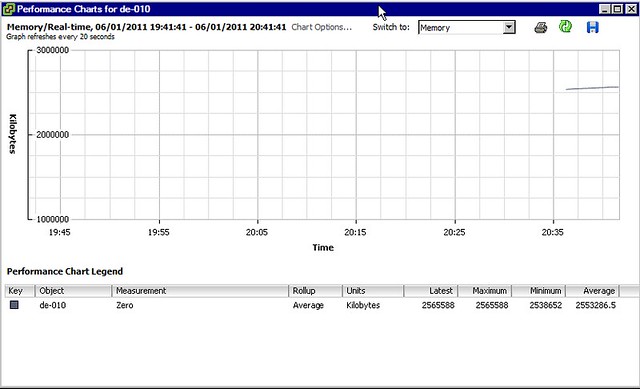

However, in an existing environment this will be difficult, don’t worry that SIOC will be overly conservative and unnecessarily throttle your virtual workload. If and when SIOC detects an external workload it will stop throttling the virtual workload to avoid giving the external more bandwidth while negatively impact the virtual workload. From a throttling perspective that will look as follows:

32 29 28 27 25 24 22 20 (detect nonVI –> Max Qdepth )

32 31 29 28 26 25 (detect nonVI –> Max Qdepth)

32 30 29 27 25 24 (detect nonVI –> Max Qdepth)

…..

Please note that the above example depicts a scenario where SIOC notices that the latency threshold is still exceeded and the cycle will start again, SIOC checks latency values every 4 seconds. The question of course remains how SIOC knows that there is an external workload accessing the datastore. SIOC uses a what we call a “self-learning algorithm”. It keeps track of historical observed latency, outstanding IOs and window sizes. Based on that info it can identify anomalies and that is what triggers the alarm.

To summarize:

- Enable SIOC on all datastores that are backed by the same set of spindles

- If you are designing a green field implementation try to avoid sharing spindles between non VMware and VMware workloads

More details about when this event could be triggered can be found in this KB article.