As some of you might know, I am not only presenting a session at VMworld 2010 but am also a Lab Captain. We have been working really hard over the last couple of months to get the labs up and running for you guys.

The last three days here at VMworld have been chaos: setting up, testing and stress-testing the labs, and of course making some last-minute changes to make sure all of you guys have a great experience.

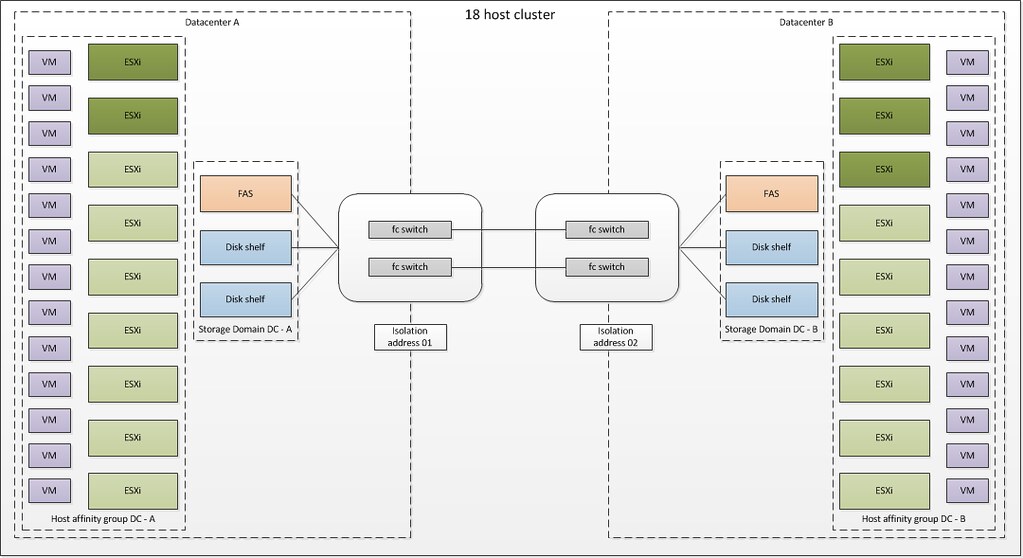

I must say, looking at the lab environment, it has been worth it. We are not talking about a couple of labs here. No, we are talking about 480 seats and roughly 30 different labs, ranging from “VMware ESXi Remote Management Utilities” to “Intro to Zimbra Collaboration Suite”, and even products that will be formally announced tomorrow.

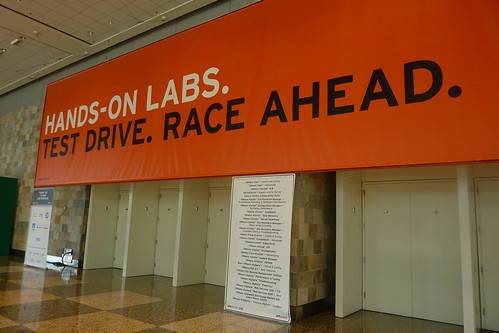

I took a couple of pictures of the labs this morning, just to get you guys as excited as we are.

We all hope you will enjoy the Labs at VMworld 2010, and looking at the content and the setup, I am confident you will! Enjoy,