I’ve had this question twice in about a week, which means that it is time to write a quick post. How do you stop vCLS VMs from running on a vSphere HA Failover Host? For those who don’t know, a vSphere HA Failover Host is a host which is used when a failure has occurred and vSphere HA needs to restart VMs. In some cases customers (but usually Cloud partners) want to prevent the use of these hosts by any workload as it could impact the cost of usage of the platform.

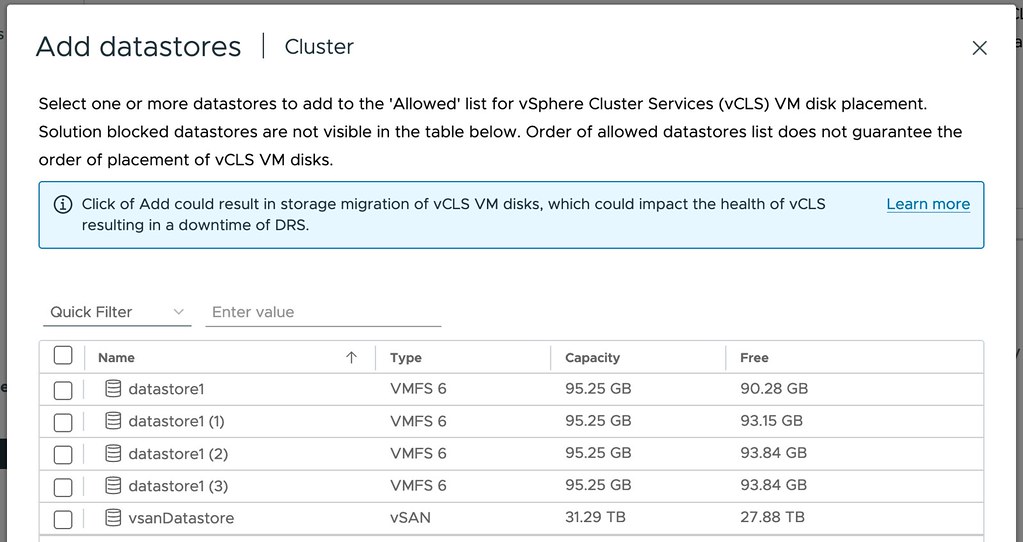

Unfortunately within the UI you cannot specify that vCLS VMs cannot run on specific hosts, you can limit the vCLS VMs from running next to other VMs, but not hosts. There is however an option to specify which datastores the VMs can be stored on, and this is a potential way of limiting which hosts the VMs can run on as well. How? Well if you create a datastore that is not presented to the designated vSphere HA Failover Host then the vCLS VM cannot run on that host as the host cannot access the datastore. It is a workaround for the problem, you can find out more about the datastore placement mechanism for vCLS in this document here. Do note, as stated, those vCLS VMs won’t be able to run on those hosts, so if the rest of the cluster fails and only the Failover Host is left the vCLS VMs will not be powered on. This means that DRS will not function while those VMs are unavailable.

I’ve contacted vSphere HA/vCLS product management to see if we can get this fixed somehow more elegantly in the product, and it is being worked on.

Hello Duncan,

thank you fot post.

What do you think about followings:

1. Cluster Services is started and initial placement of vCLS VMs is done.

2. I will check for “correct placement”, if the vCLS VM is placed on dedicated fail-over host, the host will be set to maintenance mode.

3. The vCLS VM will be moved to another host.

Assumptions:

– Checking for “correct placement” is running on regular basis -> amount of time of incorrect placement is marginal.

– vCLS VMs placement is static and move actions are initiated only (or mostly) by host accessibility.

Regards

miro

yeah the problem is that if you place the host into maintenance mode that indeed the VM will move away, but it may also move back over time. Which means you would need to constantly monitor the placement of the VMs. Sure it is a solution, but not what I personally would prefer.

Thank you

Maybe?:

Create a new datastore, just for the vCLS appliances.

Override vCLS placement to only use this new datastore.

Mount the datastore only on hosts where you are willing to allow vCLS to run.

that is indeed what my post suggested 🙂

I think the control of vCLS placement is at best incomplete, but potentially ill thought out.

In a data centre it would be human nature to power up the servers like reading a book, top left, row by row, down to bottom right, then cabinet by cabinet.

This results in a bias of vCLS placement, that cause them to be clumped together, so that they are in the same room, power zone, cabinet, and even chassis, if blades are being used. This results in plausible situations where all vCLS could be knocked out all together in fairly simple failure scenarios, like a power feed failure.

This means that HA recovery can start up under the original HA agent functionality in ESXi until a vCLS is recovered within the cluster. In vSphere 7, the HA agents are no longer affected by changes in advanced settings for affinity rule behaviours resulting in rules intended to be “MUST” rules being treated as soft rules.

This is can result in VMs running in undesired locations, and in very busy environments with required tight resource allocations this can mean they get stuck there. I.e. there are not enough empty spaces in the sliding squares puzzle for DRS to sort the mess out. Where DRS can sort it out, it means VMs get disrupted more than necessary, rather than if the VM had started in the right place to start with. Which, would of course have required vCLS to be resilient to the initial failure.

To that end I would like to see efforts put into a mechanism that would allow us to define host groups to act like fault domains, and for DRS to ensure that no more than 1 vCLS is running in a domain, unless it can’t do so.

So for example: where a cluster is stretched between two computer rooms on separate power zones. With at least two cabinets of hosts in each room, I would like to define groups for each cabinet, and set a rule that no more than 1 vCLS should be any cabinet group, and groups for each room, and have a rule that says at least one vCLS should be in each room group.

Failing that, I would accept the ability to run an arbitrary number of vCLS, and simply have them run on every host.

Agreed, it is far from perfect. Efforts are under way to solve (some) of these issues. I cannot share them on a public blog, but rest assured that I will share your feedback directly with the HA/DRS product manager.

Awesome, I look forward to seeing how things develop.

I’m following this thread and taking it all in. Some changes are coming and I wish I could share them right now.

I’m the DRS/HA PM Duncan is referencing.

Thank you Mike, keep us posted when you can.

Nice topic, this dedicated host configuration raise a question in my head ! Do service provider need to report CPUcount/licence that are used as a dedicated failover host without any running vm ? And what about VSAN does those host still participate on the vsan cluster capacity ?

Not sure about how it exactly works from a licensing perspective. I think so that all hosts which have VMs running should be licensed, but I am not 100% sure as I don’t do anything with licensing.

Hi Duncan, I wanted to expand on this issue, with regards to the VCSP licensing program with VCF Cores. Im trying to find out (I honestly think it hasnt been considered yet), if the vCLS VM’s running on a host, will be considered to be an active VM by vCloud Usage Meter, and hence the host cores will be billed for the month, even if there is no workload.