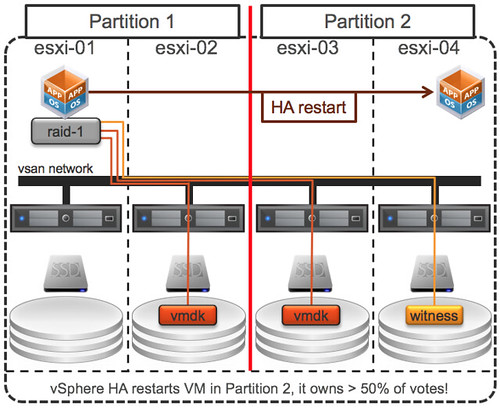

This week I got a question about what happens to VMs when a cluster is partitioned. Funny thing is that with questions like these, it seems like everyone is thinking the same thing at the same time. On the same day I got the question from a customer running traditional storage who had a network failure across racks, and from a customer running Virtual SAN who just wanted to know how this situation is handled. The question boils down to this: what happens to the VM in “Partition 1” when the VM is restarted in Partition 2?

The same can be asked for a traditional environment; the only difference is that you wouldn’t see those “disk groups” at the bottom, but a single datastore instead. In that case a VM can be restarted when its disk lock is lost… So what happens to the VM in Partition 1 that has lost access to its disk? Does the isolation response kick in? If you have vSphere 6.0, VMCP can potentially help: if you have a single datastore and you’ve lost access to it (APD), the APD response can be triggered. But if you don’t have vSphere 6.0, don’t have VMCP configured, or are running VSAN, what would happen?

Well, first of all, this is a partition scenario and not an isolation scenario. On both sides of the partition HA will have a master, and the hosts within each partition will be able to ping each other, so as far as HA is concerned there is absolutely no reason to invoke the “isolation response”. The VM will be restarted in Partition 2 while it keeps running in Partition 1. You will either need to kill it manually in Partition 1, or wait until the partition is lifted. When the partition is lifted, the kernel will realize it no longer holds the lock (as it has lost it to another host) and will kill the impacted VMs instantly.
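To make the partition versus isolation distinction and the lock-based cleanup a bit more concrete, here is a toy model in Python. It is only a sketch under simplifying assumptions: `classify_host`, `Datastore`, `restart_vm` and `resolve_after_heal` are hypothetical names, not vSphere, FDM or VSAN APIs, and real HA also looks at isolation addresses and datastore heartbeats, which this model ignores.

```python
from dataclasses import dataclass, field

# Toy model of the behaviour described above. Every name here is
# hypothetical; this is NOT a vSphere, FDM or VSAN API.


def classify_host(reaches_master: bool, reaches_peers: bool) -> str:
    """Simplified view of how a host's network situation is classified."""
    if reaches_master:
        return "healthy"
    if reaches_peers:
        # Peers are reachable, so a master exists (or gets elected) on this
        # side: this is a partition and the isolation response is not invoked.
        return "partitioned"
    # Nothing reachable at all: only this case is an isolation scenario.
    return "isolated"


@dataclass
class Datastore:
    # vm name -> host currently holding that VM's disk lock
    lock_owner: dict[str, str] = field(default_factory=dict)


def restart_vm(ds: Datastore, vm: str, new_host: str) -> None:
    """HA in Partition 2 restarts the VM, which takes over its lock."""
    ds.lock_owner[vm] = new_host


def resolve_after_heal(ds: Datastore, running: dict[str, list[str]]) -> dict[str, list[str]]:
    """When the partition is lifted, each host running a copy checks the lock.
    Copies on hosts that no longer own the lock are killed; return survivors."""
    return {
        vm: [host for host in hosts if host == ds.lock_owner.get(vm)]
        for vm, hosts in running.items()
    }


# Usage: vm1 ran on esx-01 (Partition 1) and was restarted on esx-03 (Partition 2).
ds = Datastore({"vm1": "esx-01"})
restart_vm(ds, "vm1", "esx-03")
print(classify_host(reaches_master=False, reaches_peers=True))  # partitioned
print(resolve_after_heal(ds, {"vm1": ["esx-01", "esx-03"]}))    # {'vm1': ['esx-03']}
```

Note that nothing in this model terminates the copy in Partition 1 while the partition is still in place; it only disappears in `resolve_after_heal`, which mirrors the manual-kill-or-wait situation described above.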

What happens with the two HA masters in vSphere 6 when the network partition is lifted? Is there a new election process, or will one master, e.g. the one with the highest MoID, keep the master role?

Thanks

As described in the book, the one with the highest identifier will keep the master role 🙂
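A minimal sketch of that rule, assuming each side of the partition has exactly one master and that identifiers are compared as plain strings (the string comparison is an assumption of this sketch). The function names and host identifiers below are hypothetical and only illustrate the "highest identifier keeps the role" behaviour.

```python
def surviving_master(masters: list[str]) -> str:
    """Return the master that keeps its role once the partition is lifted:
    the one with the highest identifier. Assumption for this sketch:
    identifiers are compared as plain strings."""
    if not masters:
        raise ValueError("need at least one master")
    return max(masters)


def demoted_masters(masters: list[str]) -> list[str]:
    """Masters that step down to the slave role after the merge."""
    winner = surviving_master(masters)
    return [m for m in masters if m != winner]


# Usage: one master per side of the partition (hypothetical identifiers).
print(surviving_master(["host-21", "host-35"]))  # host-35
print(demoted_masters(["host-21", "host-35"]))   # ['host-21']
```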

Thanks, I just didn’t know if it was the same with vSphere 6 as with 5.x.

Is there a way to override this before the partition is fixed, or is there logic to prevent the VM that received production traffic (if the other copy received none) from being terminated? From this 1,000-mile overview, it appears that if the cluster got partitioned and the old VM was still receiving traffic, there could be an issue.

Yes, that could indeed happen. If both VMs are running, both could receive network traffic. You will have to terminate one of them manually; there is no other way of doing it right now.