Before we dive into it, let’s spell out the actual name of the feature: “Swap to host cache”. Remember that: swap to host cache!

I’ve seen multiple people mention this feature, and William posted a hack on how to fool vSphere (to be clear, the feature is part of vSphere 5) into thinking it has access to SSD disks when this might not be the case. One thing I noticed is that there seems to be a misunderstanding of what swap to host cache actually is and does, probably because some people call it “swap to SSD”. Yes, ultimately your VM would be swapping to SSD, but it is not just a swap file on SSD; better said, it is NOT a regular virtual machine swap file on SSD.

When I logged in to my environment, the first thing I noticed was that my SSD-backed datastore was not tagged as SSD, so I wanted to tag it. As mentioned, William already described how to do this in his article, and it is well documented in our own documentation as well, so I followed that. This is what I did to get it working:

- Check the NAA ID of the device in the vSphere UI.

- Open an SSH session to the ESXi host.

- Validate which SATP claimed the device:

  `esxcli storage nmp device list`

  In my case this was VMW_SATP_ALUA_CX.

- Verify that the device is currently not recognized as SSD:

  `esxcli storage core device list -d naa.60060160916128003edc4c4e4654e011`

  The output should say “Is SSD: false”.

- Set “Is SSD” to true by adding a claim rule:

  `esxcli storage nmp satp rule add -s VMW_SATP_ALUA_CX --device naa.60060160916128003edc4c4e4654e011 --option=enable_ssd`

- Reload the claim rules and run them:

  `esxcli storage core claimrule load`

  `esxcli storage core claimrule run`

- Validate that it is now set to true:

  `esxcli storage core device list -d naa.60060160916128003edc4c4e4654e011`

  Now the device should be listed as SSD.

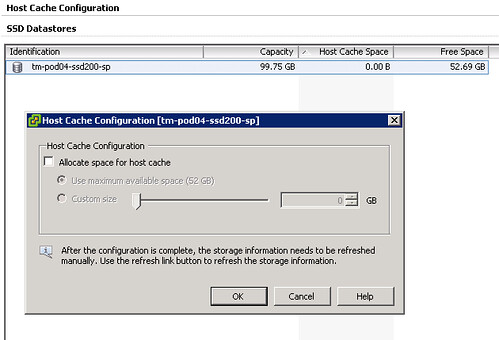
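The steps above can be collected into a small script to run in the ESXi shell. This is a minimal sketch rather than a tested procedure: `DEVICE` and `SATP` are placeholders for your own NAA ID and the SATP reported by `esxcli storage nmp device list`, the `run` and `tag_as_ssd` helper names are made up for illustration, and it includes the `unclaim` step that a commenter below reports was needed before reloading the claim rules. It defaults to a dry run that only prints the commands; set `DRY_RUN=0` to actually execute them.

```sh
#!/bin/sh
# Sketch: tag a device as SSD on ESXi 5.x using an NMP SATP claim rule.
# DEVICE and SATP are placeholders: replace them with your own NAA ID and
# the SATP shown by `esxcli storage nmp device list`.
set -eu

DEVICE="${DEVICE:-naa.60060160916128003edc4c4e4654e011}"
SATP="${SATP:-VMW_SATP_ALUA_CX}"
# Dry run by default: print each command without executing it.
DRY_RUN="${DRY_RUN:-1}"

# Print a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

tag_as_ssd() {
  # Add a claim rule that marks the device as SSD.
  run esxcli storage nmp satp rule add -s "$SATP" -d "$DEVICE" -o enable_ssd
  # Unclaim the device so the new rule is applied when it is reclaimed.
  run esxcli storage core claiming unclaim -t device -d "$DEVICE"
  # Reload the claim rules and run them to reclaim the device.
  run esxcli storage core claimrule load
  run esxcli storage core claimrule run
  # Show the device; look for "Is SSD: true" in the output.
  run esxcli storage core device list -d "$DEVICE"
}

tag_as_ssd
```

A commenter below reports using `VMW_SATP_LOCAL` as the SATP for local SSDs (for example `DRY_RUN=0 SATP=VMW_SATP_LOCAL DEVICE=naa.xxxx sh tag_ssd.sh`, where the file name and NAA ID are placeholders) and needing a reboot before “Is SSD: true” showed up.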

The next step is to enable the feature. When you go to your host and click the “Configuration” tab, there should be a section called “Host Cache Configuration” on the left. When you’ve correctly tagged your SSD, it should look like this:

Please note that I already had a VM running on the device, which is why some of its space shows as in use; normally I would recommend using a drive dedicated to swap. You enable the feature by opening the pop-up window (right-click your datastore and select “Properties”). This is what I did:

- Tick “Allocate space for host cache”

- Select “Custom size”

- Set the size to 25GB

- Click “OK”

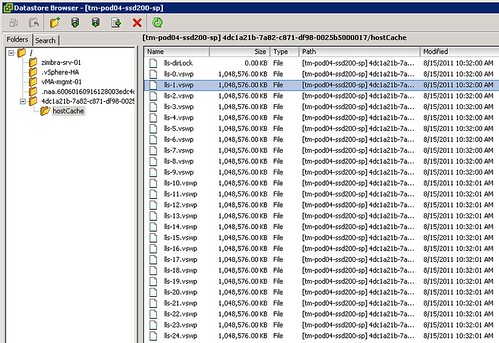

There is no science behind this value; I just wanted to enable the feature and test it. What happened when we enabled it? We allocated space on this LUN, so something must have been done with it. I opened the datastore browser and noticed that a new folder had been created on this VMFS volume:

Not only did it create a folder structure, it also created 25 x 1GB .vswp files. Before we go any further, please note that this is a per-host setting. Each host needs its own Host Cache assigned, so it probably makes more sense to use a local SSD drive instead of a SAN volume. Some of you might ask: what about resiliency? Well, if your host fails, the VMs will need to restart anyway, so that data is no longer relevant; in terms of disk resiliency, though, you should definitely consider a RAID-1 configuration. Generally speaking, SAN volumes are much more expensive than local volumes, and using local volumes also removes the latency caused by the storage network. Compared to the latency of an SSD (less than 100 μs), network latency can be significant. So let’s recap that in a nice design principle:

Basic design principle

Using “Swap to host cache” will severely reduce the performance impact of VMkernel swapping. It is recommended to use a local SSD drive to eliminate any network latency and to optimize for performance.

How does it work? Fairly straightforward, actually. When there is severe memory pressure and the hypervisor needs to swap memory pages to disk, it will swap to the .vswp files on the SSD drive instead. These files (25 in my case) are shared amongst the VMs running on this host. You will probably wonder how to tell whether the host is using the Host Cache; that can simply be validated by looking at the performance statistics in vCenter. There are a couple of new metrics, of which “Swap in from host cache” and “Swap out to host cache” (and their “rate” counterparts) are the most important to monitor. (Yes, esxtop has metrics to monitor it as well, namely LLSWR/s and LLSWW/s.)
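To check those counters over time rather than interactively, esxtop can be run in batch mode, where `esxtop -b -n 1` writes a single sample as CSV (one header line, one value line). The sketch below prints every column whose header contains a given substring. The `esxtop_columns` name is made up for illustration, and the exact header text of the host-cache counters in batch output is not given here, so whatever pattern you pass is an assumption: inspect the header of your own capture first.

```sh
# Sketch: print "header = value" for each esxtop batch-mode CSV column whose
# header contains PATTERN. Assumes one header line followed by one sample
# line (as written by `esxtop -b -n 1`) and that every field is wrapped in
# double quotes and separated by "," with no embedded quote-comma sequences.
# Usage: esxtop_columns PATTERN FILE
esxtop_columns() {
  pattern="$1"
  file="$2"
  awk -F'","' -v pat="$pattern" '
    NR == 1 { for (i = 1; i <= NF; i++) { h[i] = $i }; n = NF; next }
    NR == 2 {
      for (i = 1; i <= n; i++) {
        name = h[i]; val = $i
        # Strip the outer quote left on the first and last field.
        gsub(/^"|"$/, "", name); gsub(/^"|"$/, "", val)
        if (index(name, pat) > 0) print name " = " val
      }
      exit
    }' "$file"
}
```

For example, `esxtop -b -n 1 > /tmp/sample.csv` followed by `esxtop_columns "Swap" /tmp/sample.csv` would list every column with “Swap” in its name, from which you can pick the host-cache ones.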

What if you want to resize your Host Cache while it is already in use? Simply said, the Host Cache is optimized to allow for this scenario. If the Host Cache is completely filled, memory pages will need to be copied to the regular .vswp file. This could mean that the process takes longer than expected, and of course it is not a recommended practice, as it will decrease performance for your VMs: these pages will more than likely need to be swapped in at some point. Resizing, however, can be done on the fly; there is no need to vMotion your VMs away. Just adjust the slider and wait for the process to complete. If you decide to completely remove all host cache for whatever reason, all relevant data will be migrated to the regular .vswp.

What if the Host Cache is full? Normally it shouldn’t even reach that state, but when you run out of space in the host cache, pages will be migrated from the host cache to your regular .vswp file on a first-in, first-out basis, which should be the right policy for most workloads. The chances of having memory pressure to the extent that you fill up a local SSD are small, of course, but it is good to realize what the impact is. If you are going down the path of local SSD drives with Host Cache enabled and will be overcommitting, it might be good to do the math and ensure that you have enough cache available to keep these pages in cache rather than on rotating media. I prefer to keep it simple, though, and would probably recommend matching the size of your host’s memory: for a host with 128GB of RAM, that would be a 128GB SSD. Yes, this might be overkill, but the price difference between 64GB and 128GB is probably negligible.
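The overflow behaviour described above can be illustrated with a toy model. This is a simulation of the policy as described, not VMkernel code: the host cache is a first-in, first-out queue with a fixed capacity in pages, and when it is full the oldest cached page moves to the regular .vswp file to make room for the new one. The `simulate_host_cache` name, the page IDs and the capacities are all made up for illustration.

```sh
# Toy model of "Swap to host cache" overflow (not VMkernel code).
# Usage: simulate_host_cache CAPACITY PAGE...
# Pages are swapped out in argument order. The host cache holds CAPACITY
# pages; when it is full, its oldest page (first in, first out) is migrated
# to the regular .vswp file. Uses global variables to stay POSIX sh.
simulate_host_cache() {
  capacity="$1"
  shift
  cache=""   # cached page IDs, oldest first, space-separated
  count=0
  vswp=""    # page IDs that ended up in the regular .vswp file
  for page in "$@"; do
    if [ "$capacity" -eq 0 ]; then
      # No host cache configured: swap straight to the regular .vswp.
      vswp="${vswp:+$vswp }$page"
      continue
    fi
    if [ "$count" -eq "$capacity" ]; then
      # Cache full: migrate the oldest page to the regular .vswp.
      oldest="${cache%% *}"
      case "$cache" in
        *" "*) cache="${cache#* }" ;;
        *) cache="" ;;
      esac
      vswp="${vswp:+$vswp }$oldest"
      count=$((count - 1))
    fi
    # The newly swapped page always lands in the host cache.
    cache="${cache:+$cache }$page"
    count=$((count + 1))
  done
  echo "host cache: $cache"
  echo "regular .vswp: $vswp"
}
```

For example, `simulate_host_cache 3 p1 p2 p3 p4 p5` leaves p3, p4 and p5 in the cache and moves p1 and p2 to the regular .vswp. The model ignores swap-in, which would remove pages from whichever location holds them.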

Basic design principle

Monitor swap usage. Although “Swap to host cache” will reduce the impact of VMkernel swapping, it will not eliminate it. Take your expected consolidation ratio into account, including your HA (N-X) strategy, and size accordingly. Or keep it simple and just use the same size as physical memory.
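One simple way to do that math for the HA case is to assume that after X of N hosts fail, the surviving N-X hosts share all configured VM memory evenly, and that anything above a host’s physical RAM could end up swapped. This is a deliberately pessimistic sketch (it ignores TPS, ballooning, compression, reservations and memory the VMs never touch); the `worst_case_swap_gb` name and all inputs are hypothetical, to be replaced with your own estimates.

```sh
# Sketch: worst-case swap per host after FAILURES of HOSTS fail (all in GB).
# Assumes configured VM memory is spread evenly over the surviving hosts and
# ignores TPS, ballooning, compression and untouched memory, so it errs high.
# Usage: worst_case_swap_gb TOTAL_VM_MEM_GB HOST_RAM_GB HOSTS FAILURES
worst_case_swap_gb() {
  total_vm_mem="$1"
  host_ram="$2"
  hosts="$3"
  failures="$4"
  survivors=$((hosts - failures))
  if [ "$survivors" -le 0 ]; then
    echo "error: no surviving hosts" >&2
    return 1
  fi
  # Round the per-host share up so the estimate never undershoots.
  per_host=$(( (total_vm_mem + survivors - 1) / survivors ))
  excess=$((per_host - host_ram))
  if [ "$excess" -lt 0 ]; then
    excess=0
  fi
  echo "$excess"
}
```

With 1200GB of configured VM memory on eight 128GB hosts and one host failure, this gives 44GB per surviving host, comfortably within the “same size as physical memory” rule of thumb.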

One interesting use case could be to place all regular swap files on very cheap shared storage (RAID-5 of SATA drives) or even local SATA storage using the “VM swapfile location” (a.k.a. host-local swap) feature, and then install a host cache on any host these VMs can be migrated to. This should give you the performance of an SSD while keeping most of the cost savings of the cheap storage. Please note that the host cache is a per-host feature, so during a vMotion all data from the cache will need to be transferred to the destination host. This will increase the time a vMotion takes; unless your vMotions are time-critical, though, this should not be an issue. I have been told that VMware will publish a KB article with advice on how to buy the right SSDs for this feature.
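To get a feel for that impact, the extra time is roughly the amount of cached data divided by the usable vMotion bandwidth. A back-of-the-envelope sketch follows; the `extra_vmotion_seconds` name is hypothetical, and the efficiency percentage is an assumed figure you supply, not a measured one. Real vMotion behaviour will differ.

```sh
# Sketch: rough extra seconds a vMotion needs to move host-cache data.
# Usage: extra_vmotion_seconds CACHED_GB LINK_GBIT EFFICIENCY_PERCENT
# Assumes 1 GB = 8 Gbit and a constant effective throughput of
# LINK_GBIT * EFFICIENCY_PERCENT / 100 Gbit/s.
extra_vmotion_seconds() {
  cached_gb="$1"
  link_gbit="$2"
  efficiency="$3"
  # seconds = (GB * 8) / (Gbit/s * efficiency / 100), rounded up
  num=$((cached_gb * 8 * 100))
  den=$((link_gbit * efficiency))
  echo $(( (num + den - 1) / den ))
}
```

With 50GB of cached pages on a 10 Gbit/s link at an assumed 80% efficiency that is about 50 seconds; on a 1 Gbit/s link it would be roughly ten times as long.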

To summarize: people have been calling this feature “Swap to SSD”, but that is not what it is. It is a mechanism that caches swapped memory pages on SSD, and it should be referred to as “Swap to host cache”. Depending on how you do the math, all memory pages can be swapped to and from SSD; if there is insufficient space available, memory pages will move over to the regular .vswp file. Use local SSD drives to avoid any latency associated with your storage network and to minimize costs.

A great new benefit of vSphere 5. Host swapping, despite what the Hyper-V guys say, is not pure evil; it’s a safety-relief valve that prevents serious problems.

Eric

Interesting. Good to know.

Are the guts of this feature the same technology that will be used for the forthcoming View caching feature? It seems like the same concept could be used for that: rather than using it for swap, use the local SSD as a read storage cache. I hope it isn’t limited to View and also works with other VDI solutions, but I don’t have my hopes up too high.

Really interesting technology. Thanks for this elaborate write-up!

Just thinking out loud: I don’t see the big point in this feature, as it complicates the design a bit (negligible) and needs some more money for the environment. Wouldn’t it still be “easier”, or even cheaper, not to overload the cluster/hosts? What about clusters with different-sized hosts? Is an SSD per host cheaper than some more RAM or 1-2 fewer VMs per host? What about the increasingly promoted and pushed stateless designs with Auto Deploy etc.? Diskless hosts today, SSD-packed hosts tomorrow? SSD space for Host Cache per host, PLUS SAN space for the regular .vswp files, really? Centralizing today, tomorrow… 😉

On the other hand, I see the benefits of this feature (boot storms, highly consolidated environments, etc.), but also some drawbacks and questions.

As I said, JUST thinking out loud what swooshes through my mind 🙂

Hi Duncan

Really good content on the host cache. Why would I use host cache instead of buying more memory for my host? Quite simple to me: I need to run X VMs, I see VMkernel swapping happening, and I have a limited budget.

I have seen statements at VMware saying that if you compare the cost of 1GB of RAM to 1GB of disk space on an SSD, RAM is 8 to 10 times more expensive.

A colleague at VMware also came up with this reason to use Host Cache:

It’s also very useful during boot storms (VDI or DR scenarios), where a sudden surge in memory pressure that can’t initially be met by TPS, compression, etc. would previously have resulted in a transient spike in VMkernel swapping and poor performance. By offloading this onto SSD, we can improve performance without having to stuff the servers full of physical RAM that will go unused 90% of the time.

To answer both of you: the reason to use it is to have another “safeguard” against performance issues when overcommitting.

For example, where I work we do not use reservations, as that would make our provisioning less dynamic. We try not to overcommit, but when it does happen it is obviously better if the swap is 1,000 times slower rather than 1,000,000 times slower.

Why not simply buy more memory? Because we have sized our hosts with the possible impact of an HA event in mind. We simply don’t want “larger” hosts containing more VMs than they do now.

With this new feature we will most likely just replace the local disks, which are now 146GB 15k SAS disks, with ~50GB SSDs. The hosts have 256GB of RAM, and we seldom see more than a gig or two swapped out, so that should be more than sufficient.

Duncan,

Thanks for the post. I have one question, though. I haven’t played around with swap to host cache in the lab yet. When you have a local SSD dedicated to VM swap files, does the original .vswp file still get created? Basically, this is what I am trying to ask: let’s assume I have swap to host cache configured and a local SSD datastore has been assigned to it. When I power on my VM (2 GB of memory), it will swap to the SSD if need be, but will I still have a 2 GB .vswp file in the VM folder (assuming this VM has no reservation and the swap file is set to be stored in the default location)?

I am asking this to see whether we will be freeing up space on the datastores where the VMs reside, or whether this will be an additional space eater (one that provides better performance, of course).

Thanks,

Bilal

It is called “swap to host cache”… so it swaps pages to a cache, and if needed these pages will flow to the regular .vswp. So these .vswp files will still be created, but you could of course store them on ultra-cheap, slow storage.

Great to see you talk about this. I’ve been trying to get people excited about the potential of this idea for 12 months. Maybe we’re starting to take it more seriously.

Hasn’t this been possible since 3.0, though, albeit on a per-VM basis and not through the GUI, by changing the VM swap file location? Is this just a per-host version of the same thing, or something different?

Did you read the article? 🙂

I have the same question, and I don’t really see where the article addresses our confusion. I see that you made a point of calling it the VMkernel swapfile location (as opposed to the Virtual Machine swapfile location).

Can you compare/contrast this feature with what was previously available in 4.1?

In the 4.1 VIC, under Home > Inventory > Hosts and Clusters, click a host, click the Configuration tab, and under the Software section click Virtual Machine Swapfile Location.

I can already specify this location to be a datastore that uses a local SSD disk/RAID array (for low latency, as opposed to SAN, which would increase latency a bit, as you mentioned).

And of course, I can manually specify on a per-VM level to use a different datastore for swap file storage.

So in both v4.1 and v5.0 I am able to specify a local SSD based datastore as a swapfile location, on a per-host basis. Perhaps in v5.0 it is a little easier/faster to do the assignment?

What’s new here that I’m missing?

Yes, you can specify the location for the .vswp file. But that means the full swap file will be located there. When you have 80 VMs with 4GB each on a host, you need a 320GB SSD at minimum. When using this cache feature, you could use an 80 or 128 GB SSD.

OK, that makes a little more sense… The feature permits just a portion of a VM’s swap file to be present (presumably with the remainder of the swap on the VM’s datastore?)

I wonder why I haven’t seen a succinct summary of this feature yet in that context. It seems a pretty important detail. Thanks Duncan.

FYI, though, for your other readers: a ZFS volume that has SSD log/cache disks (mounted as a datastore via iSCSI or NFS) would accomplish something very close to this functionality on any datastore in v4.1 or any other version of VMware.

Not really,

This feature adds an extra swap location besides your regular swap! This extra location is a cache location for memory pages that are to be swapped to disk. In other words, your regular .vswp file will still be created: if you have 4GB of unreserved memory, a 4GB .vswp will be created in the swap location. Additionally, you can choose to create an extra location for caching swapped pages.
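The difference between relocating whole swap files and adding a host cache can be put into numbers. As stated above, each regular .vswp file is sized to the VM’s unreserved memory (configured memory minus reservation), so placing all swap files on SSD needs the sum of those values, while the host cache only needs whatever size you choose for it. A minimal sketch, where the `vswp_total_gb` name and the `MEM:RESERVATION` argument format are made up for illustration:

```sh
# Sketch: total size of the regular .vswp files on one host, in GB.
# Usage: vswp_total_gb MEM_GB[:RESERVATION_GB] ...
# Each .vswp is taken to be configured memory minus reservation;
# a VM given without ":RESERVATION" is treated as having no reservation.
vswp_total_gb() {
  total=0
  for vm in "$@"; do
    mem="${vm%%:*}"
    case "$vm" in
      *:*) res="${vm#*:}" ;;
      *) res=0 ;;
    esac
    total=$((total + mem - res))
  done
  echo "$total"
}
```

For the example given earlier, 80 VMs with 4GB each and no reservation add up to 320GB of .vswp files, compared with an 80 or 128 GB SSD used as host cache while the .vswp files stay on cheaper storage.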

Thanks for sharing Duncan. Always an excellent source.

Q1. Which use case does not require a vMotion to complete as soon as possible? While the time might not be critical, we still want it done without unnecessary delay, as it impacts VM performance, the DRS schedule, the ESXi hosts involved, etc.

Q2. If all VMs need to be evacuated from a host, this might result in an additional 50-100 GB of data. I thought that since this is technically a cache of the original .vswp file, it would be recreated on the new host. Isn’t it faster to recreate it, since the destination ESXi host needs to write it anyway?

Many thanks from Singapore

e1

Looking into whether this is a way to juggle many VMs on a relatively cheap Z68-based motherboard with only 16GB of RAM (for lab/self-training, not really for production). In other words, using a SandForce SF-2200-based SSD on a decent RAID controller with SATA 3 (6Gbps). Then I can perhaps use this “swap to host cache” capability to ease the pain of having only 16GB of actual RAM, without a huge performance penalty when overcommitment gets bad.

I will need to do some testing; meanwhile, here’s what I have written up so far:

http://tinkertry.com/vsphere5hostcacheconfiguration

http://tinkertry.com/ssdscompared4srt

http://tinkertry.com/vzilla

Sorry for the delayed post, it was caught up in the spam box due to the links.

Yes I do believe that would severely reduce the performance penalty! This could indeed help you to cram the box without the additional memory required.

Creation of my virtualization/backup lab underway:

http://tinkertry.com/vzillaunderconstruction

At the moment, rehearsing iSCSI. That is, figuring out a way to go beyond 2TB for a single VM that needs the capacity (PC backups). Thinking VMware or LeftHand Networks iSCSI appliance, with iSCSI presented to just this one VM that needs it for non-boot partition, we’ll see how it performs before I put eggs in that basket…

I probably should have spelled out that it’s VSA I’m looking at first, aka, “VMware vSphere™ Storage Appliance” from here:

http://www.vmware.com/products/datacenter-virtualization/vsphere/vsphere-storage-appliance/overview.html

Talk about a way to ease the vTAX talk. Right-size the host, use swap to host cache, and vroom!

DCD270 (On twitter) Joe Tietz

So I had no luck on ESXi 5 Update 1 running the command “esxcli storage nmp satp rule add -s VMW_SATP_ALUA_CX --device naa.60060160916128003edc4c4e4654e011 --option=enable_ssd”

I did however get it to work by running it like:

esxcli storage nmp satp rule add -s VMW_SATP_ALUA_CX -d naa.60060160916128003edc4c4e4654e011 -o enable_ssd

Obviously I used my own disk identifier as well. I also changed the -s option to VMW_SATP_LOCAL to reflect my local SSD drives. It also took a reboot to get “Is SSD: true” to show up.

Hello Duncan

First of all, thanks for the great article …

Let me ask your opinion about this feature and some issues I have. But first, let me tell you a bit about my particular environment.

We are a cloud provider with VMware vCloud Director 1.5.1, and I have a 12-host cluster (vCenter 5.0 build 455964) with all hosts on ESXi 5.0.0 build 702118: Cisco B200 M2 blades with two X5680 CPUs each, 256 GB of RAM, no local HD, booting from SAN. All hosts are usually at 50% CPU used and 85% to 90% memory used. Roughly 700 VMs and 100 vOrgs.

That means, among other things, that we use resource pools extensively.

It also means that our storage system is crying for mercy…

On that premise, I would like to analyze the possible relocation of VM swapping and VMkernel swapping, viewing it more from the perspective of relocating IOPS than from the performance perspective.

If we manage to make the investment and get the SSDs necessary to do this, do you think we will see some relief on the storage due to the IOPS (from VMkernel swapping and some VM swapping) being relocated from the SAN storage to the local SSDs?

Is it possible to measure this right now (the swap use, and therefore the IOPS used) before making the investment, in order to reasonably predict the impact of such a change?

Any help is welcome.

Thanks in advance,

and once again, thanks for your deep and interesting articles.

Gustavo.
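For the question above about measuring before investing, one rough approach is to start from the swap-in and swap-out rates that vCenter reports in KBps and assume that every swapped page is a separate 4 KB I/O. That assumption is a pessimistic simplification (real I/O may be larger or coalesced), and the `swap_iops` name is hypothetical; the result is only an estimate of the swap IOPS that could move off the array.

```sh
# Sketch: convert swap-in + swap-out rates (KBps) into approximate IOPS.
# Assumes each 4 KB page is one I/O, which is a pessimistic simplification.
# Usage: swap_iops SWAP_IN_KBPS SWAP_OUT_KBPS
swap_iops() {
  swap_in_kbps="$1"
  swap_out_kbps="$2"
  page_kb=4
  # Round up so a small non-zero rate never reports 0 IOPS.
  echo $(( (swap_in_kbps + swap_out_kbps + page_kb - 1) / page_kb ))
}
```

For example, a host swapping in at 2000 KBps and out at 1000 KBps comes to roughly 750 IOPS; summing such figures over all hosts during a busy period gives a rough idea of the load local SSDs could take off the SAN.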

I have two ESXi 5.1 hosts and have enabled SSD on their local drives. When trying to name the datastore (I would like to keep the same name, such as LocSt_SSD01), I got the message “The name LocSt_SSD01 already exists.” Does this mean that when hosts are in a cluster, they can see each other’s local SSD drives?

Thank you

Hi,

I was not able to reproduce your steps. I think you missed one.

Before reloading the claim rules:

esxcli storage core claiming unclaim -t device -d naa.60060160916128003edc4c4e4654e011

Bye

Gonzalo

Hi folks,

Can someone tell me whether a Storage vMotion is possible between an SSD drive and a SATA drive on vSphere 5.1 GA? It’s an FC SAN, and the Storage vMotion fails at 99%. Any idea how to resolve the issue?