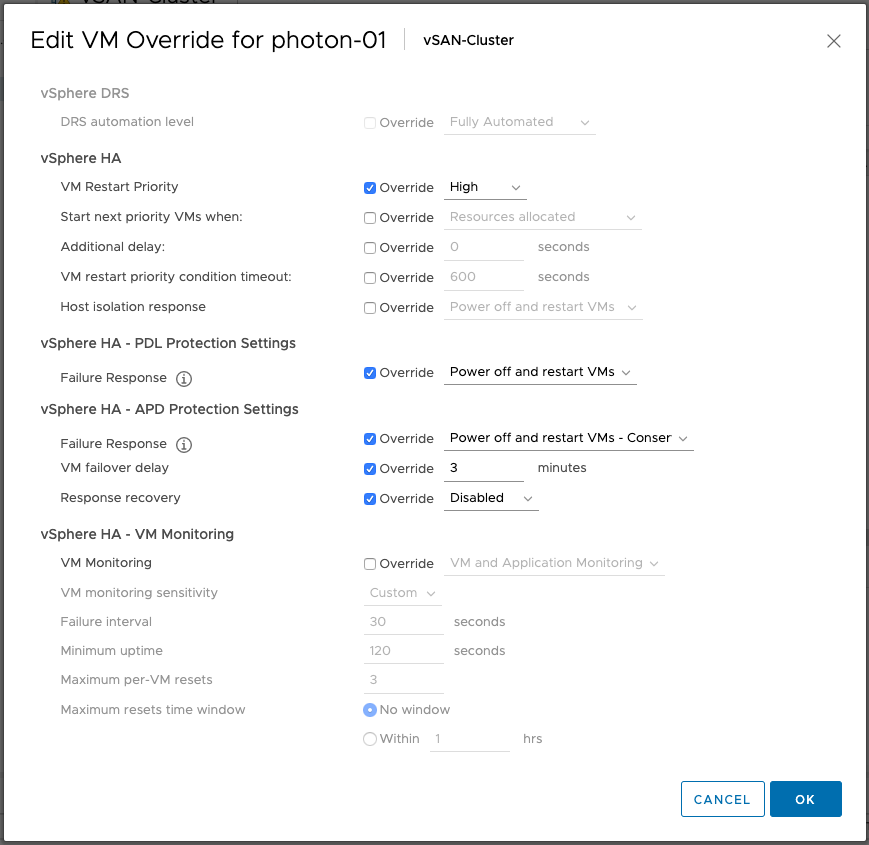

Years ago, various customers complained that VMs which were disabled for HA were not re-registered when the host they were registered on failed. I can understand why you would want those VMs to be re-registered, as it makes it easier to power them on after a host failure. If a VM is not re-registered automatically and the host it was registered on has failed, you have to register the VM manually before you can power it on.

Now, it doesn’t happen too often, but there are also situations where certain VMs are disabled for HA restarts (or powered off) and customers don’t want those VMs to be re-registered, because they are only allowed to run on one particular host. In that case you can simply disable the re-registering of HA disabled VMs through an advanced setting. The advanced setting, and the value to use, is the following:

das.reregisterRestartDisabledVMs - false
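
If you would rather script this than click through the UI, here is a minimal Python sketch of how the option could be pushed to a cluster with pyVmomi. Treat it as an illustration, not the official procedure: the helper names (`HaAdvancedOption`, `reregister_option`, `apply_to_cluster`) are made up for this example, `cluster` is assumed to be a `vim.ClusterComputeResource` you have already looked up through your own vCenter session, and the value is passed as the string `"false"` to match the setting shown above.

```python
from dataclasses import dataclass

# Key taken from the advanced setting described above.
REREGISTER_KEY = "das.reregisterRestartDisabledVMs"


@dataclass(frozen=True)
class HaAdvancedOption:
    """Hypothetical helper type: one HA advanced option as a key/value pair."""
    key: str
    value: str


def reregister_option(enabled: bool) -> HaAdvancedOption:
    """Build the option that controls re-registering of HA disabled VMs."""
    return HaAdvancedOption(REREGISTER_KEY, "true" if enabled else "false")


def apply_to_cluster(cluster, option: HaAdvancedOption):
    """Submit the option as an HA advanced option on an existing cluster.

    Assumption: `cluster` is a vim.ClusterComputeResource obtained from a
    connected pyVmomi session. The import is deferred so that the rest of
    this sketch runs without pyVmomi installed.
    """
    from pyVmomi import vim

    spec = vim.cluster.ConfigSpecEx()
    spec.dasConfig = vim.cluster.DasConfigInfo()
    spec.dasConfig.option = [
        vim.option.OptionValue(key=option.key, value=option.value)
    ]
    # modify=True applies the spec incrementally instead of replacing
    # the whole cluster configuration.
    return cluster.ReconfigureComputeResource_Task(spec, True)


if __name__ == "__main__":
    # Build the option only; no vCenter connection is made here.
    print(reregister_option(False))
```

Calling `apply_to_cluster(cluster, reregister_option(False))` would then submit the reconfiguration task. Keeping the key/value construction separate from the vCenter call means you can check what will be sent before touching a live cluster.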