I’ve seen a few questions around this: is it possible, or supported, to use vSAN Datastore Sharing (aka HCI Mesh) to connect OSA with ESA, or the other way around? I can be brief about this: no, it is not supported, and it isn’t possible either. vSAN HCI Mesh, or Datastore Sharing, uses the vSAN proprietary protocol called RDT. vSAN OSA uses a different version of RDT than vSAN ESA, and unfortunately these versions are not compatible at the moment. As a result, you cannot use vSAN Datastore Sharing to share your OSA capacity with an ESA cluster, or the other way around. I hope that clarifies things.
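To make the rule concrete, here is a minimal Python sketch that models it as a compatibility check. The names `VsanArchitecture` and `can_share_datastore` are hypothetical and not part of any VMware API. The sketch only captures the OSA/ESA restriction described above; it says nothing about the other HCI Mesh prerequisites or about which vSAN release introduced datastore sharing for each architecture.

```python
from enum import Enum


class VsanArchitecture(Enum):
    """The two vSAN storage architectures discussed in this post."""
    OSA = "Original Storage Architecture"
    ESA = "Express Storage Architecture"


def can_share_datastore(server: VsanArchitecture, client: VsanArchitecture) -> bool:
    """Hypothetical model of the OSA/ESA rule only.

    OSA and ESA use different, incompatible versions of RDT, so a remote
    (HCI Mesh) mount can only work when both clusters use the same architecture.
    """
    return server == client


# Print every combination: which cluster serves the datastore, which mounts it
for server in VsanArchitecture:
    for client in VsanArchitecture:
        verdict = "possible" if can_share_datastore(server, client) else "not possible"
        print(f"{server.name} datastore -> {client.name} cluster: {verdict}")
```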

How to stop vCLS VMs from running on a vSphere HA Failover Host?

I’ve had this question twice in about a week, which means that it is time to write a quick post. How do you stop vCLS VMs from running on a vSphere HA Failover Host? For those who don’t know, a vSphere HA Failover Host is a host that is reserved for use when a failure has occurred and vSphere HA needs to restart VMs. Some customers (usually Cloud partners) want to prevent any workload from using these hosts, as it could impact the cost of using the platform.

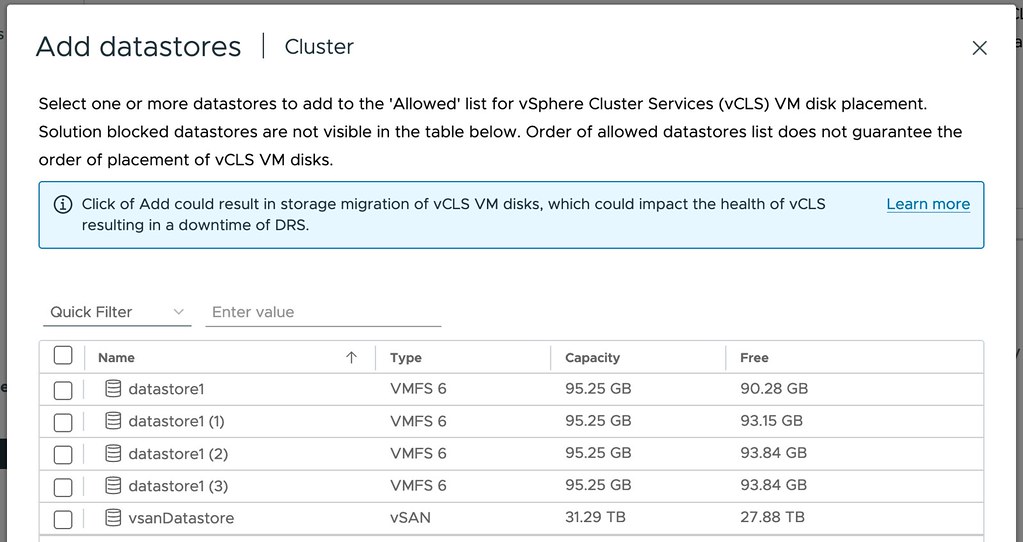

Unfortunately, the UI does not let you specify that vCLS VMs cannot run on specific hosts; you can prevent vCLS VMs from running next to specific other VMs, but not on specific hosts. There is, however, an option to specify which datastores the vCLS VMs can be stored on, and this is a potential way of limiting which hosts the VMs can run on as well. How? If you create a datastore that is not presented to the designated vSphere HA Failover Host, then a vCLS VM stored on that datastore cannot run on that host, as the host cannot access the datastore. It is a workaround for the problem; you can find out more about the datastore placement mechanism for vCLS in this document here.

Do note, as stated, that the vCLS VMs won’t be able to run on the Failover Host, so if the rest of the cluster fails and only the Failover Host is left, the vCLS VMs will not be powered on. This means that DRS will not function while those VMs are unavailable.
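The workaround boils down to a simple eligibility rule: a host can only run a vCLS VM if it is up and can access the datastore the VM is stored on. The Python sketch below models that rule for a hypothetical four-host cluster. `Host`, `vcls_eligible_hosts`, and the datastore names are illustrative assumptions, not vSphere objects or APIs; the real placement decision is made by vCenter.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    datastores: frozenset[str]  # datastores presented to (mounted on) this host
    connected: bool = True      # False models a failed host


def vcls_eligible_hosts(hosts: list[Host], allowed_datastores: set[str]) -> list[str]:
    """Names of hosts that are up and can access at least one vCLS datastore."""
    return [h.name for h in hosts if h.connected and h.datastores & allowed_datastores]


# Hypothetical cluster: esx04-failover is the designated vSphere HA Failover Host
# and is deliberately NOT presented the "ds-vcls" datastore.
hosts = [
    Host("esx01", frozenset({"ds-vcls", "ds-workload"})),
    Host("esx02", frozenset({"ds-vcls", "ds-workload"})),
    Host("esx03", frozenset({"ds-vcls", "ds-workload"})),
    Host("esx04-failover", frozenset({"ds-workload"})),
]
allowed = {"ds-vcls"}  # the datastore selected for vCLS placement

print(vcls_eligible_hosts(hosts, allowed))  # ['esx01', 'esx02', 'esx03']

# The caveat: if every other host fails, no eligible host remains,
# so the vCLS VMs stay powered off and DRS does not function.
for h in hosts[:3]:
    h.connected = False
print(vcls_eligible_hosts(hosts, allowed))  # []
```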

I’ve contacted vSphere HA/vCLS product management to see if this can be solved more elegantly in the product, and it is being worked on.

Unexplored Territory Podcast Episode 067 – Introducing Rubrik!

vSAN ESA and the minimum number of hosts with RAID-1/5/6

I had a meeting last week with a customer, and a question came up about the minimum number of hosts a cluster requires in order to use a particular RAID configuration for vSAN. I created a table for the customer, plus a quick paragraph on how this works, and figured I would share it here as well.

With vSAN ESA, VMware introduced a new feature called “Adaptive RAID-5”. I described this feature in this blog post here. In short, depending on the size of the cluster, a RAID-5 configuration will be either a 2+1 scheme or a 4+1 scheme; there is no longer a 3+1 scheme with vSAN ESA. Of course, you can still use RAID-1 and RAID-6 as well, and those schemes remain unchanged.
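A minimal sketch of the Adaptive RAID-5 selection, assuming thresholds that match the RAID-5 minimum host counts in this post: 2+1 for clusters of three to five hosts, 4+1 for six hosts or more. `adaptive_raid5_scheme` is a hypothetical helper for illustration only; vSAN makes this decision itself.

```python
def adaptive_raid5_scheme(host_count: int) -> tuple[int, int]:
    """Return (data, parity) fragments for vSAN ESA Adaptive RAID-5.

    Assumption: 3-5 hosts -> 2+1, 6 or more hosts -> 4+1.
    """
    if host_count < 3:
        raise ValueError("RAID-5 on vSAN ESA needs at least 3 hosts")
    return (4, 1) if host_count >= 6 else (2, 1)


for n in (3, 5, 6, 8):
    data, parity = adaptive_raid5_scheme(n)
    print(f"{n} hosts -> RAID-5 {data}+{parity}")
```

Running the loop shows why a cluster growing from five to six hosts (or shrinking back) can change the RAID-5 scheme of its objects.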

For vSAN ESA, the table below lists the number of hosts required for each RAID scheme. Do note that with RAID-5, if the size of the cluster changes (higher or lower), the scheme may also change, as described in the linked article above.

| Failures To Tolerate | Object Configuration | Minimum number of hosts | Capacity required (% of VM size) |
|---|---|---|---|
| No data redundancy | RAID-0 | 1 | 100% |
| 1 Failure (Mirroring) | RAID-1 | 3 | 200% |
| 1 Failure (Erasure Coding) | RAID-5, 2+1 | 3 | 150% |
| 1 Failure (Erasure Coding) | RAID-5, 4+1 | 6 | 125% |
| 2 Failures (Erasure Coding) | RAID-6, 4+2 | 6 | 150% |
| 2 Failures (Mirroring) | RAID-1 | 5 | 300% |
| 3 Failures (Mirroring) | RAID-1 | 7 | 400% |
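The capacity column follows from a simple ratio: an object stored as k data fragments plus m redundancy fragments consumes (k + m) / k times the VM size, where mirroring counts as one data copy plus one extra copy per failure to tolerate. The Python sketch below recomputes that column for a hypothetical 100 GB VM and checks that the mirroring rows follow the 2n+1 host rule for tolerating n failures. The host counts are copied from the table rather than derived, because the six-host minimum for RAID-5 4+1 comes from the Adaptive RAID-5 threshold, not from the fragment count.

```python
from fractions import Fraction

# (label, data fragments, redundancy fragments, minimum hosts), copied from the table.
# Mirroring is modelled as 1 data copy plus n extra copies.
POLICIES = [
    ("No data redundancy, RAID-0", 1, 0, 1),
    ("FTT=1, RAID-1", 1, 1, 3),
    ("FTT=1, RAID-5 2+1", 2, 1, 3),
    ("FTT=1, RAID-5 4+1", 4, 1, 6),
    ("FTT=2, RAID-6 4+2", 4, 2, 6),
    ("FTT=2, RAID-1", 1, 2, 5),
    ("FTT=3, RAID-1", 1, 3, 7),
]


def capacity_factor(data: int, redundancy: int) -> Fraction:
    """Raw capacity consumed per unit of VM data: (data + redundancy) / data."""
    return Fraction(data + redundancy, data)


# Mirroring rows (one data copy) must satisfy min hosts = 2n + 1
for label, data, redundancy, min_hosts in POLICIES:
    if data == 1:
        assert min_hosts == 2 * redundancy + 1, label

vm_size_gb = 100  # hypothetical VM size
for label, data, redundancy, min_hosts in POLICIES:
    factor = capacity_factor(data, redundancy)
    raw_gb = float(vm_size_gb * factor)
    print(f"{label:28} min hosts {min_hosts}  {float(factor):.0%}  {raw_gb:.0f} GB raw")
```

Using `Fraction` keeps ratios such as 5/4 exact, so the percentages match the table without rounding surprises.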

Unexplored Territory Episode 065 – Jatin Purohit discussing Oracle Cloud VMware Solution

For episode 065 of the Unexplored Territory Podcast, I invited Jatin Purohit to discuss Oracle Cloud VMware Solution and what has been introduced since we had Richard Garsthagen on as a guest. Jatin went over all the details and shared some great use cases with us. Make sure to listen to the episode via your favorite podcast app or the embedded player below. We are now starting to plan the upcoming episodes; if you want to be a guest and have an interesting story or solution to share, do not hesitate to reach out.