This is one of those questions that comes up every now and then, I have written about this before, but it never hurts to repeat some of it. The comment I got was around rebuild time of failed drives in VSAN, surely it takes longer than with a “legacy” storage system. The answer of course is: it depends (on many factors).

But what does it depend on? Well it depends on what exactly we are talking about, but in general I think the following applies:

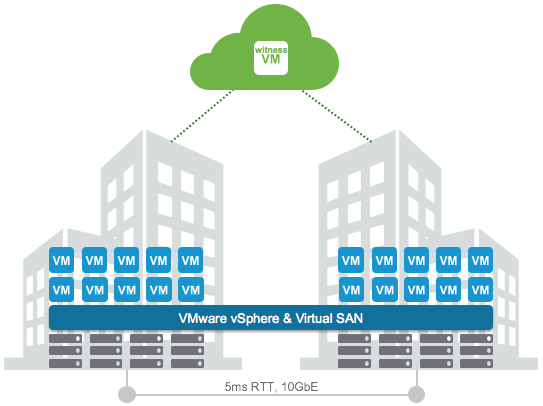

With VSAN components (copies of objects, in other words copies of data) are placed across multiple hosts, multiple diskgroups and multiple disks. Basically if you have a cluster of lets say 8 hosts with 7 disks each and you have 200 VMs then the data of those 200 VMs will be spread across 8 hosts and 56 disks in total. If one of those 56 disks happens to fail then the data that was stored on that disk would need to be reprotected. That data is coming from the other 7 hosts which is potentially 49 disks in total. You may ask, why not 55 disks? Well because replica copies are never stored on the same hosts for resiliency purposes, look at the diagram below where a single object is split in to 2 data components and a witness, they are all located on different hosts!

We do not “mirror” disks, we mirror the data itself, and the data can and will be place anywhere. This means that when a failure has occurred of a disk within a diskgroup on a host all remaining disk groups / disk / hosts will be helping to rebuild the impacted data, which is 49 disks potentially. Note that not only will disks and hosts containing impacted objects help rebuilding the data, all 8 hosts and 55 disks will be able to receive the replica data!

Now compare this to a RAID set with a spare disk. In the case of a spare disk you have 1 disk which is receiving all the data that is being rebuild. That single disk can only take an X number of IOPS. Lets say it is a really fast disk and it can take 200 IOPS. Compare that to VSAN… Lets say you used really slow disks which only do 75 IOPS… Still that is (potentially) 49 disks x 75 IOPS for reads and 55 disks for writes.

That is the major difference, we don’t have a single drive as a designated hot spare (or should I say bottleneck?), we have the whole cluster as a hot spare! As such rebuild times when using similar drives should always be faster with VSAN compared to traditional storage.