vSphere 5.5 was just announced and of course there are a bunch of new features in there. One of the features which I think people will appreciate is vSphere Flash Read Cache (vFRC), formerly known as vFlash. vFlash was tech previewed last year at VMworld and I recall it being a very popular session. In the last 6-12 months host local caching solutions have definitely become more popular: SSD prices keep dropping, so investing in local SSDs to offload IO gets more and more interesting. Before anyone asks, I am not going to do a comparison with any of the other host local caching solutions out there. I don’t think I am the right person for that as I am obviously biased.

As stated, vSphere Flash Read Cache is a brand new feature which is part of vSphere 5.5. It allows you to leverage host local SSDs and turn them into a caching layer for your virtual machines. The biggest benefit of using host local SSDs of course is the offload of IO from the SAN to the local SSD. Every read IO that doesn’t need to go to your storage system means resources can be used for other things, like for instance write IO. That is probably the one caveat I will need to call out: it is “write through” caching only at this point, so essentially a read cache. Now, by offloading reads, it could potentially help improve write performance… This is not a given, but could be a nice side effect.
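To make “write through” a bit more concrete, here is a minimal Python sketch of the general idea. It is a toy model, not how vFRC is implemented: the class name, the dict standing in for the SAN, the LRU eviction and the choice to refresh the cache on writes are all my own assumptions for illustration.

```python
from collections import OrderedDict


class WriteThroughReadCache:
    """Toy write-through cache in front of a slower backing store (hypothetical model)."""

    def __init__(self, backing_store, capacity_blocks):
        self.backing_store = backing_store    # a dict standing in for the SAN
        self.capacity_blocks = capacity_blocks
        self.cache = OrderedDict()            # block id -> data, oldest entry first
        self.backend_reads = 0
        self.backend_writes = 0

    def _remember(self, block, data):
        # Insert or refresh a block, then evict the least recently used one if full.
        self.cache[block] = data
        self.cache.move_to_end(block)
        if len(self.cache) > self.capacity_blocks:
            self.cache.popitem(last=False)

    def read(self, block):
        if block in self.cache:
            # Cache hit: served locally, the backing store is not touched.
            self.cache.move_to_end(block)
            return self.cache[block]
        # Cache miss: fetch from the backing store and keep a copy.
        self.backend_reads += 1
        data = self.backing_store[block]
        self._remember(block, data)
        return data

    def write(self, block, data):
        # Write-through: every write always goes to the backing store.
        self.backend_writes += 1
        self.backing_store[block] = data
        self._remember(block, data)


san = {0: b"a", 1: b"b"}
cache = WriteThroughReadCache(san, capacity_blocks=2)
cache.read(0)
cache.read(0)          # second read is a hit
cache.write(1, b"c")   # still lands on the backing store
print(cache.backend_reads, cache.backend_writes)  # prints: 1 1
```

The point to take away is that only repeated reads are absorbed by the cache; every write still costs a trip to the storage system.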

Just a couple of things before we get into configuring it. vFlash aggregates local flash devices into a pool; this pool is referred to as a “virtual flash resource” in our documentation. So in other words, if you have 4 x 200 GB SSDs you end up with an 800 GB virtual flash resource. This virtual flash resource has a filesystem sitting on top of it called “VFFS”, aka “Virtual Flash File System”. As far as I know it is a heavily flash-optimized version of VMFS, but don’t pin me down on this one as I haven’t broken it down yet.

So now that we know what it is and does, how do you install it, and what are the requirements and limitations? Well, let’s start with the requirements and limitations first.

Requirements and limitations:

- vSphere 5.5 (both ESXi and vCenter)

- SSD Drive / Flash PCIe card

- Maximum of 8 SSDs per VFFS

- Maximum of 4TB physical Flash-based device size

- Maximum of 32TB virtual Flash resource total size (8x4TB)

- Cumulative 2TB VMDK read cache limit

- Maximum of 400GB of virtual Flash Read Cache per Virtual Machine Disk (VMDK) file
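If you want to sanity check a design against these numbers, a small script can help. The sketch below is plain arithmetic on the limits listed above; the function name and the constants are mine, I am assuming binary units (1 TB = 1024 GB), I am reading the 2 TB cumulative limit as applying per host, and the final "reservations fit in the pool" check is my own addition rather than a documented limit.

```python
GB_PER_TB = 1024  # assumption: binary units, the list above does not say

MAX_DEVICES_PER_VFFS = 8
MAX_DEVICE_SIZE_GB = 4 * GB_PER_TB
MAX_RESOURCE_SIZE_GB = 32 * GB_PER_TB
MAX_CACHE_PER_VMDK_GB = 400
MAX_CUMULATIVE_CACHE_GB = 2 * GB_PER_TB  # assumption: interpreted as a per-host limit


def check_host_plan(device_sizes_gb, vmdk_cache_sizes_gb):
    """Return (pool size in GB, list of limit violations) for one host."""
    problems = []
    pool_gb = sum(device_sizes_gb)        # devices are aggregated into one pool
    cache_gb = sum(vmdk_cache_sizes_gb)   # all per-VMDK reservations on this host

    if len(device_sizes_gb) > MAX_DEVICES_PER_VFFS:
        problems.append(f"{len(device_sizes_gb)} devices, limit is {MAX_DEVICES_PER_VFFS}")
    for size in device_sizes_gb:
        if size > MAX_DEVICE_SIZE_GB:
            problems.append(f"device of {size} GB, limit is {MAX_DEVICE_SIZE_GB} GB")
    if pool_gb > MAX_RESOURCE_SIZE_GB:
        problems.append(f"pool of {pool_gb} GB, limit is {MAX_RESOURCE_SIZE_GB} GB")
    for size in vmdk_cache_sizes_gb:
        if size > MAX_CACHE_PER_VMDK_GB:
            problems.append(f"VMDK cache of {size} GB, limit is {MAX_CACHE_PER_VMDK_GB} GB")
    if cache_gb > MAX_CUMULATIVE_CACHE_GB:
        problems.append(f"{cache_gb} GB of cache in total, limit is {MAX_CUMULATIVE_CACHE_GB} GB")
    if cache_gb > pool_gb:
        # Not from the list above: simply a check that the reservations fit.
        problems.append(f"{cache_gb} GB reserved but the pool is only {pool_gb} GB")
    return pool_gb, problems


pool, problems = check_host_plan([200] * 4, [100, 400, 500])
print(pool)  # 800
for problem in problems:
    print(problem)
```

With 4 x 200 GB SSDs this reports the 800 GB pool and flags both the 500 GB reservation and the fact that 1000 GB of reservations will not fit in it.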

So now that we know the requirements, how do you enable / configure it? Well, as with most vSphere features these days, the setup is fairly straightforward and simple. Here we go:

- Open the vSphere Web Client

- Go to your Host object

- Go to “Manage” and then “Settings”

- All the way at the bottom you should see “Flash Read Cache Resource Management”

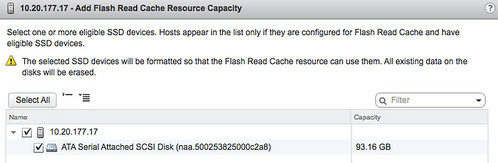

- Click “Add Capacity”

- Select the appropriate SSD and click OK

- Now you have a cache created; repeat for the other hosts in your cluster. Below is what your screen will look like after you have added the SSD.

Now you will see another option below “Flash Read Cache Resource Management” called “Cache Configuration”. This is for the “Swap to host cache” / “Swap to SSD” functionality that was introduced with vSphere 5.0.

Now that you have enabled vFlash on your host, what is next? Well, you enable it on your virtual machine. Yes, I agree it would have been nice to enable it for a full cluster or for a datastore as well, but unfortunately this is not part of the 5.5 release. It is something that will be added at some point in the future though. Anyway, here is how you enable it on a virtual machine:

- Right click the virtual machine and select “Edit Settings”

- Expand the hard disk you want to accelerate

- Go to “Flash Read Cache” and enter the cache size you want to use, in GB

- Note there is an advanced option in this section, where you can also select the block size

- The block size could be important when you want to optimize for a particular application
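One simple way to think about the block size is to look at how many cache blocks a given reservation is carved into. The snippet below is just arithmetic; the function name and the example block sizes are mine and are not necessarily the values the Web Client offers. Which block size suits a particular application is something you would need to test.

```python
def cache_block_count(cache_size_gb, block_size_kb):
    """Number of whole cache blocks that fit in a reservation (binary units assumed)."""
    if cache_size_gb <= 0 or block_size_kb <= 0:
        raise ValueError("sizes must be positive")
    cache_size_kb = cache_size_gb * 1024 * 1024
    return cache_size_kb // block_size_kb


# Example values only: a 10 GB reservation at three hypothetical block sizes.
for block_size_kb in (4, 8, 64):
    print(block_size_kb, cache_block_count(10, block_size_kb))
```

A smaller block size gives you more, finer-grained blocks for the same reservation, while a larger one gives you fewer, bigger blocks.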

Not too complex, right? You enable it on your host and then on a per virtual machine level, and that is it… From a licensing perspective it is included with Enterprise Plus, so those who are at the right licensing level get it “for free”.