I haven’t spent a lot of time looking at VMFS lately. I was looking into what is new in vSphere 6.5 and noticed a VMFS section. It is good to see that new features and functionality are still being developed for the core vSphere file system. So what is new with VMFS 6:

- Support for 4K Native Drives in 512e mode

- SE Sparse Default

- Automatic Space Reclamation

- Support for 512 devices and 2000 paths (versus 256 and 1024 in the previous versions)

- CBRC aka View Storage Accelerator

Let’s look at them one by one. I think support for 4K native drives in 512e mode speaks for itself. Drive capacities keep growing, and these new “advanced format” drives come with a 4K sector instead of the usual 512-byte sector, primarily for better handling of media errors. As of vSphere 6.5 these drives are supported, but note that for now they are only supported when running in 512e mode! The same applies to Virtual SAN in the 6.5 release: only 512e mode is supported. This basically means that 512-byte sectors are emulated on a 4K drive. Hopefully we will have more on full support for 4Kn for vSphere/VSAN soon.
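
To make the emulation a bit more concrete, here is a minimal sketch of how 512-byte logical sectors map onto 4K physical sectors. The function names are my own and purely illustrative; this is not how ESXi or any drive firmware implements it, just the arithmetic behind 512e.

```python
LOGICAL_SECTOR = 512    # sector size a 512e drive presents to the host
PHYSICAL_SECTOR = 4096  # sector size actually used on the media

# Number of emulated 512-byte sectors that share one physical sector.
SECTORS_PER_PHYSICAL = PHYSICAL_SECTOR // LOGICAL_SECTOR


def physical_sector(lba: int) -> int:
    """Return the index of the 4K physical sector holding logical block `lba`."""
    return lba // SECTORS_PER_PHYSICAL


def is_aligned(start_lba: int, sector_count: int) -> bool:
    """True if an I/O both starts and ends on a 4K physical sector boundary."""
    return (start_lba % SECTORS_PER_PHYSICAL == 0
            and sector_count % SECTORS_PER_PHYSICAL == 0)


if __name__ == "__main__":
    print(SECTORS_PER_PHYSICAL)   # 8 logical sectors per physical sector
    print(physical_sector(7))     # still inside the first physical sector
    print(physical_sector(8))     # first logical block of the second one
    print(is_aligned(0, 8))       # covers exactly one physical sector
    print(is_aligned(1, 8))       # straddles two physical sectors
```

An I/O that is not aligned touches only part of a physical sector, which on a 512e drive generally means the drive has to read, modify, and rewrite the full 4K sector. That is the usual reason alignment matters with these drives.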

As for SE Sparse: right now it is used primarily for View and for virtual disks larger than 2TB. On VMFS 6, SE Sparse is the default. Not much more to it than that. If you want to know more about SE Sparse, read this great post by Cormac.

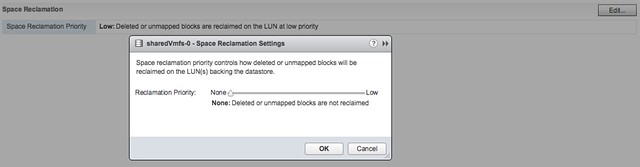

Automatic Space Reclamation is something that I know many of my customers have been waiting for. Note that this is based on VAAI Unmap, which has been around for a while and allows you to unmap previously used blocks. In other words, storage capacity is reclaimed and released to the array so that other volumes can use these blocks when needed. In the past you needed to run a command to reclaim the blocks; now this has been integrated in the UI and can simply be turned on or off. You can find it in the UI by going to your datastore object and clicking Configure. There you can set it to “none”, which disables it, or to “low”, as shown in the screenshot below.

If you prefer “esxcli”, you can run the following to get the reclaim configuration of a particular datastore (sharedVmfs-0 in my case):

    esxcli storage vmfs reclaim config get -l sharedVmfs-0
       Reclaim Granularity: 1048576 Bytes
       Reclaim Priority: low

Or set the datastore to a particular level. Note that with esxcli you can also set the priority to medium or high if desired:

    esxcli storage vmfs reclaim config set -l sharedVmfs-0 -p high
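
If you want to collect these settings for reporting, the output of the “get” command is simple “Key: Value” text. Below is a rough sketch of how you could parse it. It assumes the output looks exactly like what I pasted above, and the helper names (`parse_reclaim_config`, `granularity_bytes`) are made up for this example. It does not call esxcli itself; it only works on text you have already captured.

```python
def parse_reclaim_config(output: str) -> dict:
    """Parse 'Key: Value' lines from captured esxcli output into a dict."""
    config = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # skip blank lines and anything without a colon
            config[key.strip()] = value.strip()
    return config


def granularity_bytes(config: dict) -> int:
    """Return the reclaim granularity as an integer number of bytes."""
    # The value is assumed to look like "1048576 Bytes"; take the number.
    return int(config["Reclaim Granularity"].split()[0])


# Sample text copied from the output shown in this post.
SAMPLE = """\
   Reclaim Granularity: 1048576 Bytes
   Reclaim Priority: low
"""

if __name__ == "__main__":
    cfg = parse_reclaim_config(SAMPLE)
    print(cfg["Reclaim Priority"])
    print(granularity_bytes(cfg) // (1024 * 1024), "MiB")
```

For my datastore the granularity of 1048576 bytes works out to exactly 1 MiB.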

Next up, support for 512 devices and 2000 paths. In previous versions the limit was 256 devices and 1024 paths, and some customers were hitting this limit in their clusters. Especially when RDMs are used, when people have a limited number of VMs per datastore, or when 8 paths to each device are used, it becomes easy to hit those limits. Hopefully with 6.5 that will not happen anytime soon. On the other hand, personally I would hope more and more people are considering moving towards either VSAN or Virtual Volumes.
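
To show why 8 paths per device makes the limits easy to hit, here is a quick back-of-the-envelope calculation. The function is just an illustration I wrote for this post. It assumes every device has the same number of paths, which will not be true in every environment.

```python
def max_devices(device_limit: int, path_limit: int, paths_per_device: int) -> int:
    """Devices a host can address before hitting the device or path limit."""
    if paths_per_device < 1:
        raise ValueError("paths_per_device must be at least 1")
    # Whichever limit is reached first determines the usable device count.
    return min(device_limit, path_limit // paths_per_device)


if __name__ == "__main__":
    for paths in (2, 4, 8):
        old = max_devices(256, 1024, paths)   # limits before vSphere 6.5
        new = max_devices(512, 2000, paths)   # limits in vSphere 6.5
        print(f"{paths} paths per device: {old} devices before, {new} with 6.5")
```

With 8 paths per device the path limit is reached first in both cases: 1024 / 8 = 128 devices before, and 2000 / 8 = 250 devices with 6.5, well below the device limits of 256 and 512.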

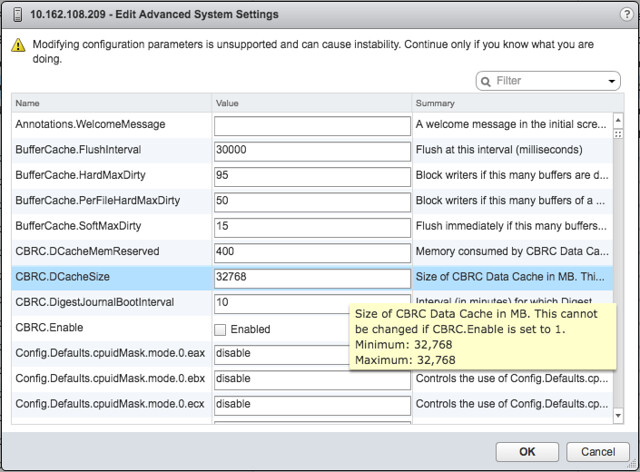

This last one I accidentally ran into. It is not really directly related to VMFS, but I figured I would add it here anyway, otherwise I would forget about it. In the past CBRC, aka View Storage Accelerator, was limited to 2GB of memory cache per host. I noticed in the advanced settings that the limit is now 32GB, which is a big difference compared to the 2GB in previous releases. I haven’t done any testing, but I assume our EUC team has, and hopefully we will see some good performance data on this big increase soon.

And that was it… some great enhancements in the core storage space if you ask me. I am sure there is even more, and if I find out more details I will share those with you as well.