This seems to be becoming a true series, introducing startups… Now in the case of SolidFire I am not really sure if I should use the word startup, as they have been around since 2010. But then again, it is not a consumer solution that they’ve created, and enterprise storage platforms typically take a lot longer to develop and mature. SolidFire was founded in 2010 by Dave Wright, who discovered a gap in the storage market when he was working for Rackspace. The opportunity Dave saw was in the Quality of Service area. Not many storage solutions out there could provide predictable performance in almost every scenario while also being designed for multi-tenancy and offering a rich API. Back then the term Software Defined Storage hadn’t been coined yet, but I guess it is fair to say that is how we would describe it today. This is actually how I got in touch with SolidFire. I wrote various articles on the topic of Software Defined Storage, and tweeted about this topic many times, and SolidFire was one of the companies that consistently joined the conversation. So what is SolidFire about?

SolidFire is a storage company. They sell storage systems, and today they offer two models, namely the SF3010 and the SF6010. What is the difference between these two? Cache and capacity! With the SF3010 you get 72GB of cache per node and it uses 300GB SSDs, whereas the SF6010 gives you 144GB of cache per node and uses 600GB SSDs. Interesting? Well, to a certain point I would say. SolidFire isn’t really about the hardware if you ask me. It is about what is inside the box, or boxes I should say, as the starting point is always 5 nodes. So what is inside?

Architecture

SolidFire’s architecture is based on a scale-out model and of course flash, in the form of SSD. You start out with 5 nodes and you can go up to 100 nodes, all connected to your hosts via iSCSI. Those 100 nodes would be able to provide you with 5 million IOPS and about 2.1 petabytes of capacity. Each node that is added scales performance linearly and of course adds capacity. SolidFire also offers deduplication, compression and thin provisioning. Considering it is a scale-out model it is probably not needed to point this out, but dedupe and compression are cluster-wide. Now the nice thing about the SolidFire architecture is that it doesn’t use traditional RAID, which means that the long rebuild times when a disk or a node fails do not apply to SolidFire. Rather, SolidFire evenly distributes data across all disks and nodes, so when a single disk or even a node fails, rebuild time is not constrained by a limited amount of resources; many components can help in parallel to get back to a normal state. What I liked most about their architecture is that it already closely aligns with VMware’s Virtual Volume (VVOL) concept. SolidFire is prepared for VVOLs when they are released.
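To make the linear scaling claim a bit more tangible, here is a back-of-the-envelope sketch in Python. Note that the per-node figures are not SolidFire specifications. They are simply the 100-node numbers quoted above divided by 100, under the assumption that scaling is perfectly linear, and the function name is made up for this example.

```python
# Rough scale-out arithmetic. The per-node values are derived from the
# 100-node figures quoted in the article (5 million IOPS, 2.1 PB), assuming
# perfectly linear scaling. They are not vendor specifications.
MIN_NODES = 5
MAX_NODES = 100
IOPS_PER_NODE = 5_000_000 // MAX_NODES  # 50,000 IOPS per node (derived)
TB_PER_NODE = 2100 / MAX_NODES          # 21 TB per node (2.1 PB taken as 2,100 TB)


def cluster_estimate(nodes: int) -> tuple[int, float]:
    """Return (IOPS, capacity in TB) for a cluster of the given size."""
    if not MIN_NODES <= nodes <= MAX_NODES:
        raise ValueError(f"node count must be between {MIN_NODES} and {MAX_NODES}")
    return nodes * IOPS_PER_NODE, nodes * TB_PER_NODE


for n in (5, 20, 100):
    iops, tb = cluster_estimate(n)
    print(f"{n:>3} nodes: {iops:>9,} IOPS, {tb:,.0f} TB")
```

Under these assumptions the minimum 5-node cluster would land at roughly 250,000 IOPS and 105 TB. Treat that as an estimate for sizing conversations, not as a quoted figure.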

Quality of Service

I have already briefly mentioned this, but Quality of Service (QoS) is one of the key drivers of the SolidFire solution. It revolves around having the ability to provide an X amount of capacity with a Y amount of performance (IOPS). What does this mean? SolidFire allows you to specify a minimum and maximum number of IOPS for a volume, and also a burst limit. Let’s quote the SolidFire website, as I think they explain it in a clear way:

- Min IOPS – The minimum number of I/O operations per-second that are always available to the volume, ensuring a guaranteed level of performance even in failure conditions.

- Max IOPS – The maximum number of sustained I/O operations per-second that a volume can process over an extended period of time.

- Burst IOPS – The maximum number of I/O operations per-second that a volume will be allowed to process during a spike in demand, particularly effective for data migration, large file transfers, database checkpoints, and other uneven latency sensitive workloads.

Now I do want to point out here that SolidFire storage systems have no form of “admission control” when it comes to QoS. Although it is mentioned that there is a guaranteed level of performance, this is up to the administrator. You as the admin will need to do the math and not overprovision from a performance point of view if you truly want to guarantee a specific performance level. And when you do that math, you will need to take failure scenarios into account!
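The math mentioned above could look something like the following Python sketch. It adds up the Min IOPS of all volumes and compares the total against what the cluster can deliver with a node down. Everything here is an assumption for illustration: the `VolumeQoS` class, the `min_iops_headroom` helper, the min ≤ max ≤ burst sanity rule, and the 50,000 IOPS per node figure (the article’s 5 million IOPS divided by 100 nodes). None of it comes from SolidFire’s software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VolumeQoS:
    """QoS settings for one volume (hypothetical model, not a SolidFire type)."""
    name: str
    min_iops: int
    max_iops: int
    burst_iops: int

    def __post_init__(self):
        # Assumed sanity rule: min <= max <= burst.
        if not 0 <= self.min_iops <= self.max_iops <= self.burst_iops:
            raise ValueError(f"{self.name}: expected 0 <= min <= max <= burst")


def min_iops_headroom(volumes, nodes, iops_per_node, failed_nodes=1):
    """IOPS left over after honouring every Min IOPS with some nodes down.

    A negative result means the minimums are overcommitted in that scenario.
    """
    surviving = nodes - failed_nodes
    if surviving <= 0:
        raise ValueError("at least one node must survive")
    return surviving * iops_per_node - sum(v.min_iops for v in volumes)


# 45 volumes with a 5,000 IOPS minimum each, on a 5-node cluster.
volumes = [VolumeQoS(f"vol{i}", 5_000, 10_000, 15_000) for i in range(45)]

for failed in (0, 1):
    headroom = min_iops_headroom(volumes, nodes=5, iops_per_node=50_000,
                                 failed_nodes=failed)
    status = "ok" if headroom >= 0 else "OVERCOMMITTED"
    print(f"{failed} node(s) down: headroom {headroom:,} IOPS ({status})")
```

In this example the minimums fit while all five nodes are healthy, but the cluster comes up 25,000 IOPS short once a single node fails. That is exactly the kind of scenario you need to plan for yourself.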

One thing that my automation friends William Lam and Alan Renouf will like is that you can manage all these settings using SolidFire’s REST-based API.
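To give an idea of what automating these QoS settings could look like, here is a minimal Python sketch that only builds an HTTP request and never sends it. I want to be very clear that the host name, the URL layout, the HTTP method and the JSON field names are all placeholders I made up for illustration. Check SolidFire’s API documentation for the real calls.

```python
import json
import urllib.request

# Hypothetical endpoint, for illustration only. This is not SolidFire's real API.
API_BASE = "https://storage.example.com/api"


def build_qos_request(volume_id: int, min_iops: int, max_iops: int,
                      burst_iops: int) -> urllib.request.Request:
    """Build (but do not send) a request that would update a volume's QoS."""
    # Field names are assumptions, not taken from the vendor's documentation.
    body = {
        "qos": {
            "min_iops": min_iops,
            "max_iops": max_iops,
            "burst_iops": burst_iops,
        }
    }
    return urllib.request.Request(
        url=f"{API_BASE}/volumes/{volume_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = build_qos_request(42, min_iops=1_000, max_iops=5_000, burst_iops=8_000)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

The point is simply that a min / max / burst triplet per volume maps nicely onto a small, scriptable payload, which is what makes this kind of QoS attractive from an automation perspective.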

(VMware) Integration

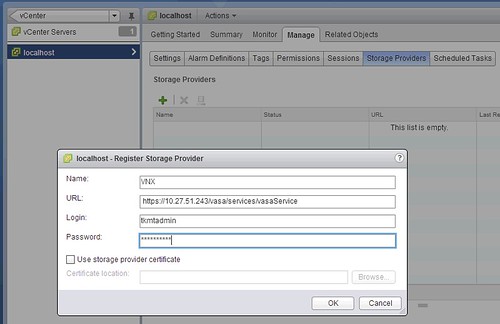

Of course, integration came up during the conversation. SolidFire is all about enabling their customers to automate as much as they possibly can, and they have implemented a REST-based API for that. They are investing heavily in integration with, for instance, OpenStack, but also with VMware. They offer full support for the vSphere Storage APIs – Storage Awareness (VASA) and are also working towards full support for vSphere Storage APIs – Array Integration (VAAI). Currently not all VAAI primitives are supported, but they promised me that this is a matter of time. (They support: Block Zeroing, Space Reclamation, Thin Provisioning. See the HCL for more details.) On top of that they are also looking at the future and going full steam ahead when it comes to Virtual Volumes. Obvious question from my side: what about replication / SRM? This is being worked on, hopefully more news about this soon!

Now with all this integration, did they forget about what is sitting in between their storage system and the compute resources? In other words, what are they doing with the network?

Software Defined Networking?

I can be short: no, they did not forget about the network. SolidFire is partnering with Plexxi and Arista to provide a great end-to-end experience when it comes to building a storage environment. Where with Arista the focus is currently more on monitoring the different layers, Plexxi seems to focus more on configuration and optimization for performance. No end-to-end QoS yet, but a great step forward if you ask me! I can see this being expanded in the future.

Wrapping up

I had already briefly looked at SolidFire after the various tweets we exchanged, but this proper introduction has really opened my eyes. I am impressed by what SolidFire has achieved in a relatively short amount of time. Their solution is all about customer experience, whether that is performance related or the ability to automate the full storage provisioning process… their architecture / concept caters for this. I have definitely added them to my list of storage vendors to visit at VMworld, and I am hoping that those who are looking into Software Defined Storage solutions will do the same, as SolidFire belongs on that list.

