It was recently announced that Storage I/O Control (SIOC) is going to be deprecated and that, as a result, Storage DRS (SDRS) I/O load balancing will be deprecated as well. The following was mentioned in the release notes of vSphere 8.0 Update 3:

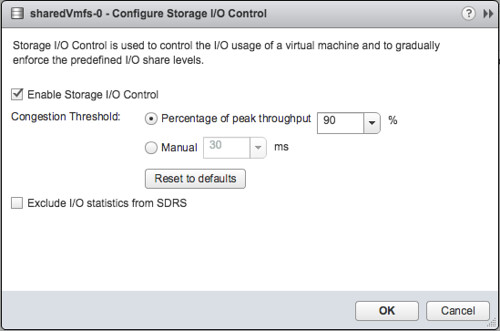

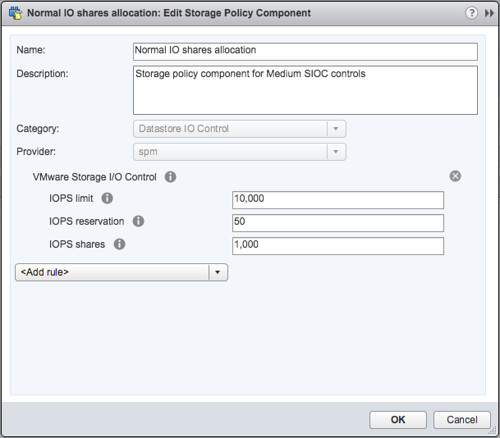

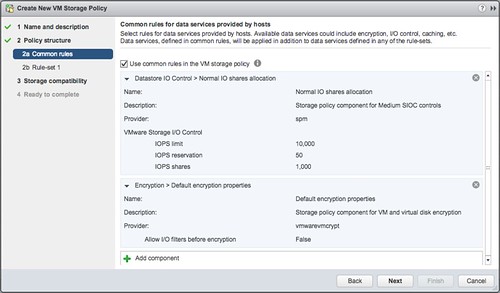

> Deprecation of Storage DRS Load Balancer and Storage I/O Control (SIOC): The Storage DRS (SDRS) I/O Load Balancer, SDRS I/O Reservations-based load balancer, and vSphere Storage I/O Control Components will be deprecated in a future vSphere release. Existing 8.x and 7.x releases will continue to support this functionality. The deprecation affects I/O latency-based load balancing and I/O reservations-based load balancing among datastores within a Storage DRS datastore cluster. In addition, enabling of SIOC on a datastore and setting of Reservations and Shares by using SPBM Storage policies are also being deprecated. Storage DRS Initial placement and load balancing based on space constraints and SPBM Storage Policy settings for limits are not affected by the deprecation.

So why do I bring this up if it was announced a while back? Well, apparently not everyone saw the announcement, and not everyone fully understands the impact. For Storage DRS (SDRS), it means that ‘capacity balancing’ essentially remains available, but anything related to performance (I/O latency-based and I/O reservations-based load balancing) will not be available in a future major release. Noisy neighbor handling through SIOC, with shares and, for instance, I/O reservations, will also no longer be available.

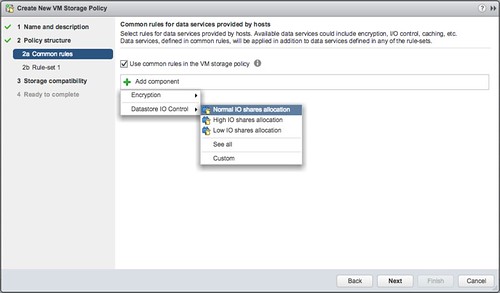

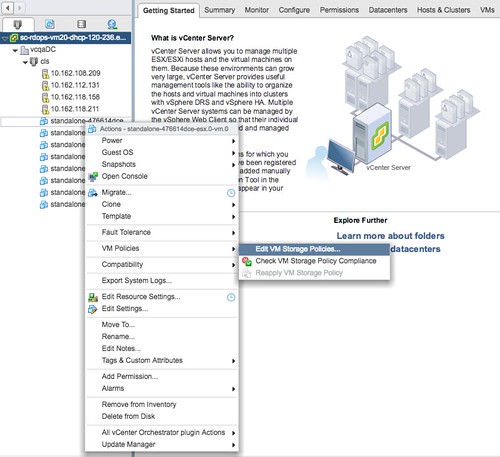

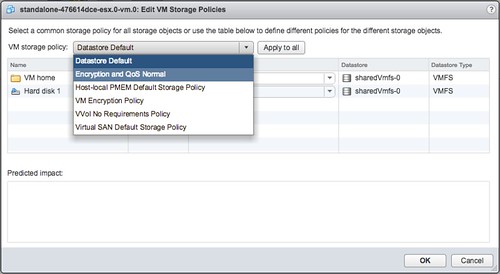

Some of you may have already noticed that the ability to specify an IOPS limit on a per-VM level has disappeared from the UI. So what does this mean for IOPS limits in general? Well, that functionality remains available through Storage Policy Based Management (SPBM), as it is today. So if you set IOPS limits on a per-VM basis in vSphere 7, you will need to use the SPBM policy option once you upgrade to vSphere 8! The IOPS limit option in SPBM will remain available: even though it shows up under “SIOC” in the UI, it is actually applied through the disk scheduler on a per-host basis.
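If you have a lot of per-VM IOPS limits to move over, it can help to first work out which distinct limit values are in use, since each value would typically map to one SPBM policy. Below is a minimal planning sketch in plain Python, not a vSphere API call. The `DiskSetting` record, the `disks_needing_policy` helper, and the sample inventory are all hypothetical, and the `-1` "unlimited" sentinel is an assumption about how your inventory export represents a disk without a limit.

```python
from dataclasses import dataclass

UNLIMITED = -1  # assumed sentinel for "no IOPS limit configured"


@dataclass(frozen=True)
class DiskSetting:
    """Hypothetical record of one virtual disk's per-VM IOPS limit."""
    vm_name: str
    disk_label: str
    iops_limit: int  # UNLIMITED when no limit is configured


def disks_needing_policy(disks):
    """Group disks with an explicit IOPS limit by the limit value.

    Each key in the result is a candidate for one SPBM policy carrying
    that IOPS limit; disks without a limit are skipped.
    """
    by_limit = {}
    for disk in disks:
        if disk.iops_limit == UNLIMITED:
            continue  # nothing to migrate for this disk
        by_limit.setdefault(disk.iops_limit, []).append(
            (disk.vm_name, disk.disk_label)
        )
    return by_limit


# Made-up sample inventory, purely for illustration.
inventory = [
    DiskSetting("app01", "Hard disk 1", UNLIMITED),
    DiskSetting("db01", "Hard disk 1", 2000),
    DiskSetting("db01", "Hard disk 2", 500),
    DiskSetting("db02", "Hard disk 1", 2000),
]

for limit, members in sorted(disks_needing_policy(inventory).items()):
    print(f"IOPS limit {limit}: {members}")
```

In this made-up inventory, two distinct limits are in use, so two policies would cover all three limited disks. How you collect the inventory (PowerCLI, an API export, a spreadsheet) is up to you; the sketch only covers the grouping step.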