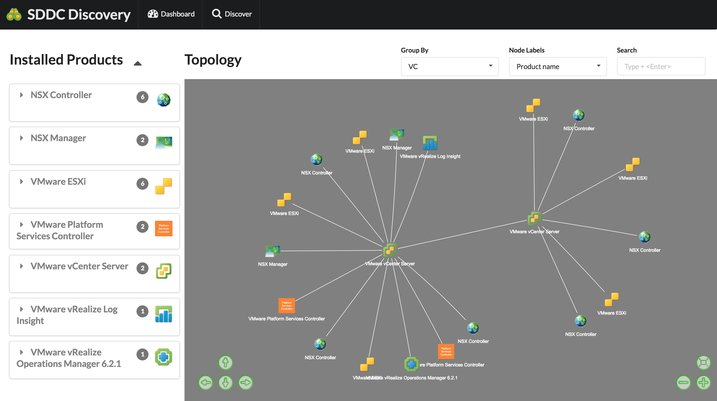

Do you still recall the days when you had the awesome Maps view in the old vSphere Client? Unfortunately, it was removed from the Web Client. A shame, as it was very useful for troubleshooting and for seeing the relationships between the components of your stack. Guess what?! We just released a fling that shows you a map of your VMware products and how they are connected. I think it is brilliant to have this fling made available, and it would be even better if we could get it into our products at some point. If you want to test it out, go to the SDDC Discovery Tool fling page and download it, and don’t forget to leave feedback!

Awesome tool if you ask me, and very useful. If I may make a feature request, I would love to see some sort of grouping for hosts that belong to the same cluster: maybe stack the hosts in a cluster object and allow you to drill down to see them all. Just a thought.

I’ve been thinking about the term Software Defined Data Center for a while now. “Software defined” is a great term, but many seem to agree that things have been defined by software for a long time already. When we talk about SDDC with customers, it typically refers to the ability to abstract, pool and automate all aspects of an infrastructure. These are very important factors, but to me they are not the most important ones, as they don’t necessarily speak to the agility and flexibility a solution like this should bring. So what is an even more important aspect?

With Virtual Volumes, placement of a VM (or VMDK) is based on how the policy is constructed and what is defined in it. The Storage Policy Based Management (SPBM) engine gives you the flexibility to define policies any way you like. Of course you are limited to what your storage system is capable of delivering, but from the vSphere platform point of view you can do what you like and create many different variations. If you specify that the object needs to be thin provisioned, has a specific IO profile, needs to be deduplicated, or… then those requirements are passed down to the storage system, which makes its placement decisions based on them and ensures that the demands can be met. Of course, as stated earlier, requirements like QoS and availability are passed down as well. These could be things like latency, IOPS and how many copies of an object are needed (number of 9s resiliency). On top of that, when requirements change, or when an SLA is breached for whatever reason, the infrastructure in a requirements-driven environment will assess the situation and remediate to ensure the requirements are met.
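To make the requirements-driven idea a bit more concrete, here is a minimal Python sketch of policy-based placement and remediation. It is purely illustrative and assumes a simplified model: `Container`, `Policy`, `satisfies`, `place` and `remediate` are hypothetical names, not the SPBM or VASA API, and a policy is reduced to a set of required capabilities plus a minimum IOPS and copy count. In reality the storage system makes these decisions internally, based on the capabilities it advertises.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Container:
    """Hypothetical: capabilities a storage system advertises (not a real VASA/SPBM type)."""
    name: str
    capabilities: frozenset[str]  # e.g. {"thin", "dedup"}
    max_iops: int                 # performance the container can currently deliver
    copies: int                   # copies kept of each object (availability)


@dataclass(frozen=True)
class Policy:
    """Hypothetical: the requirements a VM/VMDK policy passes down to the array."""
    name: str
    required: frozenset[str] = frozenset()
    min_iops: int = 0
    min_copies: int = 1


def satisfies(c: Container, p: Policy) -> bool:
    """True if the container meets every requirement in the policy."""
    return (
        p.required <= c.capabilities
        and c.max_iops >= p.min_iops
        and c.copies >= p.min_copies
    )


def place(p: Policy, containers: list[Container]) -> Container:
    """Choose among compatible containers; the tightest IOPS fit leaves headroom elsewhere."""
    compatible = [c for c in containers if satisfies(c, p)]
    if not compatible:
        raise ValueError(f"no container can satisfy policy {p.name!r}")
    return min(compatible, key=lambda c: c.max_iops - p.min_iops)


def remediate(current: Container, p: Policy, containers: list[Container]) -> Container:
    """Re-assess after a policy change or SLA breach; move only when out of compliance."""
    return current if satisfies(current, p) else place(p, containers)


gold = Container("gold", frozenset({"thin", "dedup"}), max_iops=50_000, copies=3)
silver = Container("silver", frozenset({"thin"}), max_iops=10_000, copies=2)
pool = [gold, silver]

web = Policy("web", frozenset({"thin"}), min_iops=5_000)
print(place(web, pool).name)  # silver

# Requirements change: the policy now also demands deduplication.
web_dedup = replace(web, required=frozenset({"thin", "dedup"}))
print(remediate(silver, web_dedup, pool).name)  # gold

# SLA breach: silver can no longer deliver the IOPS the policy asks for.
degraded = replace(silver, max_iops=4_000)
print(remediate(degraded, web, [gold, degraded]).name)  # gold
```

Picking the tightest fit rather than the most capable container is just one possible design choice in this sketch; it keeps the premium container free for the policies that actually need it.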

When I look at discussions about whether server-side caching solutions are preferable to all-flash arrays, which if you ask me is just another form factor discussion, the only right answer that comes to mind is “it depends”. It depends on your business requirements, your budget, any environmental constraints, your hardware life cycle, your staff’s expertise and knowledge, and so on. It is impossible to provide a single answer and solution to all the problems out there. What I realized is that what the software-defined movement actually brought us is choice, and in many of these cases the form factor is just a tiny aspect of the total story. It still seems to be important to many people, though, maybe as an inheritance from the “server hugger” days when hardware was still king? Those times are long gone if you ask me.