Today a new startup is revealed named Coho Data, formerly known as Convergent.io. Coho Data was founded by Andrew Warfield, Keir Fraser and Ramana Jonnala, who are probably best known for the work they did at Citrix on XenServer. For those who care, they are backed by Andreessen Horowitz. What is it they introduced / revealed this week?

Coho Data introduces a new scale-out hybrid storage solution (NFS for VM workloads), with hybrid meaning a mix of SATA and SSD. This is for obvious reasons: SATA brings you capacity and flash provides you raw performance. Let me point out that Coho is not a hyperconverged solution, it is a full storage system.

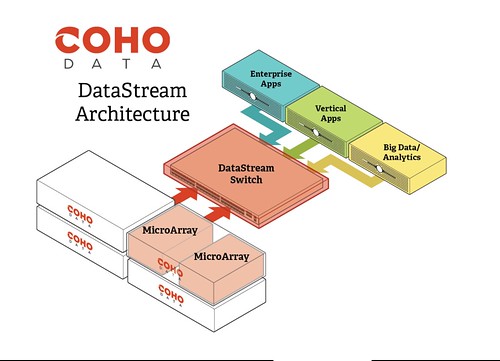

What does it look like? It is a 2U box which holds 2 “MicroArrays”, with each MicroArray having 2 processors, 2 x 10GbE NIC ports and 2 PCIe Intel 910 cards. Each 2U block provides you 39TB of capacity and ~180K IOPS (random 80/20 read/write, 4K block size), starting at $2.50 per GB, pre-dedupe and compression (which they of course offer). A couple of things I liked when looking at their architecture: first and probably foremost the “scale-out” architecture, which according to them scales to infinity in a linear fashion. On top of that, it comes with an OpenFlow-enabled 10GbE switch to allow for ease of management and, again, scalability.
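To put those per-block numbers in perspective, here is a back-of-the-envelope sketch in Python. It assumes decimal units (1 TB = 1,000 GB), that the $2.50 per GB starting price applies to the full 39TB, and that scaling really is perfectly linear as claimed. The function and constant names are mine and purely illustrative, not anything Coho ships.

```python
# Back-of-the-envelope numbers for Coho Data 2U blocks.
# Assumptions (mine, not Coho's): decimal units, the starting price
# applies to all 39TB, and scaling is perfectly linear.

CAPACITY_TB_PER_BLOCK = 39
IOPS_PER_BLOCK = 180_000      # ~180K, random 80/20 read/write, 4K blocks
PRICE_PER_GB_USD = 2.50       # starting price, pre-dedupe and compression
GB_PER_TB = 1000              # assumption: decimal terabytes


def cluster_estimate(blocks: int) -> dict:
    """Return capacity, IOPS and price for a cluster of `blocks` 2U blocks."""
    if blocks < 1:
        raise ValueError("need at least one block")
    capacity_tb = blocks * CAPACITY_TB_PER_BLOCK
    return {
        "capacity_tb": capacity_tb,
        "iops": blocks * IOPS_PER_BLOCK,
        "price_usd": capacity_tb * GB_PER_TB * PRICE_PER_GB_USD,
    }


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(n, cluster_estimate(n))
```

Under those assumptions a single block works out to roughly $97,500 at the starting price, and four blocks would give you 156TB and ~720K IOPS.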

If you look closely at how they architected their hardware, they created these high-speed IO lanes: 10GbE NIC <–> CPU <–> PCIe Flash Unit. Each highway has its dedicated CPU and NIC port, and on top of that its own PCIe flash, allowing for optimal performance, efficiency and fine-grained control. Nice touch if you ask me.

Another thing I really liked was their UI. You can really see they put a lot of thought into the user experience by keeping things simple and presenting data in an easily understandable way. I wish every vendor did that. I mean, if you look at the screenshot below, how simple does that look? Dead simple, right!? I’ve seen some of the other screens, for instance the one for creating a snapshot schedule… again the same simplicity. Apparently, and I have not tested this but I will take their word for it, they brought that simplicity all the way down to the “install / configure” part of things. Getting Coho Data up and running literally only takes 15 minutes.

What I also liked very much about the Coho Data solution is that Software-defined Networking (SDN) and Software-defined Storage (SDS) are tightly coupled. In other words, Coho configures the network for you… As just said, it takes 15 minutes to set up. Try creating the zoning / masking scheme for a storage system and a set of LUNs these days; even that takes more time than 15 – 20 minutes. There aren’t too many vendors combining SDN and SDS in a smart fashion today.

When they briefed me they gave me a short demo and Andy explained the scale-out architecture. During the demo it happened various times that I could draw a parallel between the VMware virtualization platform and their solution, which made it easy for me to understand and relate to their solution. For instance, Coho Data offers what I would call DRS for Software-Defined Storage. If for whatever reason defined policies are violated, then Coho Data will balance the workload appropriately across the cluster. Just like DRS (and Storage DRS) does, Coho Data will do a risk/benefit analysis before initiating the move. I guess the logical question would be: why would I want Coho to do this when VMware can also do this with Storage DRS? Well, keep in mind that Storage DRS works “across datastores”, but as Coho presents a single datastore you need something that allows you to balance within.
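To make the “risk/benefit analysis before a move” idea a bit more concrete, below is a toy sketch in Python. To be very clear: this is not Coho’s (or VMware’s) actual algorithm, which I have not seen. The `Workload` class, the `propose_move` function, the `max_iops` policy and the `cost_per_gb` weight are all hypothetical names and knobs I made up for illustration. The only thing it demonstrates is the principle: act only when a policy is violated, and only move a workload when the expected benefit outweighs the cost of moving it.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    iops: int       # current load generated by this workload
    size_gb: int    # data that would have to be copied on a move


def propose_move(nodes: dict[str, list[Workload]], max_iops: int,
                 cost_per_gb: float = 1.0):
    """Return (workload, source, target) for the best move, or None.

    Hypothetical policy: a node may not carry more than `max_iops`.
    `cost_per_gb` is an arbitrary weight that expresses the cost of
    copying 1 GB in the same unit as the benefit (IOPS of imbalance).
    """
    load = {n: sum(w.iops for w in ws) for n, ws in nodes.items()}
    source = max(load, key=load.get)   # busiest node
    target = min(load, key=load.get)   # least busy node
    if load[source] <= max_iops or source == target:
        return None  # no policy violated, nothing to do

    best, best_score = None, 0.0
    gap_before = load[source] - load[target]
    for w in nodes[source]:
        # Benefit: how much the gap between the two nodes shrinks.
        gap_after = abs((load[source] - w.iops) - (load[target] + w.iops))
        benefit = gap_before - gap_after
        # Risk/cost: the amount of data that has to be moved.
        cost = w.size_gb * cost_per_gb
        if benefit - cost > best_score:
            best, best_score = w, benefit - cost
    return (best, source, target) if best else None


if __name__ == "__main__":
    nodes = {
        "microarray-1": [Workload("vm-a", 90_000, 200),
                         Workload("vm-b", 60_000, 500)],
        "microarray-2": [Workload("vm-c", 20_000, 100)],
    }
    print(propose_move(nodes, max_iops=100_000))
```

In this made-up example the first node is over its limit, and moving `vm-b` levels out the two nodes better than moving `vm-a` would, even though it is the larger of the two to copy. If no node violates the policy the function simply returns `None` and nothing is moved.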

I guess the question then remains: what do they lack today? Well, today as a 1.0 platform Coho doesn’t offer replication to outside of their own cluster. But considering they have snapshotting in place I suspect their architecture already caters for it, and it is something they should be able to release fairly quickly. Another thing which is lacking today is a vSphere Web Client plugin, but then again if you look at their current UI and the simplicity of it I do wonder if there is any point in having one.

All in all, I have been impressed by these newcomers in the SDS space and I can’t wait to play around with their gear at some point!
