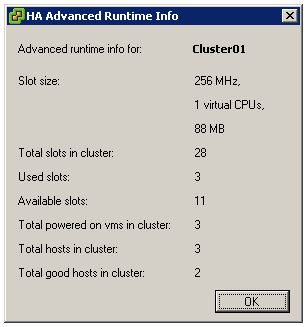

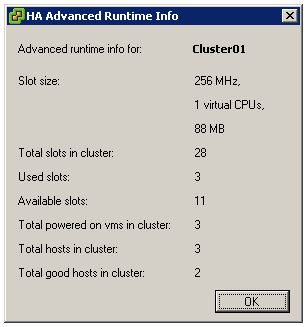

I discussed slot sizes a week ago but forgot to add a screenshot of a great new vSphere feature which reports slot info of a cluster.

I just love vSphere!

by Duncan Epping

Duncan Epping · ·

HyTrust just published info on the latest and greatest version of their appliance, which will be released on the 24th of August and will carry version number 1.5. HyTrust sits between your virtual environment and the admin and enforces granular authorization of all virtual infrastructure management operations, according to user role, object, label, protocol and IP address. If you are attending VMworld I suggest you head over to their booth and ask for a demo.

Additional New Features:

Duncan Epping · ·

Scott Drummonds just posted a new blog article about an upcoming VMware PSO offering. When Scott Drummonds is involved you know the topic of the offering is performance. In this case it’s performance related to SQL databases and I/O bottlenecks, which is probably the most reported issue. As Scott briefly explains, they were able to identify the issue rather quickly by monitoring both the physical servers and the virtual environment.

I guess this quote from Scott’s article captures the essence:

In the customer’s first implementation of the virtual infrastructure, both SQL Servers, X and Y, were placed on RAID group A. But in the native configuration SQL Server X was placed on RAID group B. This meant that the storage bandwidth of the physical configuration was approximately 1850 IOPS. In the virtual configuration the two databases shared a single 800 IOPS RAID volume.

It does not take a rocket scientist to realize that users are going to complain when a critical SQL Server instance goes from 1050 IOPS to 400. And this was not news to the VI admin on-site, either. What we found as we investigated further was that virtual disks requested by the application owners were used in unexpected and undocumented ways and frequently demanded more throughput than originally estimated. In fact, through vscsiStats analysis (Using vscsiStats for Storage Performance Analysis), my contact and I were able to identify an “unused” VMDK with moderate sequential IO that we immediately recognized as log traffic. Inspection of the application’s configuration confirmed this.

Duncan Epping · ·

This is the whitepaper I’ve been waiting for. By now we all know that the CPU scheduler has changed. The only problem was that there wasn’t any official documentation about what changed and where we would benefit. Well, that has changed. VMware just published a new whitepaper titled “The CPU Scheduler in VMware® ESX™ 4”.

The CPU scheduler in VMware ESX 4 is crucial to providing good performance in a consolidated environment. Since most modern processors are equipped with multiple cores per processor, systems with tens of cores running hundreds of virtual machines are common. In such a large system, allocating CPU resource efficiently and fairly is critical. In ESX 4, there are significant changes to the ESX CPU scheduler that improve performance and scalability. This paper describes these changes and their impact. This paper also provides details of the CPU scheduling algorithms in the ESX server.

I can elaborate all I want but I need you guys to read the whitepaper to understand why vSphere is performing a lot better than VI 3.5. (I will give you a hint: “cell”.)

Another whitepaper that’s definitely worth reading is “Virtual Machine Monitor Execution Modes: in VMware vSphere 4.0”.

The monitor is a thin layer that provides virtual x86 hardware to the overlying operating system. This paper contains VMware vSphere 4.0 default monitor modes chosen for many popular guests running modern x86 CPUs. While most workloads perform well under these default settings, a user may derive performance benefits by overriding the defaults. The paper examines situations where manual monitor mode configuration may be practical and provides two ways of changing the default monitor mode of the virtual machine in vSphere.

And while you are already taking the time to educate yourself you might also want to read the “FT Architecture and Performance” whitepaper. Definitely worth reading!

Duncan Epping · ·

This has always been a hot topic: HA and slot sizes/admission control. One of the most extensive (non-VMware) articles is by Chad Sakac aka Virtual Geek, but of course a couple of things have changed since then. Chad asked in a comment on my HA Deepdive whether I could address this topic, so here you go, Chad.

Let’s start with the basics.

What is a slot?

A slot is a logical representation of the memory and CPU resources that satisfy the requirements for any powered-on virtual machine in the cluster.

In other words a slot size is the worst case CPU and Memory reservation scenario in a cluster. This directly leads to the first “gotcha”:

HA uses the highest CPU reservation of any given VM and the highest memory reservation of any given VM.

If VM1 has 2GHz and 1024MB reserved and VM2 has 1GHz and 2048MB reserved, the slot size for memory will be 2048MB + memory overhead and the slot size for CPU will be 2GHz.
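To make the "worst case" rule concrete, here is a minimal Python sketch of it. The `VM` class, its field names and the `slot_size` function are made up for illustration, and the memory overhead is simply passed in as a number (I am assuming it is added per VM before taking the maximum); this is not how vCenter exposes the calculation.

```python
from dataclasses import dataclass


@dataclass
class VM:
    # Hypothetical structure, for illustration only.
    name: str
    cpu_reservation_mhz: int
    mem_reservation_mb: int
    mem_overhead_mb: int = 0  # assumed input, not looked up from vSphere


def slot_size(vms):
    """Return (cpu_slot_mhz, mem_slot_mb) using the highest reservations."""
    cpu_slot = max(vm.cpu_reservation_mhz for vm in vms)
    mem_slot = max(vm.mem_reservation_mb + vm.mem_overhead_mb for vm in vms)
    return cpu_slot, mem_slot


# The example from the text: VM1 = 2GHz/1024MB, VM2 = 1GHz/2048MB.
vms = [VM("VM1", 2000, 1024), VM("VM2", 1000, 2048)]
print(slot_size(vms))  # (2000, 2048), before any memory overhead is added
```

Note that the CPU value comes from VM1 and the memory value from VM2: the slot does not have to match any single VM.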

Now how does HA calculate how many slots are available per host?

Of course we need to know what the slot size for memory and CPU is first. Then we divide the total available CPU resources of a host by the CPU slot size and the total available memory resources of a host by the memory slot size. This leaves us with a number of slots for both memory and CPU. The most restrictive number is the number of slots for this host. If you have 25 CPU slots but only 5 memory slots, the number of available slots for this host will be 5.
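The division described above can be sketched in a few lines of Python. The function name and the host capacity numbers are assumptions I picked so that the result matches the 25 versus 5 example; they are not taken from a real host.

```python
def slots_per_host(host_cpu_mhz, host_mem_mb, cpu_slot_mhz, mem_slot_mb):
    """Number of slots a host provides: the most restrictive of CPU and memory."""
    cpu_slots = host_cpu_mhz // cpu_slot_mhz  # whole slots only
    mem_slots = host_mem_mb // mem_slot_mb
    return min(cpu_slots, mem_slots)


# Hypothetical host: 50000 MHz and 10240 MB available,
# with a slot size of 2000 MHz and 2048 MB.
print(slots_per_host(50000, 10240, 2000, 2048))  # 5 (25 CPU slots, 5 memory slots)
```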

As you can see this can lead to very conservative consolidation ratios. With vSphere this is something that’s configurable. If you have just one VM with a really high reservation you can set the following advanced settings to lower the slot size used during these calculations: das.slotCpuInMHz or das.slotMemInMB. To make sure the VM with the high reservation can still be powered on, such a VM will take up multiple slots. Keep in mind that when you are low on resources this could mean that you are not able to power on this high-reservation VM, as the free slots may be fragmented throughout the cluster instead of located on a single host.
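A rough Python sketch of what "multiple slots" means once the slot size has been capped. The rounding up and taking the larger of the CPU and memory counts are my assumptions about how the count is derived, and the function name is hypothetical.

```python
import math


def slots_needed(cpu_res_mhz, mem_res_mb, cpu_slot_mhz, mem_slot_mb):
    """Slots a single VM consumes when its reservation exceeds the slot size.

    Assumption: round up per resource, take the larger count, minimum of 1.
    """
    cpu = math.ceil(cpu_res_mhz / cpu_slot_mhz)
    mem = math.ceil(mem_res_mb / mem_slot_mb)
    return max(1, cpu, mem)


# Slot size capped at 1000 MHz / 1024 MB (for example via
# das.slotCpuInMHz and das.slotMemInMB); a VM reserving 4096 MB of memory:
print(slots_needed(500, 4096, 1000, 1024))  # 4
```

Those four slots all need to be free on one host, which is exactly where the fragmentation issue mentioned above comes from.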

Now what happens if you set the number of allowed host failures to 1?

The host with the most slots will be taken out of the equation. If you have 8 hosts with 90 slots in total, where 7 hosts have 10 slots each and one host has 20, this single 20-slot host will not be taken into account. Worst case scenario! In other words the 7 remaining hosts (70 slots) should be able to provide enough resources for the cluster when the “20 slot” host fails.

And of course if you set it to 2 the next host that will be taken out of the equation is the host with the second most slots and so on.
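The same worst-case reasoning as a small Python sketch, using the 8-host example. The function name is hypothetical and the sketch only covers the slot arithmetic described here, not the rest of admission control.

```python
def usable_slots(host_slots, host_failures_tolerated):
    """Slots left after removing the N hosts with the most slots (worst case)."""
    remaining = sorted(host_slots, reverse=True)[host_failures_tolerated:]
    return sum(remaining)


hosts = [10] * 7 + [20]        # 8 hosts, 90 slots in total
print(usable_slots(hosts, 1))  # 70: the 20-slot host is taken out
print(usable_slots(hosts, 2))  # 60: the 20-slot host and one 10-slot host
```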

One thing worth mentioning: as Chad stated, with vCenter 2.5 the number of vCPUs for any given VM was also taken into account. This led to very conservative and restrictive admission control. This behavior was modified with vCenter 2.5 U2; the number of vCPUs is no longer taken into account.