Yesterday I got an email about configuring VXLAN. I was in the middle of redoing my lab, so I figured this would be a nice exercise. First I downloaded vShield Manager and migrated from regular virtual switches to a Distributed Switch environment. I am not going to go into any depth on how to do this, as it is fairly straightforward: just right-click the Distributed Switch, select “Add and Manage Hosts” and follow the steps. If you are wondering what the use case for VXLAN would be, I recommend reading Massimo’s post.

VXLAN is an overlay technique that encapsulates layer 2 frames in layer 3 packets (UDP over IP). If you want to know how this works technically you can find the specs here. I wanted to create a virtual wire in my cluster. Just assume this is a large environment with many clusters and many virtual machines. In order to provide some form of isolation I would need to create a lot of VLANs and make sure these are all plumbed to the respective hosts… As you can imagine, that is not as flexible as one would hope. In order to solve this problem VMware (and partners) introduced VXLAN. VXLAN enables you to create a virtual network, aka a virtual wire. This virtual wire is a layer 2 segment, and while the hosts might be in different networks, the VMs can still belong to the same layer 2 segment.
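To make the encapsulation a bit more concrete, here is a minimal Python sketch of the 8-byte VXLAN header as laid out in the public VXLAN specification (RFC 7348): one flags byte, 24 reserved bits, the 24-bit segment ID, and 8 more reserved bits. The function names are mine and purely illustrative. This only builds the header that sits in front of the original layer 2 frame; the outer UDP/IP/Ethernet headers that the host adds are left out.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag in RFC 7348: the segment ID field is valid


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit segment ID (VNI)."""
    if not 0 <= vni < 2**24:
        raise ValueError("segment ID must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: segment ID (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Extract the segment ID from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix a layer 2 frame with a VXLAN header (this becomes the UDP payload)."""
    return pack_vxlan_header(vni) + inner_frame
```

For example, `unpack_vni(encapsulate(5000, frame))` gives back `5000` for any byte string `frame`, which is how the receiving host knows which virtual wire the inner frame belongs to.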

I deployed the vShield virtual appliance as this is a requirement for using VXLAN. After deploying it you will need to configure the network. This is fairly simple:

- Log in to the console of the vShield Manager (admin / default)

- type “enable” (password is “default”)

- type “setup” and provide all the required details

- log out

Now the vShield Manager virtual appliance is configured and you can go to “https://<ip address of vsm>/”. You can log in using admin / default. Next you will need to link this vShield Manager to vCenter Server:

- Click “Settings & Reports” in the left pane

- Now you should be on the “Configuration” tab in the “General” section

- Click edit on the “vCenter Server” section and fill out the details (ip or hostname / username / password)

Now you should see some new shiny bright objects in the left pane when you start unfolding:

Now let’s get VXLAN’ing

- Click your “datacenter object” (in my case that is “Cork”)

- Click the “Network virtualization” tab

- Click “Preparation” -> “Connectivity”

- Click “Edit”, tick your “cluster(s)” and click “Next”

- I changed the teaming policy to “failover” as I have no port channels configured on my physical switches. Depending on your infrastructure, make the changes required and click “Finish”

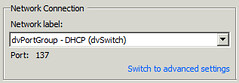

An agent will now be installed on the hosts in your cluster. This is a “vib” package that handles VXLAN traffic. A new vmknic is also created on each host. This vmknic is created with DHCP enabled; if needed in your environment you can change this to a static address. Let’s continue with finalizing the preparation.

- Click “Segment ID”

- Enter a pool of Segment IDs. Note that if you have multiple vShield Managers this pool will need to be unique, as a segment ID will be assigned to each virtual wire and you don’t want virtual wires with the same ID. I used “5000 – 5900”

- Fill out the “Multicast address range”. I used 225.1.1.1-225.1.4.254
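If you want to sanity-check the values you are about to enter, a small Python sketch like the one below can help. It counts the segment IDs and multicast addresses in a range and checks whether two segment ID pools (for example from two vShield Managers) overlap. The helper names are hypothetical, and nothing here talks to vShield Manager; it is just arithmetic on the numbers from the steps above.

```python
import ipaddress

MAX_SEGMENT_ID = 2**24 - 1  # the VXLAN segment ID is a 24-bit field


def segment_pool_size(first: int, last: int) -> int:
    """Number of segment IDs in the inclusive pool first..last."""
    if not 0 <= first <= last <= MAX_SEGMENT_ID:
        raise ValueError("pool must be ordered and fit in 24 bits")
    return last - first + 1


def pools_overlap(pool_a: tuple, pool_b: tuple) -> bool:
    """True if two inclusive (first, last) segment ID pools share any ID."""
    return pool_a[0] <= pool_b[1] and pool_b[0] <= pool_a[1]


def multicast_range_size(first: str, last: str) -> int:
    """Number of addresses in the inclusive IPv4 multicast range first..last."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    if not (start.is_multicast and end.is_multicast):
        raise ValueError("both addresses must be IPv4 multicast (224.0.0.0/4)")
    if start > end:
        raise ValueError("range start must not be after range end")
    return int(end) - int(start) + 1
```

With the values I used, `segment_pool_size(5000, 5900)` returns `901` and `multicast_range_size("225.1.1.1", "225.1.4.254")` returns `1022`. A second pool of `(5901, 6800)` would not overlap with mine, while `(5900, 6000)` would.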

Now that we have prepped the hosts we can begin creating a virtual wire. First we will create a network scope. The scope is the boundary of your virtual network: if you have 5 clusters and want them to have access to the same virtual wires, you will need to make them part of the same network scope.

- Click “network scopes”

- Click the “green plus” symbol to “add a network scope”

- Give the scope a name and select the clusters you want to add to this network scope

- Click “OK”
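The relationship between clusters, network scopes, virtual wires and segment IDs can be sketched as a tiny data model. This is only my mental model written down in Python, not how vShield Manager implements it: the class and function names are hypothetical, and handing out segment IDs sequentially from the pool is an assumption made for the sake of the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class SegmentPool:
    """Inclusive pool of segment IDs (sequential allocation is an assumption)."""
    first: int
    last: int
    _next: int = field(init=False)

    def __post_init__(self) -> None:
        self._next = self.first

    def allocate(self) -> int:
        if self._next > self.last:
            raise RuntimeError("segment ID pool exhausted")
        segment_id = self._next
        self._next += 1
        return segment_id


@dataclass
class NetworkScope:
    """A scope is a set of clusters plus the virtual wires created in it."""
    name: str
    clusters: Set[str]
    wires: Dict[str, int] = field(default_factory=dict)  # wire name -> segment ID


def create_wire(scope: NetworkScope, pool: SegmentPool, name: str) -> int:
    """Create a virtual wire in a scope and give it a unique segment ID."""
    if name in scope.wires:
        raise ValueError(f"wire {name!r} already exists in scope {scope.name!r}")
    scope.wires[name] = pool.allocate()
    return scope.wires[name]


def wires_visible_to(cluster: str, scopes: List[NetworkScope]) -> Set[str]:
    """Names of all wires a cluster can use: those in scopes it is a member of."""
    return {
        wire
        for scope in scopes
        if cluster in scope.clusters
        for wire in scope.wires
    }
```

The point of the model: a cluster only sees the wires of the scopes it belongs to, and every wire gets its own segment ID from the shared pool regardless of which scope it lives in.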

Now that we have defined our virtual network boundaries aka “network scope” we can create a virtual wire. The virtual wire is what it is all about, a “layer 2” segment.

- Click “networks”

- Click the “green plus” symbol to “create a VXLAN network”

- Give it a name

- Select the “network scope”

In the example below you see two virtual wires…

Now you have created a new virtual wire aka VXLAN network. You can add virtual machines to it by simply selecting the network in the NIC config section. The question of course remains: how do you get in / out of the network? You will need a vShield Edge device. So let’s add one…

- Click “Edges”

- Click the “green plus” symbol to “add an Edge”

- Give it a name

- I would suggest, if you have this functionality, ticking the “HA” tickbox so that the Edge is deployed in an “active/passive” fashion

- Provide credentials for the Edge device

- Select the uplink interface for this Edge

- Specify the default gateway

- Add the HA options; I would leave these set to the defaults

- And finish the config

If you have a virtual wire that needs to be connected to an Edge (more than likely), go back to “Networks”, select the wire, click the “actions dial”, click “Connect to Edge” and select the correct Edge device.

Now that you have a couple of wires you can start provisioning VMs or migrating VMs to them. Simply add them to the right network during the provisioning process.