Over the last couple of weeks, basically since VSAN was launched, I have noticed something and figured I would blog about it. Many people seem to be under the impression that VSAN Ready Nodes are their only option if they want to buy new servers to run VSAN on. This is definitely NOT the case. VSAN Ready Nodes are a great solution for people who do not want to go through the exercise of selecting components from the VSAN HCL themselves. However, that exercise is not as complicated as it sounds.

There are a couple of “critical aspects” when it comes to configuring a VSAN host, and those are:

- A server that is on the vSphere HCL (pick any)

- An SSD, disk controller and HDD that are on the VSAN HCL: vmwa.re/vsanhcl

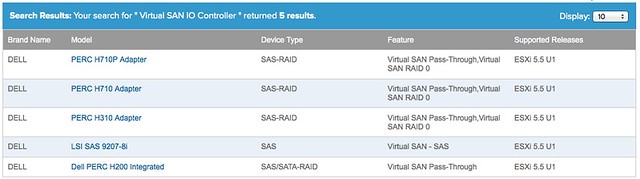

Yes, that is it! If you look at the current list of Ready Nodes, for instance, it contains only a short list of Dell servers (T620 and R720). The vSphere HCL, however, has a long list of Dell servers, and you can use ANY of those. You just need to make sure your critical VSAN components are certified, which you can simply check using the VSAN HCL. For instance, even the low-end PowerEdge R320 can be configured with components that VSAN supports today, as it supports the H710 and H310 disk controllers, which are also on the VSAN HCL.

So let me recap: you can select ANY host from the vSphere HCL, and as long as you ensure the SSD, disk controller and HDD are on the VSAN HCL, you should be good.
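To make that recap concrete, here is a minimal Python sketch of the two checks. It assumes you have copied the relevant entries from the vSphere HCL and the VSAN HCL into plain sets by hand; the set contents, the `HostConfig` type and the `vsan_build_problems` helper are all hypothetical, and the SSD/HDD model names are placeholders rather than real certified parts. It does not query the HCL and is not a VMware tool.

```python
from dataclasses import dataclass

# Hypothetical excerpts of the two compatibility lists, copied by hand.
# Always take real entries from the vSphere HCL and the VSAN HCL (vmwa.re/vsanhcl).
VSPHERE_HCL_SERVERS = {"Dell PowerEdge R320", "Dell PowerEdge R720", "Dell PowerEdge T620"}
VSAN_HCL = {
    "controller": {"Dell H310", "Dell H710"},
    "ssd": {"EXAMPLE-SSD-1"},  # placeholder, not a real certified model
    "hdd": {"EXAMPLE-HDD-1"},  # placeholder, not a real certified model
}


@dataclass
class HostConfig:
    """A proposed VSAN host build (hypothetical structure)."""
    server: str
    controller: str
    ssds: list[str]
    hdds: list[str]


def vsan_build_problems(host: HostConfig) -> list[str]:
    """Return a list of HCL problems; an empty list means the build passes both checks."""
    problems = []
    # Rule 1: the server only needs to be on the vSphere HCL (any model will do).
    if host.server not in VSPHERE_HCL_SERVERS:
        problems.append(f"server not on vSphere HCL: {host.server}")
    # Rule 2: the disk controller, SSDs and HDDs must be on the VSAN HCL.
    if host.controller not in VSAN_HCL["controller"]:
        problems.append(f"disk controller not on VSAN HCL: {host.controller}")
    for ssd in host.ssds:
        if ssd not in VSAN_HCL["ssd"]:
            problems.append(f"SSD not on VSAN HCL: {ssd}")
    for hdd in host.hdds:
        if hdd not in VSAN_HCL["hdd"]:
            problems.append(f"HDD not on VSAN HCL: {hdd}")
    return problems


# A low-end R320 build that is not a Ready Node but still passes both checks.
r320 = HostConfig("Dell PowerEdge R320", "Dell H310", ["EXAMPLE-SSD-1"], ["EXAMPLE-HDD-1"])
print(vsan_build_problems(r320))  # prints []
```

Note that being a Ready Node is not one of the checks: the server test is simply membership in the vSphere HCL, and only the storage components are matched against the VSAN HCL.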

Yesterday Maish and Christian had a nice little back and forth on their blogs about VSAN. Maish published a post titled “

Yesterday Maish and Christian had a nice little back and forth on their blogs about VSAN. Maish published a post titled “