I suspect that the majority of blogs this week will all be about Virtual SAN, Cloud Native Apps and EVO. If you ask me then the vSphere APIs for IO Filtering announcements are just as important. I’ve written about VAIO before, in a way, and it was first released in vSphere 6.0 and opened to a select group of partners. For those who don’t know what it is, lets recap, the vSphere APIs for IO Filtering is a framework which enables VMware partners to develop data services for vSphere in a fully supported fashion. VMware worked closely with EMC and Sandisk during the design and development phase to ensure that VAIO would deliver what partners would require it to deliver.

These data services can be applied to on a VM or VMDK granular level and can be literally anything by simply attaching a policy to your VM or VMDK. In this first official release however you will see two key use cases for VAIO though:

- Caching

- Replication

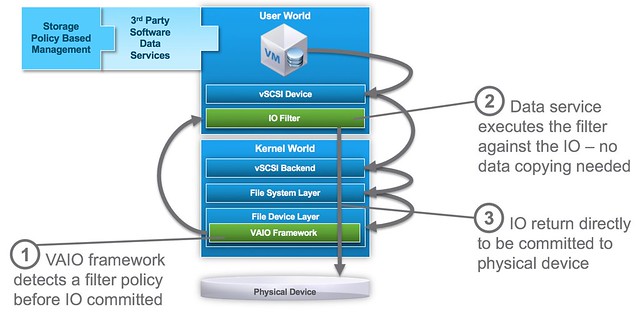

The great thing about VAIO if you ask me is that it is an ESXi user space level API, which over time will make it possible for all the various data services providers (like Atlantis, Infinio etc) who now have a “virtual appliance” based solution to move in to ESXi and simplify their customers environment by removing that additional layer. (To be technically accurate, VAIO APIs are all user level APIs, the filters are all running in user space, only a part of the VAIO framework runs inside the kernel itself.) On top of that, as it is implemented on the “right” layer it will be supported for VMFS (FC/iSCSI/FCoE etc), NFS, VVols and VSAN based infrastructures. The below diagram shows where it sits.

VAIO software services are implemented before the IO is directed to any physical device and does not interfere with normal disk IO. In order to use VAIO you will need to use vSphere 6.0 Update 1. On top of that of course you will need to procure a solution from one of the VMware partners who are certified for it, VMware provides the framework – partners provide the data services!

As far as I know the first two to market will be EMC and Sandisk. Other partners who are working on VAIO based solutions and you can expect to see release something are Actifio, Primaryio, Samsung, HGST and more. I am hoping to be able to catch up with one or two of them this week or over the course of the next week so I can discuss it a bit more in detail.