I’ve been doing Citrix XenApp performance tests over the last couple of days. Our goal was simple: as many user sessions on a single ESX host as possible, not taking per-VM cost into account. After reading the Project VRC performance tests, we decided to give both 1 vCPU VMs and 2 vCPU VMs a try. Because the customer was using brand-new Dell hardware with AMD processors, we also wanted to test with “virtualized MMU” set to forced. For a 32-bit Windows OS this setting needs to be set to force, otherwise it will not be used. (Alan Renouf was kind enough to write a couple of lines of PowerShell that enable this feature for a specific VM, a cluster, or every single VM you have. Thanks Alan!)

We wanted to make sure that the user experience wasn’t degraded and that ESX would still be able to schedule tasks within a reasonable %RDY time (< 20% per VM). Combining 1 vCPU and 2 vCPU, each with and without virtualized MMU, gives four test scenarios. As I said, our goal was to get as many user sessions on a box as possible. We didn’t conduct an objective, well-prepared performance test, so I’m not going to elaborate on the results in depth; in this situation, 1 vCPU with virtualized MMU and scaling out the number of VMs resulted in the most user sessions per ESX host.
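The “< 20% per VM” criterion is easier to apply when you know how %RDY relates to the raw ready-time counter. The sketch below converts a ready time summation in milliseconds, taken over one sample interval, into a percentage. It assumes the 20-second interval of the vCenter real-time charts; the function names, the `vcpus` normalization option and the sample values are hypothetical, and because the VM-level counter accumulates across all vCPUs, dividing by the vCPU count is a choice you may or may not want when comparing 1 vCPU and 2 vCPU VMs.

```python
def ready_percent(ready_ms: float, interval_s: float = 20.0, vcpus: int = 1) -> float:
    """Convert a CPU ready summation (ms) over one sample interval into %RDY.

    Assumption: interval_s defaults to the 20 s vCenter real-time interval.
    vcpus > 1 spreads the VM-level value across its vCPUs (optional normalization).
    """
    if interval_s <= 0 or vcpus < 1:
        raise ValueError("interval_s must be positive and vcpus at least 1")
    return ready_ms / (interval_s * 1000.0) * 100.0 / vcpus


def within_ready_budget(ready_ms: float, interval_s: float = 20.0,
                        vcpus: int = 1, limit_pct: float = 20.0) -> bool:
    """True when the VM stays below the %RDY limit used as the test criterion."""
    return ready_percent(ready_ms, interval_s, vcpus) < limit_pct


if __name__ == "__main__":
    # Hypothetical samples: ready time summation in ms over one 20 s interval.
    samples = {"xa-vm01": 1200.0, "xa-vm02": 4500.0}
    for vm, ready_ms in samples.items():
        pct = ready_percent(ready_ms)
        print(f"{vm}: {pct:.1f}% RDY, within budget: {within_ready_budget(ready_ms)}")
```

With these numbers the first VM sits at 6% and the second at 22.5%, which would fail the criterion used in these tests.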

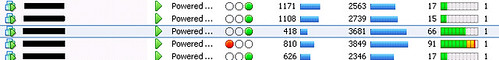

But that’s not what this article is about. It’s about virtualized MMU and the effect it has on Transparent Page Sharing, aka TPS. One thing we noticed was that the amount of memory used in a VM with virtualized MMU enabled was higher than in a “normal” VM. In the following screenshot the third and fourth VMs had virtualized MMU enabled. As you can see, the amount of memory used is substantially higher, while all of them are serving the same number of user sessions.

We concluded that when virtualized MMU was set to “force”, TPS didn’t take place, or at least took place to a lesser degree. But we’re not scientists or developers, so I decided to send an email to our engineers. Carl Waldspurger was one of the people who replied; Carl wrote the famous PDF on TPS, and this is an excerpt from his reply:

On an RVI-enabled system, we try to aggressively back all guest memory with large pages whenever possible. However, page-sharing works only with 4KB small pages, not with 2MB large pages.
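To make the granularity point concrete, here is a toy model, not how the VMkernel actually implements TPS. It backs identical guest memory either with 4 KB pages or with 2 MB pages, then counts how many bytes content-based sharing could reclaim when only 4 KB pages are sharing candidates. The helper names are hypothetical, and hashing pages to find matches is a simplification: a real implementation would compare full page contents before sharing.

```python
import hashlib

SMALL_PAGE = 4 * 1024          # 4 KB small page
LARGE_PAGE = 2 * 1024 * 1024   # 2 MB large page (512 small pages)


def back_memory(guest: bytes, use_large_pages: bool) -> list[bytes]:
    """Split guest memory into the pages that would back it (hypothetical helper)."""
    size = LARGE_PAGE if use_large_pages else SMALL_PAGE
    return [guest[i:i + size] for i in range(0, len(guest), size)]


def reclaimable_bytes(pages: list[bytes]) -> int:
    """Bytes that sharing could reclaim; only 4 KB pages are candidates in this model."""
    seen: set[bytes] = set()
    duplicates = 0
    for page in pages:
        if len(page) != SMALL_PAGE:
            continue  # 2 MB pages are skipped entirely, as described in the reply
        digest = hashlib.sha256(page).digest()
        if digest in seen:
            duplicates += 1  # an identical copy already exists and could be shared
        else:
            seen.add(digest)
    return duplicates * SMALL_PAGE


if __name__ == "__main__":
    vm = bytes(LARGE_PAGE)  # two VMs with identical (zero-filled) 2 MB of memory
    small = back_memory(vm, False) + back_memory(vm, False)
    large = back_memory(vm, True) + back_memory(vm, True)
    print("small pages:", reclaimable_bytes(small), "bytes reclaimable")
    print("large pages:", reclaimable_bytes(large), "bytes reclaimable")
```

The same guest contents yield almost 4 MB of savings with small pages and nothing with large pages, which matches the higher memory usage we saw on the VMs with virtualized MMU enabled.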

After a quick internet search for “large pages” I found this PDF on large page performance, which indeed supports our theory:

In ESX Server 3.5 and ESX Server 3i v3.5, large pages cannot be shared as copy‐on‐write pages. This means, the ESX Server page sharing technique might share less memory when large pages are used instead of small pages.

I can imagine you normally won’t overcommit memory on a cluster running Citrix XenApp VMs. With RVI enabled, and thus less immediate page sharing, I would be careful with overcommitting. Don’t get me wrong, it’s not that you can’t overcommit memory, but it will have its effect:

When free machine memory is low and before swapping happens, the ESX Server kernel attempts to share identical small pages even if they are parts of large pages. As a result, the candidate large pages on the host machine are broken into small pages.
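The behavior quoted above can be sketched as a second toy model. While free memory stays above a threshold, large pages are left intact and are not sharing candidates; once free memory drops below it, every large page that contains at least one 4 KB sub-page with an identical copy elsewhere is broken into 512 small pages. The threshold parameter and function names are hypothetical, and the real trigger and page-selection logic in ESX is more involved. The `broken` count is the part that hints at the performance cost, since those mappings lose their large-page benefit.

```python
from collections import Counter

SMALL_PAGE = 4 * 1024
LARGE_PAGE = 2 * 1024 * 1024


def split_large_page(page: bytes) -> list[bytes]:
    """Break one 2 MB page into its 512 constituent 4 KB pages."""
    return [page[i:i + SMALL_PAGE] for i in range(0, len(page), SMALL_PAGE)]


def pages_after_pressure(backing: list[bytes], free_bytes: int,
                         low_free_bytes: int) -> tuple[list[bytes], int]:
    """Return the resulting backing pages and the number of large pages broken.

    Hypothetical model: nothing happens while free memory is at or above
    low_free_bytes; below it, candidate large pages are split into small pages.
    """
    if free_bytes >= low_free_bytes:
        return list(backing), 0
    # Count every 4 KB sub-page's contents across all backing pages.
    counts = Counter(
        sub
        for page in backing
        for sub in (split_large_page(page) if len(page) == LARGE_PAGE else [page])
    )
    result: list[bytes] = []
    broken = 0
    for page in backing:
        if len(page) == LARGE_PAGE:
            subs = split_large_page(page)
            if any(counts[sub] > 1 for sub in subs):  # candidate: has shareable content
                result.extend(subs)
                broken += 1
                continue
        result.append(page)
    return result, broken
```

For example, with one zero-filled large page and one large page whose sub-pages are all unique, only the first is broken once free memory falls below the threshold.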

This can degrade performance at exactly the moment you least want it. Keep this in mind when deploying VMs with virtualized MMU enabled, and base your decision on these facts! Test what effect overcommitting will have on your environment when virtualized MMU is enabled.

By the way, I also noticed that Virtualfuture.info just released a post on the 1 vCPU vs. 2 vCPU topic. They support the Project VRC outcome. Our outcome was different, but as I said, our testing methodology wasn’t consistent in any way and we probably had different goals and criteria. I’m also wondering whether the people from Project VRC and/or Virtualfuture.info checked the effect on %RDY when additional vCPUs were added.

Do 64-bit guests have to use virtualized MMU? If so, would running many 64-bit VMs lower page sharing in the same way as forcing virtualized MMU on 32-bit VMs?

No, they don’t, but you need to specifically switch it off.

Hi Duncan, very interesting point, but where it says “might share less memory when large pages are used”, do we know how much less?


I assume we are talking about ESX 4.0? How does this stack up on newer hardware like Nehalem, or even with 4.1? Just curious, because TPS and vMMU still seem to be talked about a lot, even on the forums. Your input is appreciated!

The same thing still applies: large pages are not deduplicated until there is contention.

Hi Duncan, since this causes what I’d call a “false positive” as far as vCenter alarms are concerned, would it make more sense for vCenter to alarm on active memory instead of consumed memory for VMs where virtualized MMU is enabled and there is no contention on the host?

Thanks,

Rob