Some of you may have already noticed that a lot of the configuration details traditionally stored in “esx.conf” have moved elsewhere. So where did they go? They went into “configstore”, and with “configstore” comes a command-line interface called “configstorecli”. I briefly mentioned this in a previous post a few weeks ago. Today I noticed a question on VMTN about renaming a vSwitch on a host, and how you can do that now that the vSwitch details have disappeared from esx.conf.

I figured I should be able to test this in my lab and write a short how-to, so here we go.

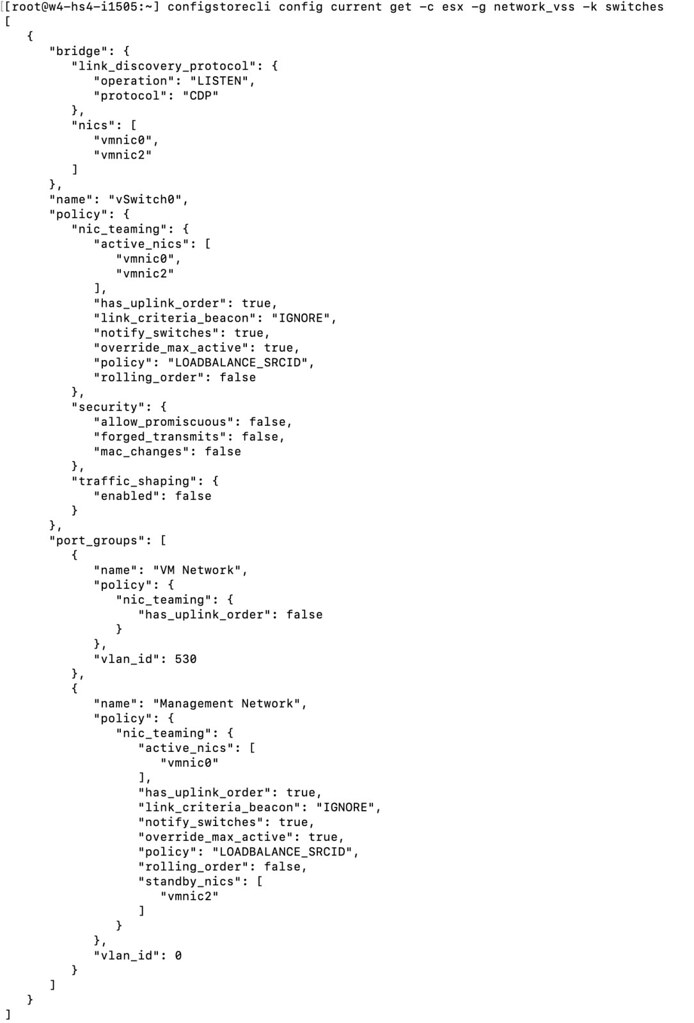

You can look at the current network configuration for your vSwitch using the following command:

```
configstorecli config current get -c esx -g network_vss -k switches
```

Next, dump that output to a JSON file, which you can then edit:

```
configstorecli config current get -c esx -g network_vss -k switches > vswitch.json
```

The file will look something like this:

After you have made the required changes, load the configuration back in from the JSON file:

```
configstorecli config current set -c esx -g network_vss -k switches -i vswitch.json --overwrite
```
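The get, edit, and set steps above can also be wrapped in a small shell function. This is a minimal sketch under a few assumptions, not a supported procedure: the function name `rename_vswitch` and the file name `vswitch.orig.json` are hypothetical, it assumes the vSwitch name appears in the dump as a quoted JSON string such as `"vSwitch0"`, and it assumes the names contain only letters and digits so they are safe to use in a `sed` pattern. It keeps the untouched dump so you can load it back with the same `set` command if something looks wrong.

```shell
#!/bin/sh
# Hypothetical helper: rename a standard vSwitch via configstore.
# Usage: rename_vswitch OLD_NAME NEW_NAME
# Assumes names contain only letters and digits (no sed metacharacters).
rename_vswitch() {
  old="$1"
  new="$2"
  # Dump the current vSwitch configuration and keep this copy untouched.
  configstorecli config current get -c esx -g network_vss -k switches > vswitch.orig.json || return 1
  # Stop if the old name does not appear as a quoted string in the dump.
  if ! grep -q "\"$old\"" vswitch.orig.json; then
    echo "no vSwitch named $old found" >&2
    return 1
  fi
  # Replace the quoted name only, so "vSwitch0" does not also match "vSwitch01".
  sed "s/\"$old\"/\"$new\"/g" vswitch.orig.json > vswitch.json || return 1
  # Load the edited file back into configstore.
  configstorecli config current set -c esx -g network_vss -k switches -i vswitch.json --overwrite
}
```

For example, `rename_vswitch vSwitch0 vSwitchDuncan` would perform the same rename as the manual steps. Note that `sed` replaces every occurrence of the quoted old name in the file, so check `vswitch.json` if your dump might contain that string in more than one place. The host still needs a reboot before the new name shows up.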

I changed the name of my vSwitch0 to “vSwitchDuncan” and, as you can see below, the change worked! Do note, though, that you will need to reboot the host before the change becomes visible.
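After the reboot, you could confirm the new name from the shell as well. The sketch below is hypothetical (the function name `check_vswitch_name` is made up for illustration): it re-reads the switches key with the same `get` command used earlier, and it also checks `esxcli network vswitch standard list`, assuming that command prints a `Name: <vSwitch>` line for each standard vSwitch.

```shell
#!/bin/sh
# Hypothetical check: succeeds only if NAME is present both in the
# configstore switches key and in the esxcli list of standard vSwitches.
# Usage: check_vswitch_name NAME
check_vswitch_name() {
  name="$1"
  # configstore view: look for the quoted name in the JSON output.
  configstorecli config current get -c esx -g network_vss -k switches | grep -q "\"$name\"" || return 1
  # Runtime view: assumes esxcli shows a "Name: <vSwitch>" line per vSwitch.
  esxcli network vswitch standard list | grep -q "Name: $name\$" || return 1
}
```

Running `check_vswitch_name vSwitchDuncan && echo renamed` after the reboot should print `renamed` if both views agree.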

For those who prefer video content, I also created a quick demo which shows the above process: