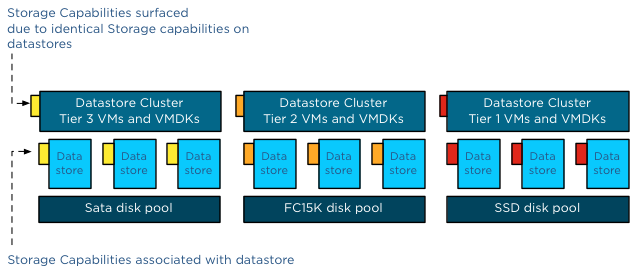

The question of mixing different storage tiers in a single Storage DRS datastore cluster keeps popping up every once in a while. I can understand where it comes from. One would think that VM Storage Profiles combined with Storage DRS would let you put all tiers in one cluster, and that Storage DRS would then balance only within each “tier” of that pool.

Unfortunately, that does not work with vSphere 5.1 and earlier. Storage DRS and VM Storage Profiles (Profile-Driven Storage) are not tightly integrated. This means that when you provision a virtual machine into a datastore cluster and Storage DRS later needs to rebalance that cluster, it will consider ANY datastore within the datastore cluster as a possible destination, regardless of the VM Storage Profile. Yes, I agree, it is not what you hoped for… it is what it is (feature request filed). Frank visualized this nicely in his article a while back.
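To make the limitation concrete, here is a toy model in Python. It is not VMware code or a VMware API: `Datastore`, its `tier` field (standing in for the capability a VM Storage Profile matches on) and both functions are hypothetical. It only contrasts the 5.1 behaviour described above with the profile-aware behaviour people tend to expect.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Datastore:
    name: str
    tier: str  # hypothetical stand-in for the capability a VM Storage Profile matches


def candidates_vsphere51(cluster: List[Datastore], vm_profile_tier: str) -> List[str]:
    # Toy model of vSphere 5.1 and earlier: the VM's profile is ignored during
    # rebalancing, so every datastore in the cluster is a possible destination.
    return [ds.name for ds in cluster]


def candidates_profile_aware(cluster: List[Datastore], vm_profile_tier: str) -> List[str]:
    # What many people expect (and what the feature request is about):
    # only datastores matching the VM's profile are considered.
    return [ds.name for ds in cluster if ds.tier == vm_profile_tier]


if __name__ == "__main__":
    mixed_cluster = [Datastore("gold-01", "gold"), Datastore("silver-01", "silver")]
    print(candidates_vsphere51(mixed_cluster, "gold"))      # both datastores
    print(candidates_profile_aware(mixed_cluster, "gold"))  # only gold-01
```

In a mixed-tier cluster, the first function shows why a "gold" VM can end up on "silver" storage after a rebalance.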

So when you architect your datastore clusters, there are a couple of things to keep in mind. If you ask me, these are the minimum design rules:

- LUNs of the same storage tier
  - See above
- More LUNs = more balancing options
  - Do note that size matters: a single LUN needs to be able to fit your largest VM!
- Preferably LUNs of the same array (so VAAI offload works properly)
  - VAAI XCOPY (used by Storage vMotion, for instance) doesn’t work when going from Array-A to Array-B
- When replication is used, LUNs that are part of the same consistency group
  - You will want to make sure that VMs that need to be consistent from a replication perspective are not moved to a LUN outside of the consistency group
- Similar availability and performance characteristics
  - You don’t want performance or availability to degrade when a VM is moved
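If you want to sanity-check a proposed datastore cluster against these rules before building it, a minimal sketch in Python could look like the one below. Everything in it is a hypothetical illustration, not a VMware tool or API: the `Lun` record, its fields and `check_datastore_cluster` are made up for this example. "Similar availability and performance characteristics" is approximated by the tier label, and the size rule only compares raw capacity (it ignores free space and thin provisioning).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Lun:
    name: str
    tier: str                         # e.g. "gold", "silver" (hypothetical labels)
    array: str                        # backing array identifier (hypothetical)
    consistency_group: Optional[str]  # None when the LUN is not replicated
    capacity_gb: int


def check_datastore_cluster(luns: List[Lun], largest_vm_gb: int) -> List[str]:
    """Return design-rule violations; an empty list means no issues were found."""
    problems = []
    # More LUNs = more balancing options; a single LUN leaves nowhere to move VMs.
    if len(luns) < 2:
        problems.append("fewer than two LUNs: Storage DRS has no balancing options")
    # Rule: all LUNs of the same storage tier.
    if len({lun.tier for lun in luns}) > 1:
        problems.append("mixed storage tiers")
    # Rule: preferably the same array, so VAAI XCOPY offload works for Storage vMotion.
    if len({lun.array for lun in luns}) > 1:
        problems.append("LUNs span multiple arrays (no XCOPY offload between them)")
    # Rule: with replication, all LUNs in the same consistency group.
    # Mixing replicated (group set) and non-replicated (None) LUNs is flagged too.
    if len({lun.consistency_group for lun in luns}) > 1:
        problems.append("LUNs span multiple consistency groups")
    # Rule: every LUN must be able to hold the largest VM (raw capacity only here).
    too_small = [lun.name for lun in luns if lun.capacity_gb < largest_vm_gb]
    if too_small:
        problems.append("too small for largest VM: " + ", ".join(too_small))
    return problems


if __name__ == "__main__":
    cluster = [
        Lun("ds-gold-01", "gold", "array-a", "cg-1", 2048),
        Lun("ds-gold-02", "gold", "array-a", "cg-1", 2048),
        Lun("ds-silver-01", "silver", "array-b", None, 256),
    ]
    for problem in check_datastore_cluster(cluster, largest_vm_gb=500):
        print(problem)
```

With the example cluster above, the third LUN trips every rule at once: different tier, different array, no consistency group and too small for a 500 GB VM.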

Hope this helps,