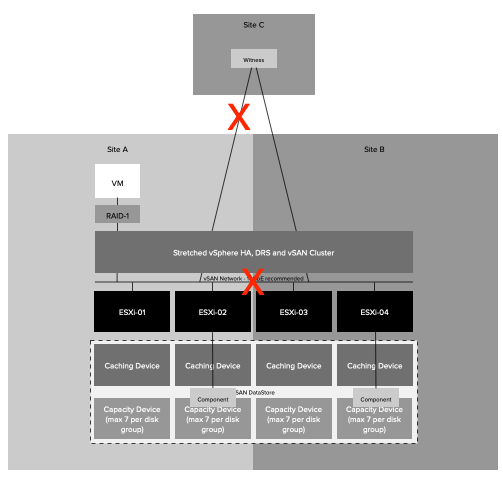

I have seen this question being asked a couple of times in the past months, and to be honest I was a bit surprised people asked about this. Various customers were wondering if it is supported to mix versions of ESXi in the same vSphere or vSAN Cluster? I can be short whether this is support or not, yes it is but only for short periods of time (72hrs max). Would I recommend it? No, I would not!

Why not? Well mainly for operational reasons, it just makes life more complex. Just think about a troubleshooting scenario, you now need to remember which version you are running on which host and understand the “known issues” for each version. Also, for vSAN things are even more complex as you could have “components” running on a different version of ESXi. On top of that, it could even be the case that a certain command or esxcli namespace is not available on a particular version of ESXi.

Another concern is when doing upgrades or updates, you need to take the current version into account when updating, or more importantly when upgrading! Also, remember that firmware/driver combination may be different for a particular version of vSphere/vSAN as well, this could also make life more complex and definitely increases the chances of making mistakes!

Is this documented anywhere? Yes, check out the following KB:

A couple of months ago I was asked if I wanted to write a foreword for an upcoming ebook. I have done this various times, but this one was particularly interesting. Why? Well, there are 3 good reasons:

A couple of months ago I was asked if I wanted to write a foreword for an upcoming ebook. I have done this various times, but this one was particularly interesting. Why? Well, there are 3 good reasons: