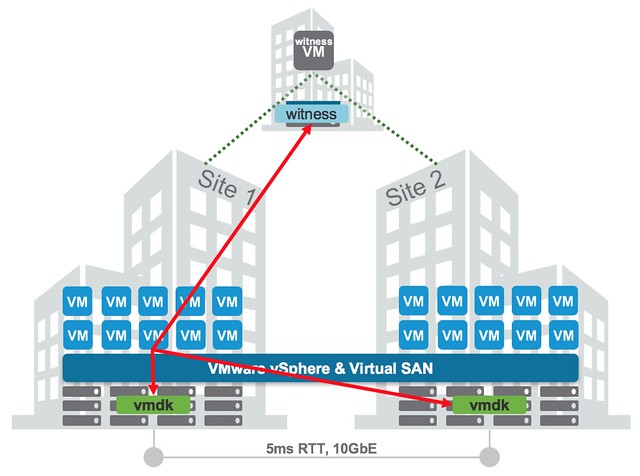

Over the past couple of weeks I have had some interesting questions from folks about different VSAN Stretched Cluster failure scenarios, in particular about what happens during a site partition and how HA and VSAN know which VMs to fail over and which VMs to power off. There are a couple of things I would like to clarify. First, let's start with a diagram that sketches a stretched scenario. In the diagram you see 3 sites: two which are “data” sites and one which is used for the “witness”. This is a standard VSAN Stretched configuration.

The typical question now is: what happens when Site 1 is isolated from Site 2 and from the Witness Site, while the Witness and Site 2 remain connected? Is the isolation response triggered in Site 1? What happens to the workloads in Site 1? Are the workloads restarted in Site 2? If so, how does Site 2 know that the VMs in Site 1 are powered off? All very valid questions if you ask me, and if you read the vSphere HA deepdive on this website closely, letter for letter, you will find all the answers in there, but let's make it a bit easier for those who don’t have the time.

First of all, all the VMs running in Site 1 will be powered off. Let it be clear that this is not done by vSphere HA, and it is not the result of an “isolation”, as technically the hosts are not isolated but partitioned. The VMs are killed by a VSAN mechanism, and they are killed because they no longer have access to any of their components. (Local components are not accessible as there is no quorum.) By the way, you can disable this mechanism through the advanced host settings, although I discourage you from doing so: set the advanced host setting called VSAN.AutoTerminateGhostVm to 0.

In the second site a new HA master node will be elected. That master node will validate which VMs are supposed to be powered on; it knows this through the “protectedlist”. The VMs that were running in Site 1 will be missing: they are on the list, but not powered on within this partition. As this partition has ownership of the components (quorum), it will now be capable of powering on those VMs.
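To make the reasoning above concrete, here is a minimal sketch of the decision the new master makes. It is only an illustration of the logic described in this post, not how the HA agent is actually implemented; the function name, the VM names and the `accessible_vms` set (standing in for "this partition has quorum for the VM's components") are all hypothetical.

```python
def restart_candidates(protected_vms, running_in_partition, accessible_vms):
    """Return the VMs a master in this partition would try to power on.

    protected_vms        -- VMs on the (hypothetical) protected list
    running_in_partition -- VMs currently powered on in this partition
    accessible_vms       -- VMs whose storage this partition can access
    """
    # On the protected list, but not powered on within this partition.
    missing = set(protected_vms) - set(running_in_partition)
    # Only VMs whose components are accessible here can be restarted.
    return sorted(vm for vm in missing if vm in accessible_vms)


# Illustrative data: vm-a and vm-b were running in Site 1.
protected = {"vm-a", "vm-b", "vm-c", "vm-d"}
running_site2 = {"vm-c", "vm-d"}
accessible = {"vm-a", "vm-b", "vm-c", "vm-d"}

print(restart_candidates(protected, running_site2, accessible))
```

With this made-up data, the two VMs that were running in Site 1 end up as the restart candidates in the partition formed by Site 2 and the Witness.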

Finally, how do the hosts in Partition 2 know that the VMs in Partition 1 have been powered off? Well, they don’t. However, Partition 2 has quorum, meaning that it has the majority of the votes / components (2 out of 3) and as such ownership. The hosts in Partition 2 do know that this means it is safe to power on those VMs, as the VMs in Partition 1 will be killed by the VSAN mechanism.
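The “2 out of 3” majority can be sketched in a few lines. This is a simplified model that assumes exactly one vote per data site copy and one for the witness, as in the scenario described here; the names `VOTES` and `has_quorum` are made up for the illustration and are not part of any VSAN API.

```python
# Simplified assumption: one vote per data site copy plus one for the witness.
VOTES = {"site1": 1, "site2": 1, "witness": 1}


def has_quorum(partition, votes=VOTES):
    """True if the members of this partition hold a strict majority of votes."""
    total = sum(votes.values())
    held = sum(votes[member] for member in partition)
    return held * 2 > total


# Site 1 is cut off; Site 2 and the Witness can still talk to each other.
print(has_quorum({"site2", "witness"}))  # Partition 2
print(has_quorum({"site1"}))             # Partition 1
```

Because at most one partition can hold a strict majority, only one side can ever own the components, which is why Partition 2 does not need to “see” the power-off in Partition 1.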

I hope that helps. For more details, make sure to read the clustering deepdive, which can be downloaded here for free.

Duncan,

I feel you are talking about two different things here, and I don’t know how much intercommunication is there between them, but it would be great to have a document that states it clearly. Namely, HA and VSAN.

So far, I had the idea that quorum is not a mechanism that HA uses. Now you say “partition 2 has quorum and .. it is safe to power on”. This sentence crosses the HA – VSAN boundary 🙂

But so does the AutoTerminateGhostVM feature, I guess.

I had the impression that VSAN loss of quorum would just drop the storage service, and HA would react by BAU (business as usual), master able to lock the DS of a VM lost track of ESXi in charge, so power up. On the other side, oops, APD.

-Carlos

“It has quorum” as in “majority”. HA doesn’t use the quorum mechanism itself. But in this case VSAN is the storage system, and VSAN controls which partition has access to disk. The partition which has access to the majority of the components of the VM will have access. HA + VSAN does not support APD handling today, hence the need for the kill mechanism as described. Hope this helps.

Hello!

I have tested this VSAN configuration (by shutting down the LAN and VSAN network on the preferred site) and I got a very strange result. After I switched off the LAN and VSAN network on the preferred site, all VMs from the preferred site were restarted on the secondary site, which is fine. But when I switched the LAN and VSAN network on the preferred site back on, I saw that all VSAN cluster VMs were restarted again. That is not good!

That is more than likely caused by a network misconfiguration. HA does not randomly restart VMs. Look in your FDM log for host isolations.

I configured an IP address from the VSAN network in the das.isolationaddress0 option. Which source vmk interface does the ESXi HA agent use when it tries to ping this IP address: the vmk interface for the management network or the vmk interface for the VSAN network?

If it is the vmk interface for VSAN, then I don’t see any problem with the network, because all ESXi hosts can successfully ping this IP address from the CLI (vmkping -I ‘vmkVSAN’ ‘IP address’).

Do you have any recommendation for the case when one of the sites is permanently (or for a long time) out of operation? How can data redundancy be restored in the remaining site to eliminate the single point of failure?

If I have “spare” hardware, can I split the remaining site into two groups of hosts to “simulate” a second site and let VSAN create a replica, or “merge” the stretched cluster into a standard cluster?

Thanks.

You can just break down the current stretched cluster in the UI and build a new one, and VSAN will do the rest for you indeed.